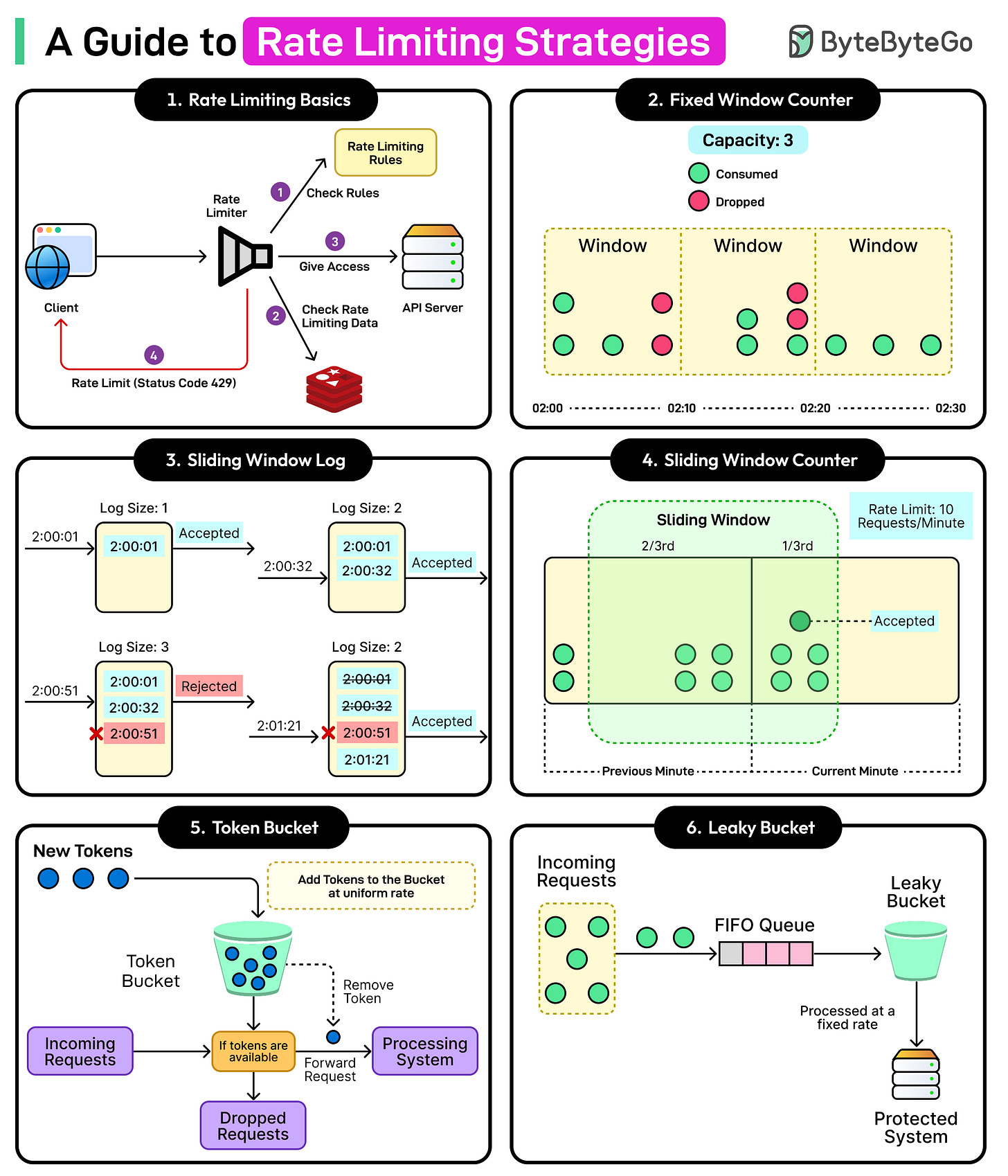

A Guide to Rate Limiting Strategies

No matter how many resources are allocated, every system has a finite capacity beyond which it stops operating efficiently. Traffic can arrive in bursts, clients retry aggressively, and shared infrastructure turns one team’s spike into everyone’s outage.

This is where rate limiting helps as a defensive and fairness mechanism. It protects services from overload and abuse, shapes traffic to match real capacity, and ensures that high-value work does not drown in noise.

Rate limiting matters because it enforces a defined policy at the moment a request hits the system. The limiter decides whether a request enters the system now, later, or not at all.
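To make the "now, later, or not at all" decision concrete, here is a minimal sketch in Python. It assumes a simple fixed-window counter, and the names `Decision`, `FixedWindowLimiter`, `limit`, `window`, and `max_wait` are hypothetical, chosen only for illustration. Real limiters usually apply more refined policies, so treat this as one possible shape of the decision rather than a recommended implementation.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Tuple


class Decision(Enum):
    ALLOW = "allow"    # the request enters the system now
    DELAY = "delay"    # the request may enter later, after a wait
    REJECT = "reject"  # the request does not enter at all


@dataclass
class FixedWindowLimiter:
    """Hypothetical limiter: at most `limit` requests per `window` seconds."""
    limit: int               # assumed policy value: requests admitted per window
    window: float            # window length in seconds
    max_wait: float          # longest wait we are willing to suggest to a client
    window_start: float = 0.0
    count: int = 0

    def decide(self, now: float) -> Tuple[Decision, float]:
        """Return the decision and a suggested wait in seconds."""
        if now - self.window_start >= self.window:
            # A new window has begun, so reset the counter.
            self.window_start = now
            self.count = 0
        if self.count < self.limit:
            self.count += 1
            return Decision.ALLOW, 0.0
        # Over the limit: compute the time left until the window resets.
        wait = self.window_start + self.window - now
        if wait <= self.max_wait:
            return Decision.DELAY, wait
        return Decision.REJECT, wait


# Usage: timestamps are passed in explicitly so the behavior is easy to follow.
limiter = FixedWindowLimiter(limit=2, window=1.0, max_wait=0.5)
for t in (0.0, 0.1, 0.2, 0.7, 1.0):
    decision, wait = limiter.decide(t)
    print(f"t={t:.1f}s -> {decision.value} (wait {wait:.1f}s)")
```

Passing the timestamp in, rather than reading a clock inside `decide`, keeps the sketch deterministic. In a running service the caller would typically supply something like `time.monotonic()`.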

A good policy aligns with both reliability and user experience. It can protect downstream applications without surprising clients. In other words, rate limiting is not a feature for edge cases. It is part of the core reliability story, as essential as retries, timeouts, and circuit breakers.

In this article, we will look at why rate limiting is needed and at practical rate limiting strategies suited to different scenarios.