EP176: How Does SSO Work?

This week’s system design refresher:

How Does SSO Work?

Best Practices in API Design

Key Terms in Domain-Driven Design

Top AI Agent Frameworks You Should Know

How Do OpenAI’s GPT-OSS 120B and 20B Models Work?

ByteByteGo Technical Interview Prep Kit

SPONSOR US

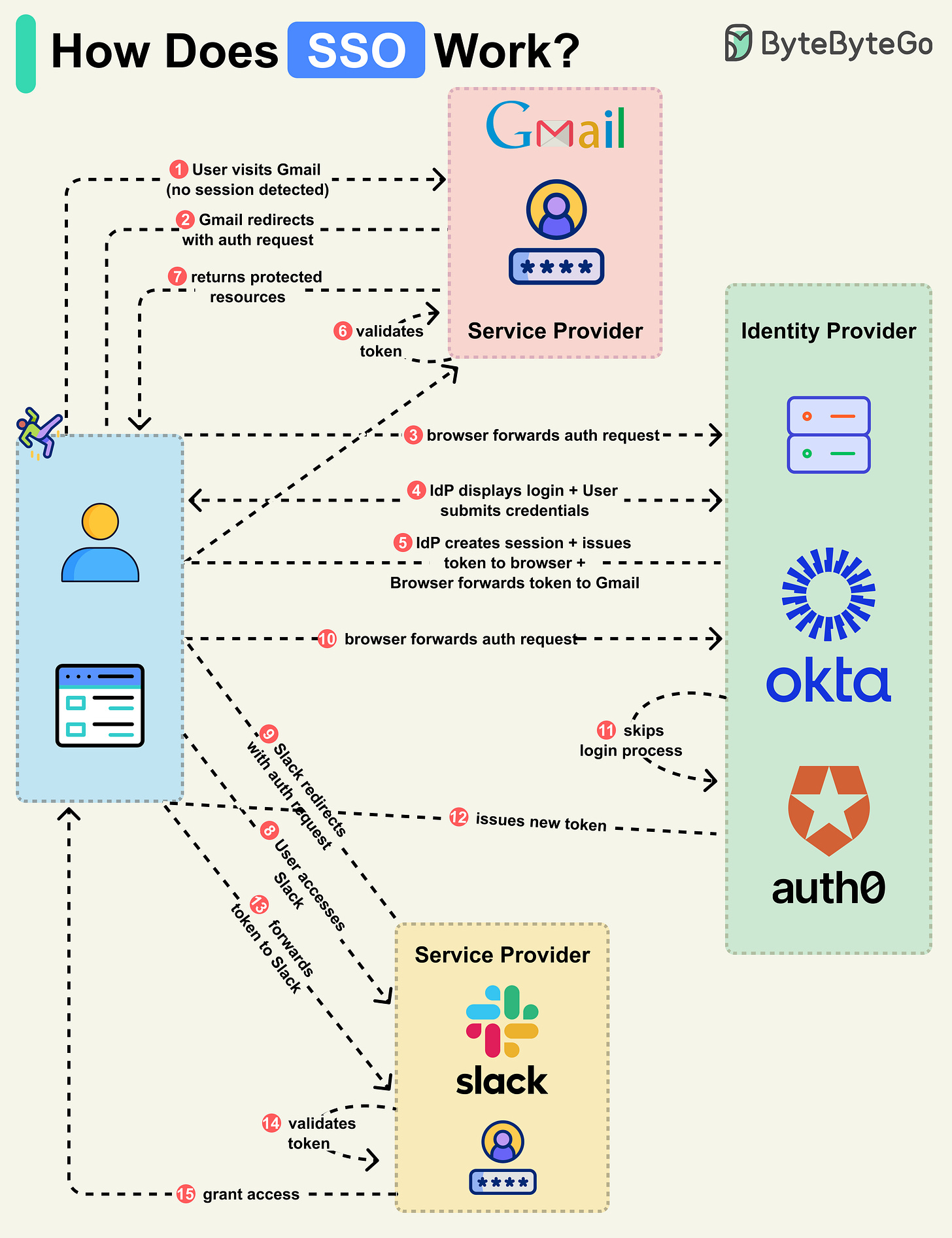

How Does SSO Work?

Single Sign-On (SSO) is an authentication scheme. It allows a user to log in to different systems using a single ID.

Let’s walk through a typical SSO login flow:

Step 1: A user accesses a protected resource on an application like Gmail, which is a Service Provider (SP).

Step 2: The Gmail server detects that the user is not logged in and redirects the browser to the company’s Identity Provider (IdP) with an authentication request.

Step 3: The browser sends the user to the IdP.

Step 4: The IdP shows the login page where the user enters their login credentials.

Step 5: The IdP creates a secure token and returns it to the browser. The IdP also creates a session for future access. The browser forwards the token to Gmail.

Step 6: Gmail validates the token to ensure it comes from the IdP.

Step 7: Gmail returns the protected resource to the browser based on what the user is allowed to access.

This completes the basic SSO login flow. Let’s see what happens when the user navigates to another SSO-integrated application, like Slack.

Step 8-9: The user accesses Slack, and the Slack server detects that the user is not logged in. It redirects the browser to the IdP with a new authentication request.

Step 10: The browser sends the user back to the IdP.

Step 11-13: Since the user has already logged in with the IdP, it skips the login process and instead creates a new token for Slack. The new token is sent to the browser, which forwards it to Slack.

Step 14-15: Slack validates the token and grants the user access accordingly.
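The flow above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not how any real IdP is implemented: the `IdentityProvider` and `ServiceProvider` classes, the `IDP_SECRET` shared key, and the token layout are all hypothetical, and browser redirects are replaced by direct method calls. Production SSO protocols such as SAML and OpenID Connect typically use asymmetric signatures rather than a shared secret.

```python
import base64
import hashlib
import hmac
import json
import time

# Hypothetical shared secret between the IdP and its service providers (demo only).
IDP_SECRET = b"demo-secret"


class IdentityProvider:
    def __init__(self, users):
        self._users = users      # username -> password (plain text for the demo only)
        self._sessions = set()   # users who already hold an IdP session

    def authenticate(self, username, password=None, audience="gmail"):
        """Issue a token; ask for credentials only when no IdP session exists."""
        if username not in self._sessions:
            if password is None or self._users.get(username) != password:
                raise PermissionError("login required")
            self._sessions.add(username)  # steps 4-5: log in and create a session
        return self._issue_token(username, audience)

    def _issue_token(self, username, audience):
        claims = {"sub": username, "aud": audience, "exp": time.time() + 300}
        payload = json.dumps(claims).encode()
        signature = hmac.new(IDP_SECRET, payload, hashlib.sha256).digest()
        return (base64.urlsafe_b64encode(payload).decode()
                + "." + base64.urlsafe_b64encode(signature).decode())


class ServiceProvider:
    def __init__(self, name):
        self.name = name

    def validate(self, token):
        """Steps 6 and 14: check the signature, audience, and expiry."""
        payload_b64, signature_b64 = token.split(".")
        payload = base64.urlsafe_b64decode(payload_b64)
        signature = base64.urlsafe_b64decode(signature_b64)
        expected = hmac.new(IDP_SECRET, payload, hashlib.sha256).digest()
        if not hmac.compare_digest(signature, expected):
            raise PermissionError("token was not issued by the IdP")
        claims = json.loads(payload)
        if claims["aud"] != self.name or claims["exp"] < time.time():
            raise PermissionError("token is not valid for this service")
        return claims["sub"]


idp = IdentityProvider({"alice": "s3cret"})
gmail, slack = ServiceProvider("gmail"), ServiceProvider("slack")

gmail_token = idp.authenticate("alice", "s3cret", audience="gmail")
print(gmail.validate(gmail_token))   # alice

# Second application: the IdP session already exists, so no password is needed.
slack_token = idp.authenticate("alice", audience="slack")
print(slack.validate(slack_token))   # alice
```

Binding each token to one audience means a token issued for Gmail is rejected by Slack, which is why the IdP mints a new token in steps 11-13 instead of reusing the first one.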

Over to you: Would you like to see an example flow for another application?

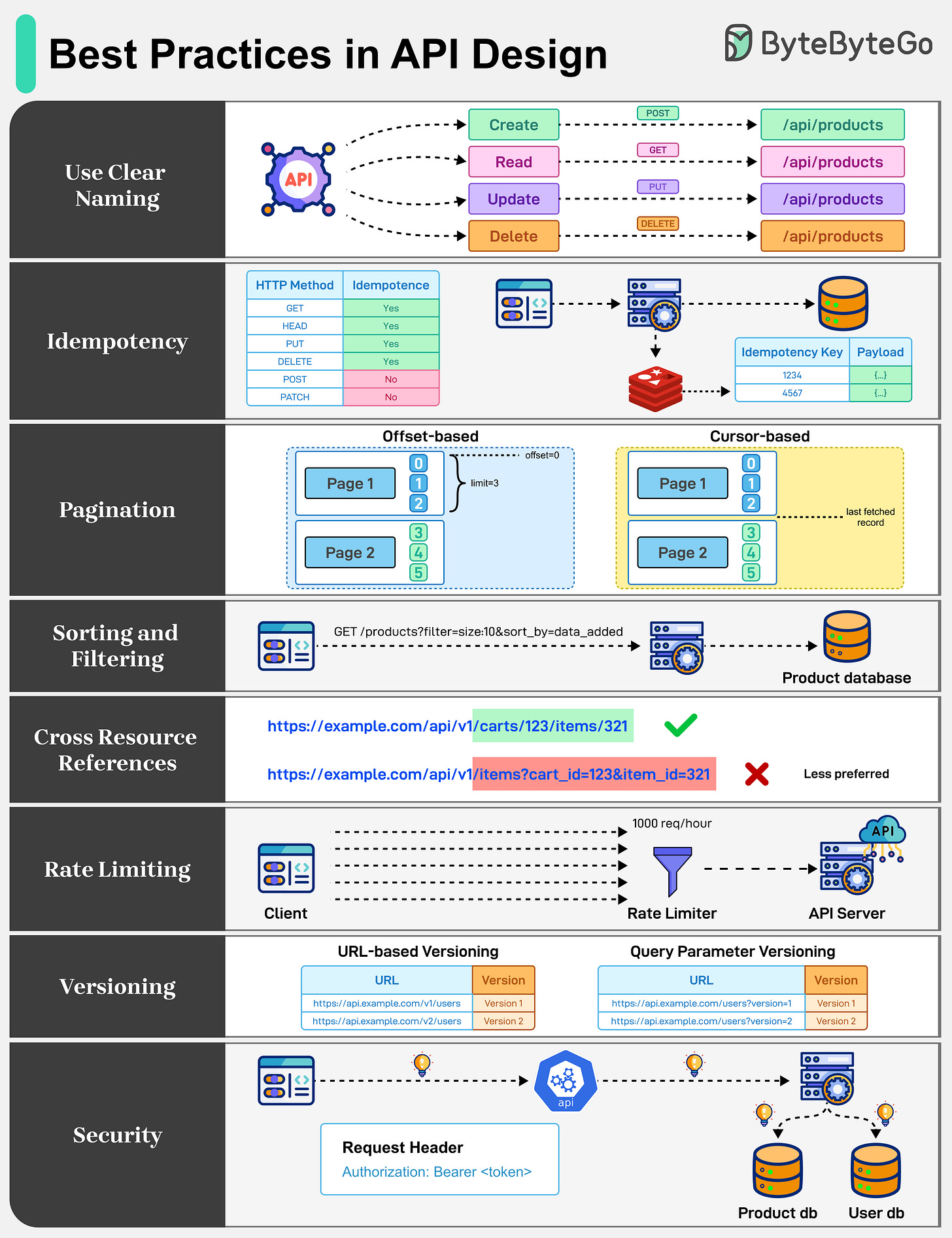

Best Practices in API Design

APIs are the backbone of communication over the Internet. Well-designed APIs behave consistently, are predictable, and grow without friction. Some best practices to keep in mind are as follows:

Use Clear Naming: When building an API, choose straightforward and logical names. Be consistent and stick with intuitive URLs that denote collections.

Idempotency: Design operations to be idempotent so that retries are safe: repeating the same request should produce the same result as sending it once. This matters most for POST operations, which are not idempotent by default.

Pagination: APIs should support pagination to prevent performance bottlenecks and payload bloat. Some common pagination strategies are offset-based and cursor-based.

Sorting and Filtering: Query strings are an effective way to allow sorting and filtering of API responses. This makes it easy for developers to see what filters and sort orders are applied.

Cross Resource References: Use clear linking between connected resources. Avoid excessively long query strings that make the API harder to understand.

Rate Limiting: Rate limiting is used to control the number of requests a user can make to an API within a certain timeframe. This is crucial for maintaining the reliability and availability of the API.

Versioning: When you change API endpoints, version them properly so that existing clients keep working.

Security: API security is mandatory for well-designed APIs. Use proper authentication and authorization with APIs using API Keys, JWTs, OAuth2, and other mechanisms.
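Two of these practices, idempotent POSTs and cursor-based pagination, are easy to see in a small in-memory sketch. Everything named here (`OrdersApi`, `create_order`, `list_orders`, the `idempotency_key` parameter, and the `/orders` routes in the docstrings) is hypothetical and chosen only for illustration; a real service would persist the keys and expire them after some time.

```python
import itertools


class OrdersApi:
    """In-memory stand-in for a hypothetical /orders collection."""

    def __init__(self):
        self._orders = []               # kept in ascending id order
        self._by_key = {}               # idempotency key -> order already created
        self._ids = itertools.count(1)

    def create_order(self, idempotency_key, item):
        """POST /orders: a retry with the same key returns the original order."""
        if idempotency_key in self._by_key:
            return self._by_key[idempotency_key]
        order = {"id": next(self._ids), "item": item}
        self._orders.append(order)
        self._by_key[idempotency_key] = order
        return order

    def list_orders(self, cursor=0, limit=2):
        """GET /orders?cursor=...&limit=...: return orders whose id is above the cursor."""
        page = [o for o in self._orders if o["id"] > cursor][:limit]
        has_more = bool(page) and page[-1]["id"] < self._orders[-1]["id"]
        return {"items": page, "next_cursor": page[-1]["id"] if has_more else None}


api = OrdersApi()
first = api.create_order("key-1", "book")
retry = api.create_order("key-1", "book")   # e.g. the client retried after a timeout
print(first is retry)                        # True: no duplicate order was created

api.create_order("key-2", "pen")
api.create_order("key-3", "lamp")

page = api.list_orders(limit=2)
print([o["id"] for o in page["items"]], page["next_cursor"])   # [1, 2] 2
page = api.list_orders(cursor=page["next_cursor"], limit=2)
print([o["id"] for o in page["items"]], page["next_cursor"])   # [3] None
```

Unlike an offset, the cursor refers to the last item seen, so rows inserted or deleted between requests do not shift the page boundaries.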

Over to you: did we miss anything important?

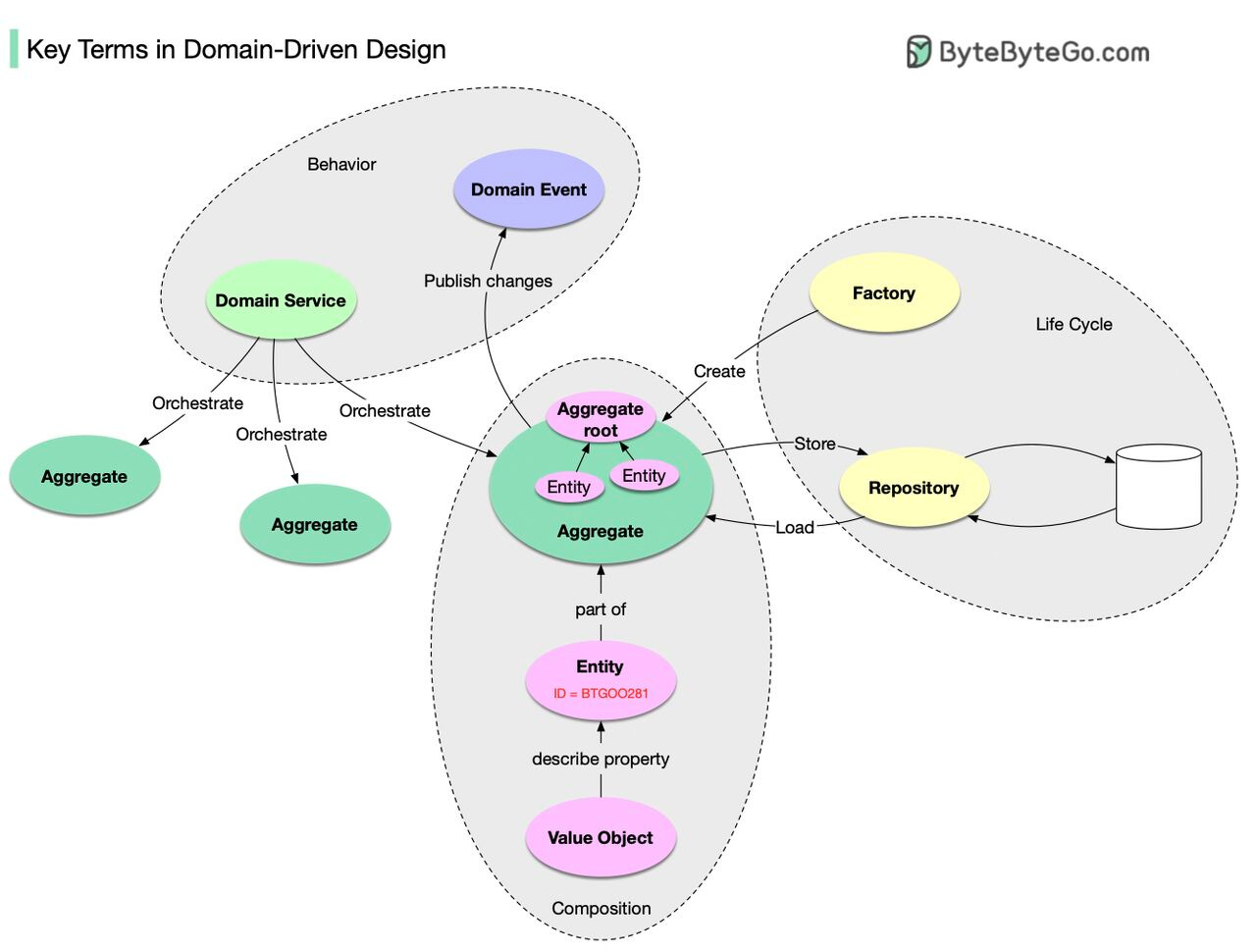

Key Terms in Domain-Driven Design

Have you heard of Domain-Driven Design (DDD), a major software design approach?

DDD was introduced in Eric Evans’ classic book “Domain-Driven Design: Tackling Complexity in the Heart of Software”. The book explains a methodology for modeling complex business domains. It covers a lot of ground, so I'll summarize the basics.

The composition of domain objects:

Entity: a domain object that has an ID and a life cycle.

Value Object: a domain object without an ID. It describes a property of an Entity.

Aggregate: a collection of Entities bound together by an Aggregate Root (which is itself an Entity). It is the unit of storage.

The life cycle of domain objects:

Repository: stores and loads the Aggregate.

Factory: handles the creation of the Aggregate.

Behavior of domain objects:

Domain Service: orchestrates multiple Aggregates.

Domain Event: a description of what has happened to the Aggregate. The event is published so that other components can consume it and reconstruct what happened.

Congratulations on getting this far. Now you know the basics of DDD. If you want to learn more, I highly recommend the book. It might help to simplify the complexity of software modeling.

Over to you: do you know how to check the equality of two Value Objects? How about two Entities?
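One common answer, sketched in Python: Value Objects are compared by their attributes, while Entities are compared by their ID. The `Money` and `Order` classes below are hypothetical examples, not taken from the book.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Money:
    """Value Object: no identity; two instances are equal when all attributes match."""
    amount: int
    currency: str


class Order:
    """Entity (and Aggregate Root in this sketch): equality depends only on its ID."""

    def __init__(self, order_id):
        self.order_id = order_id
        self.lines = []   # (description, Money) pairs owned by this aggregate

    def add_line(self, description, price):
        self.lines.append((description, price))

    def __eq__(self, other):
        return isinstance(other, Order) and self.order_id == other.order_id

    def __hash__(self):
        return hash(self.order_id)


print(Money(10, "USD") == Money(10, "USD"))   # True: same attributes

a, b = Order("o-1"), Order("o-1")
a.add_line("book", Money(10, "USD"))
print(a == b)                                  # True: same ID, even though the state differs
print(a == Order("o-2"))                       # False: different ID
```

Making the Value Object immutable (`frozen=True`) keeps attribute-based equality stable, so instances can be shared and used as dictionary keys safely.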

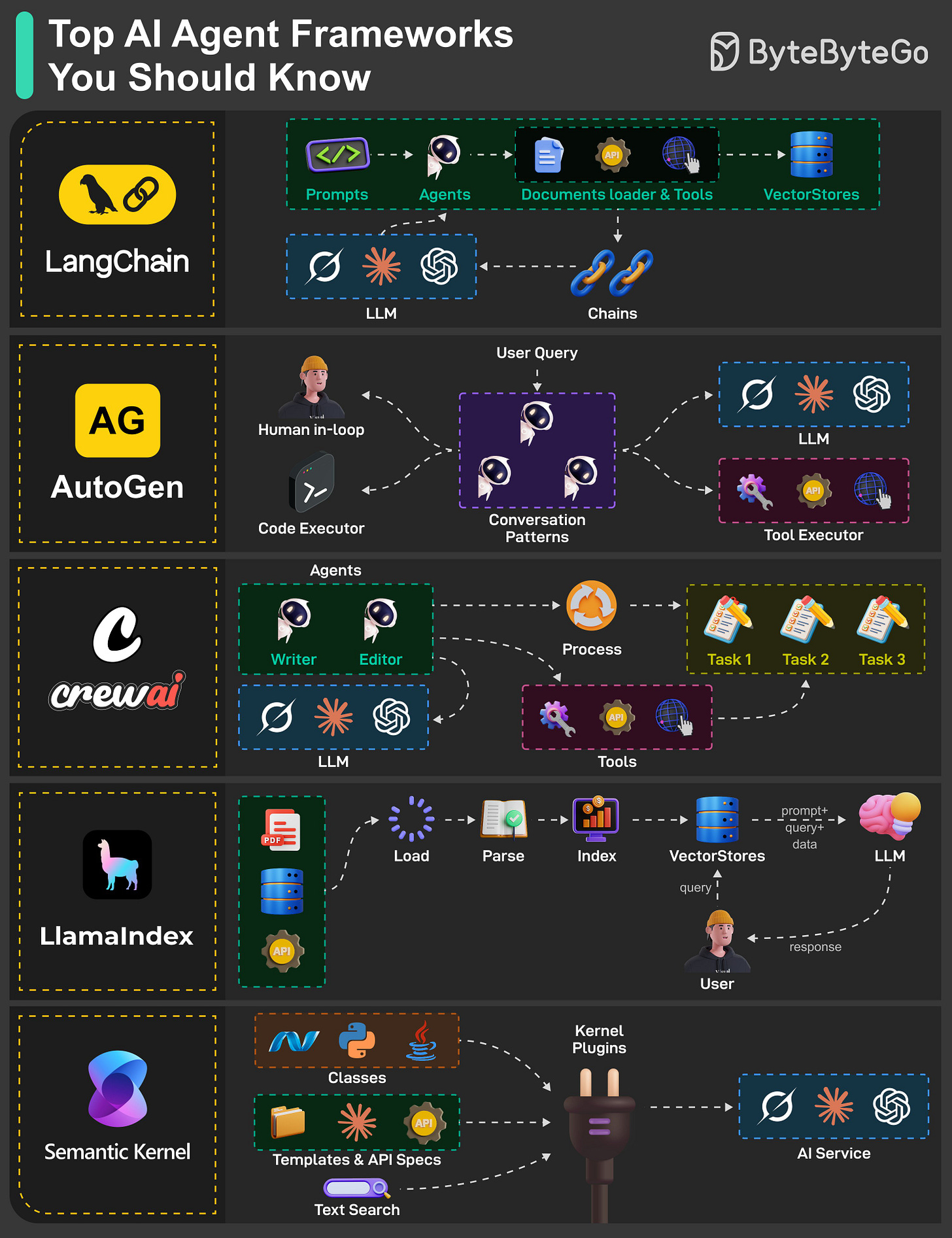

Top AI Agent Frameworks You Should Know

Building smart, independent AI systems is easier with agent frameworks that combine large language models (LLMs) with tools like APIs, web access, or code execution.

LangChain

LangChain makes it simple to connect LLMs with external tools like APIs and vector databases. It allows developers to create chains for sequential task execution and document loaders for context-aware responses.

AutoGen

AutoGen allows you to develop AI agents that can chat with each other or involve humans in the loop. It is like a collaborative workspace where agents can run code, pull in data from tools, or get human feedback to complete a task.

CrewAI

As the name suggests, CrewAI is all about teamwork. It orchestrates teams of AI agents with roles like writers and editors, processing tasks in a structured workflow. It utilizes LLMs and tools (APIs, Internet, code, etc.) to efficiently manage complex task execution and data flow.

LlamaIndex

This framework indexes and queries data from documents, APIs, and vector stores to enhance agent responses. It parses and loads data, enabling LLMs to provide context-aware answers, making it ideal for enterprise document search systems and intelligent assistants that access private knowledge bases.

Semantic Kernel

Semantic Kernel connects AI services (OpenAI, Claude, Hugging Face models, etc.) with a plugin-based architecture that supports skills, templates, and API integrations for flexible workflows. It supports text search and custom workflows for applications.
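What these frameworks have in common is a loop in which an LLM decides whether to call a tool or to answer. The sketch below shows that loop in plain Python and deliberately uses none of the frameworks' APIs. The `fake_llm` function is a hard-coded stand-in for a real model call, and the `calculator` tool, the `TOOLS` registry, and the message format are all hypothetical.

```python
def calculator(expression):
    """Hypothetical tool: handles only 'a + b' style additions."""
    left, right = expression.split("+")
    return str(int(left) + int(right))


TOOLS = {"calculator": calculator}


def fake_llm(messages):
    """Stand-in for a real LLM call: picks the next action from the transcript."""
    last = messages[-1]
    if last["role"] == "user":
        # A real model would choose the tool and its input itself.
        return {"action": "tool", "tool": "calculator", "input": "2 + 3"}
    return {"action": "final", "output": f"The answer is {last['content']}."}


def run_agent(question, llm=fake_llm, max_steps=5):
    messages = [{"role": "user", "content": question}]
    for _ in range(max_steps):
        decision = llm(messages)
        if decision["action"] == "final":
            return decision["output"]
        # Run the requested tool and feed the result back to the model.
        result = TOOLS[decision["tool"]](decision["input"])
        messages.append({"role": "tool", "content": result})
    raise RuntimeError("agent did not finish within max_steps")


print(run_agent("What is 2 + 3?"))   # The answer is 5.
```

The `max_steps` cap is a simple guard against a model that keeps requesting tools and never produces a final answer.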

Over to you: Which AI agent framework have you explored or plan to use?

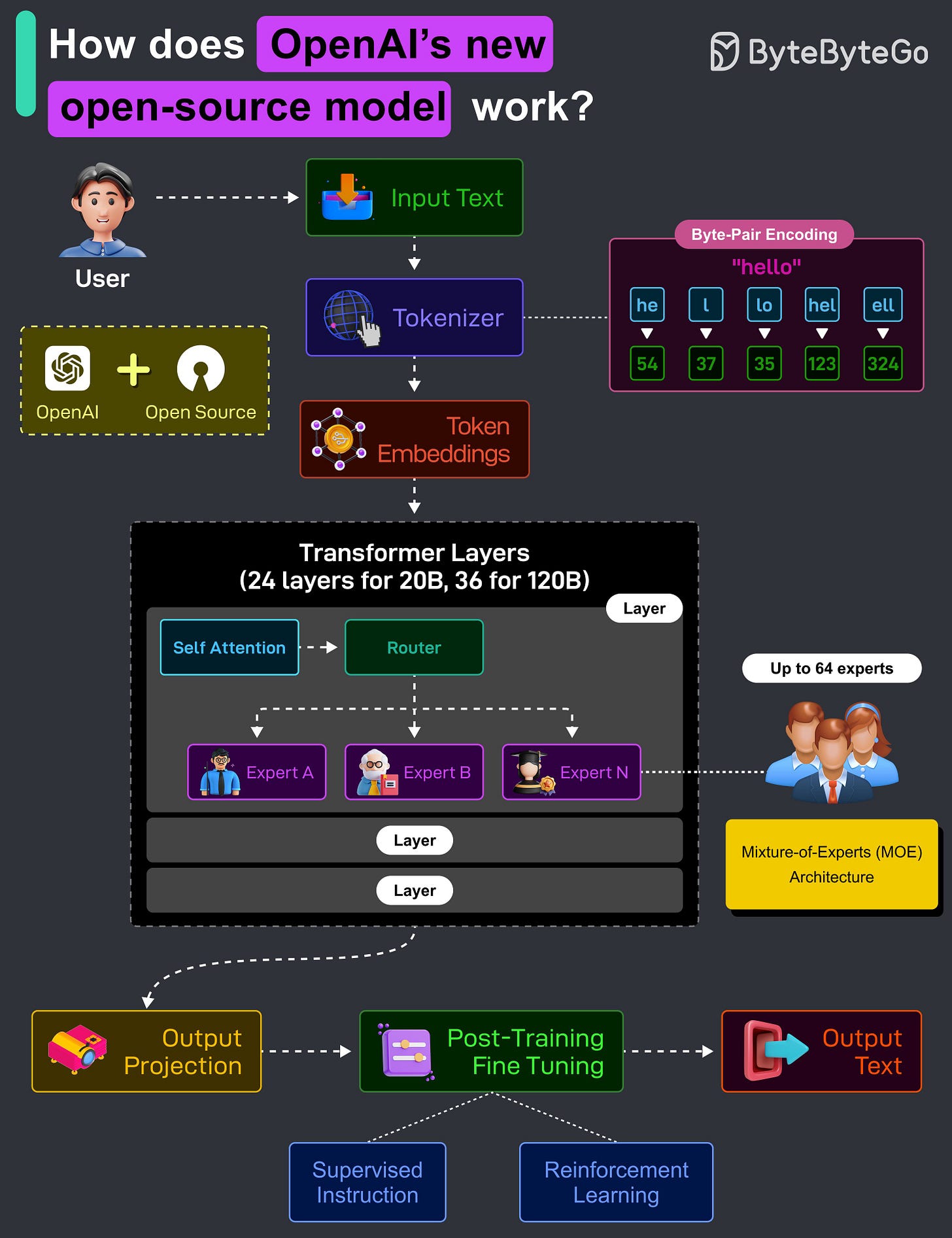

How Do OpenAI’s GPT-OSS 120B and 20B Models Work?

OpenAI has recently released two LLMs, GPT-OSS-120B (about 117 billion parameters) and GPT-OSS-20B (about 21 billion parameters). These are open-weight models released under the Apache 2.0 license.

These models aim to deliver strong real-world performance at low cost. Here’s how they work:

The user provides some input, such as a question or a task. For example, “Explain quantum mechanics in a simple manner”.

The raw text is converted into numerical tokens using Byte-Pair Encoding (BPE). BPE splits the text into frequently occurring subword units. Since it operates at the byte level, it can handle any input, including text, code, emojis, and more.

Each token is mapped to a vector (a list of numbers) using a learned embedding table. This vectorized form is what the model understands and processes.

Transformer layers are where the real computation happens. The 20B Model has 24 Transformer layers, and the 120B Model has 36 Transformer layers. Each layer includes a self-attention module, router, and experts (MoE).

The self-attention module lets the model understand relationships between words across the entire input.

The LLM uses a Mixture-of-Experts (MoE) architecture. Instead of using all model weights for every token, as a dense model does, a router in each layer selects the top 4 experts for each token from a pool of 128 experts in the 120B model (32 in the 20B model). Each expert is a small feedforward network trained to specialize in certain types of inputs. Because only the selected experts run for a given token, the model saves compute while keeping the capacity of a much larger network.

After passing through all layers, the model projects the internal representation back into token probabilities, predicting the next word or phrase.

To make the raw model safe and helpful, it undergoes supervised fine-tuning and reinforcement learning.

Finally, the model generates a response based on the predicted tokens, returning coherent output to the user based on the context.
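The router-and-experts step is the least familiar part of this pipeline, so here is a toy sketch of top-k expert routing in plain Python. It is an illustration under stated assumptions, not OpenAI's implementation: the sizes (`dim`, `num_experts`, `top_k`) are toy values rather than the models' real configuration, each "expert" is a single random linear map instead of a feedforward network, and renormalizing the selected scores with a softmax is one common formulation.

```python
import math
import random


def softmax(scores):
    peak = max(scores)                       # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def make_expert(rng, dim):
    """Toy expert: one random dim x dim linear map."""
    weights = [[rng.uniform(-1, 1) for _ in range(dim)] for _ in range(dim)]
    return lambda x: [sum(w * v for w, v in zip(row, x)) for row in weights]


def moe_layer(x, router_weights, experts, top_k):
    # Router: one score per expert for this token vector.
    scores = [sum(w * v for w, v in zip(row, x)) for row in router_weights]
    # Keep only the top_k highest-scoring experts.
    chosen = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:top_k]
    gates = softmax([scores[i] for i in chosen])
    # Weighted sum of the chosen experts' outputs; the other experts never run.
    out = [0.0] * len(x)
    for gate, idx in zip(gates, chosen):
        for j, value in enumerate(experts[idx](x)):
            out[j] += gate * value
    return out, chosen


rng = random.Random(0)
dim, num_experts, top_k = 4, 8, 2            # toy sizes, chosen only for readability
experts = [make_expert(rng, dim) for _ in range(num_experts)]
router_weights = [[rng.uniform(-1, 1) for _ in range(dim)] for _ in range(num_experts)]

token_vector = [0.5, -0.2, 0.1, 0.9]
output, chosen = moe_layer(token_vector, router_weights, experts, top_k)
print("experts used:", chosen)
print("layer output:", output)
```

Only `top_k` of the experts are evaluated per token, which is where the compute saving comes from: the parameter count grows with the number of experts, but the work per token grows only with `top_k`.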

Over to you: Have you used OpenAI’s open-source models?

Reference: Introducing gpt-oss | OpenAI

ByteByteGo Technical Interview Prep Kit

Launching the All-in-one interview prep. We’re making all the books available on the ByteByteGo website.

What's included:

System Design Interview

Coding Interview Patterns

Object-Oriented Design Interview

How to Write a Good Resume

Behavioral Interview (coming soon)

Machine Learning System Design Interview

Generative AI System Design Interview

Mobile System Design Interview

And more to come

SPONSOR US

Get your product in front of more than 1,000,000 tech professionals.

Our newsletter puts your products and services directly in front of an audience that matters - hundreds of thousands of engineering leaders and senior engineers - who have influence over significant tech decisions and big purchases.

Space Fills Up Fast - Reserve Today

Ad spots typically sell out about 4 weeks in advance. To ensure your ad reaches this influential audience, reserve your space now by emailing sponsorship@bytebytego.com.

> To make the raw model safe and helpful, it undergoes supervised fine-tuning and reinforcement learning.

This does not belong to the inference path. Supervised fine-tuning and reinforcement learning are two post-training approaches.