EP202: MCP vs RAG vs AI Agents

This week’s system design refresher:

MCP vs RAG vs AI Agents

How ChatGPT Routes Prompts and Handles Modes

Agent Skills, Clearly Explained

12 Architectural Concepts Developers Should Know

How to Deploy Services

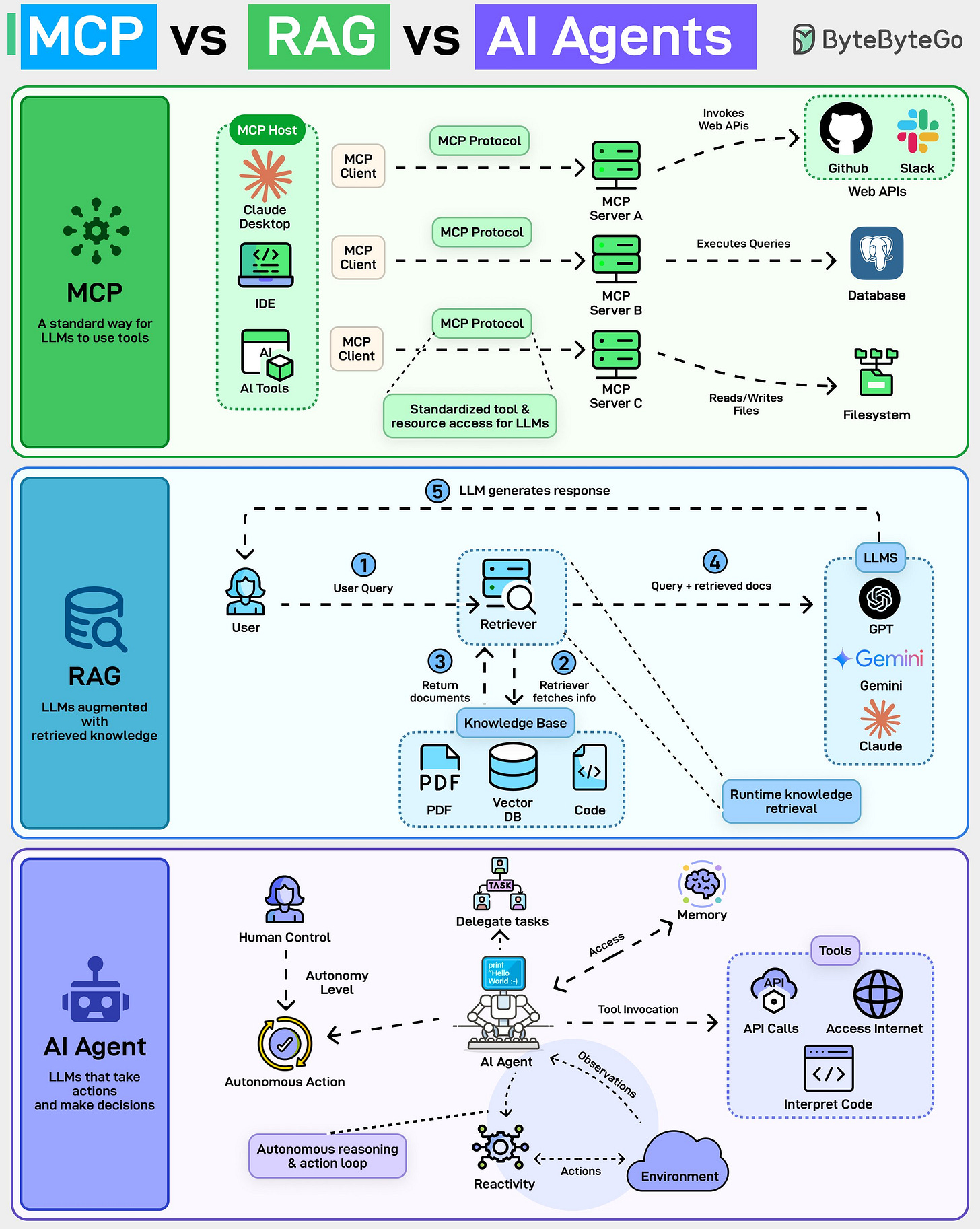

MCP vs RAG vs AI Agents

Everyone is talking about MCP, RAG, and AI Agents. Most people are still mixing them up. They’re not competing ideas. They solve very different problems at different layers of the stack.

MCP (Model Context Protocol) is about how LLMs use tools. Think of it as a standard interface between an LLM and external systems. Databases, file systems, GitHub, Slack, internal APIs.

Instead of every app inventing its own glue code, MCP defines a consistent way for models to discover tools, invoke them, and get structured results back. MCP doesn’t decide what to do. It standardizes how tools are exposed.
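To make the "discover, invoke, get structured results" idea concrete, here is a minimal sketch in plain Python. It is not the MCP SDK or its wire format; `Tool`, `ToolRegistry`, `list_tools`, and `call_tool` are hypothetical names chosen for illustration. Note that the registry only exposes tools. It never chooses one.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List


@dataclass
class Tool:
    name: str
    description: str
    handler: Callable[..., Any]


class ToolRegistry:
    """Hypothetical stand-in for a tool server: it exposes tools, it does not choose them."""

    def __init__(self) -> None:
        self._tools: Dict[str, Tool] = {}

    def register(self, tool: Tool) -> None:
        self._tools[tool.name] = tool

    def list_tools(self) -> List[Dict[str, str]]:
        # Discovery: the model sees names and descriptions, not implementations.
        return [{"name": t.name, "description": t.description} for t in self._tools.values()]

    def call_tool(self, name: str, **arguments: Any) -> Dict[str, Any]:
        # Invocation: every tool answers with the same structured envelope.
        if name not in self._tools:
            return {"ok": False, "error": f"unknown tool: {name}"}
        return {"ok": True, "result": self._tools[name].handler(**arguments)}


registry = ToolRegistry()
registry.register(Tool("add", "Add two integers", lambda a, b: a + b))
```

Calling `registry.list_tools()` is the discovery step and `registry.call_tool("add", a=2, b=3)` is the invocation step. Deciding which of the two to call, and when, is left to whatever sits on top.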

RAG (Retrieval-Augmented Generation) is about what the model knows at runtime. The model stays frozen. No retraining. When a user asks a question, a retriever fetches relevant documents (PDFs, code, vector DBs), and those are injected into the prompt.

RAG is great for:

Internal knowledge bases

Fresh or private data

Reducing hallucinations

But RAG doesn’t take actions. It only improves answers.
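The retrieve-then-inject flow can be sketched in a few lines. This is a toy under loud assumptions: the documents are made up, and the retriever scores by shared words, whereas real systems typically use embeddings and a vector store. `retrieve` and `build_prompt` are hypothetical helper names.

```python
from typing import List

# Made-up documents standing in for a private knowledge base.
DOCS = [
    "Refunds are processed within 5 business days.",
    "The on-call rotation changes every Monday.",
    "Deploys are frozen during the last week of December.",
]


def tokenize(text: str) -> set:
    return {w.strip(".,?!").lower() for w in text.split()}


def retrieve(query: str, docs: List[str], k: int = 1) -> List[str]:
    # Toy scoring: count shared words between the query and each document.
    q = tokenize(query)
    ranked = sorted(docs, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, docs: List[str]) -> str:
    # Injection: retrieved text goes into the prompt; the model itself is unchanged.
    context = "\n".join(f"- {d}" for d in retrieve(query, docs))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


prompt = build_prompt("When are refunds processed?", DOCS)
```

Nothing here calls a tool or changes any state, which is the point: the only output is a better-informed prompt.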

AI Agents are about doing things. An agent observes, reasons, decides, acts, and repeats. It can call tools, write code, browse the internet, store memory, delegate tasks, and operate with different levels of autonomy.
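The observe, reason, decide, act loop can be sketched as below. The `decide` function is a scripted stand-in for the LLM, and the two tools are hypothetical; a real agent would replace both. The step budget is an assumption added so the loop always terminates.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical tools the agent may call.
TOOLS: Dict[str, Callable[[str], str]] = {
    "search": lambda q: f"results for {q}",
    "summarize": lambda text: text.upper(),
}


def decide(goal: str, memory: List[str]) -> Tuple[str, str]:
    # Stand-in for the LLM's reasoning: choose the next action from what has been observed.
    if not memory:
        return ("search", goal)
    if len(memory) == 1:
        return ("summarize", memory[-1])
    return ("finish", memory[-1])


def run_agent(goal: str, max_steps: int = 5) -> str:
    memory: List[str] = []
    for _ in range(max_steps):
        action, arg = decide(goal, memory)  # observe, reason, decide
        if action == "finish":
            return arg
        memory.append(TOOLS[action](arg))   # act, then store the observation
    return "stopped: step budget exhausted"
```

In this sketch the tools could just as well be exposed through an MCP-style interface, and `search` could be a RAG retriever. The loop is the part that makes it an agent.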

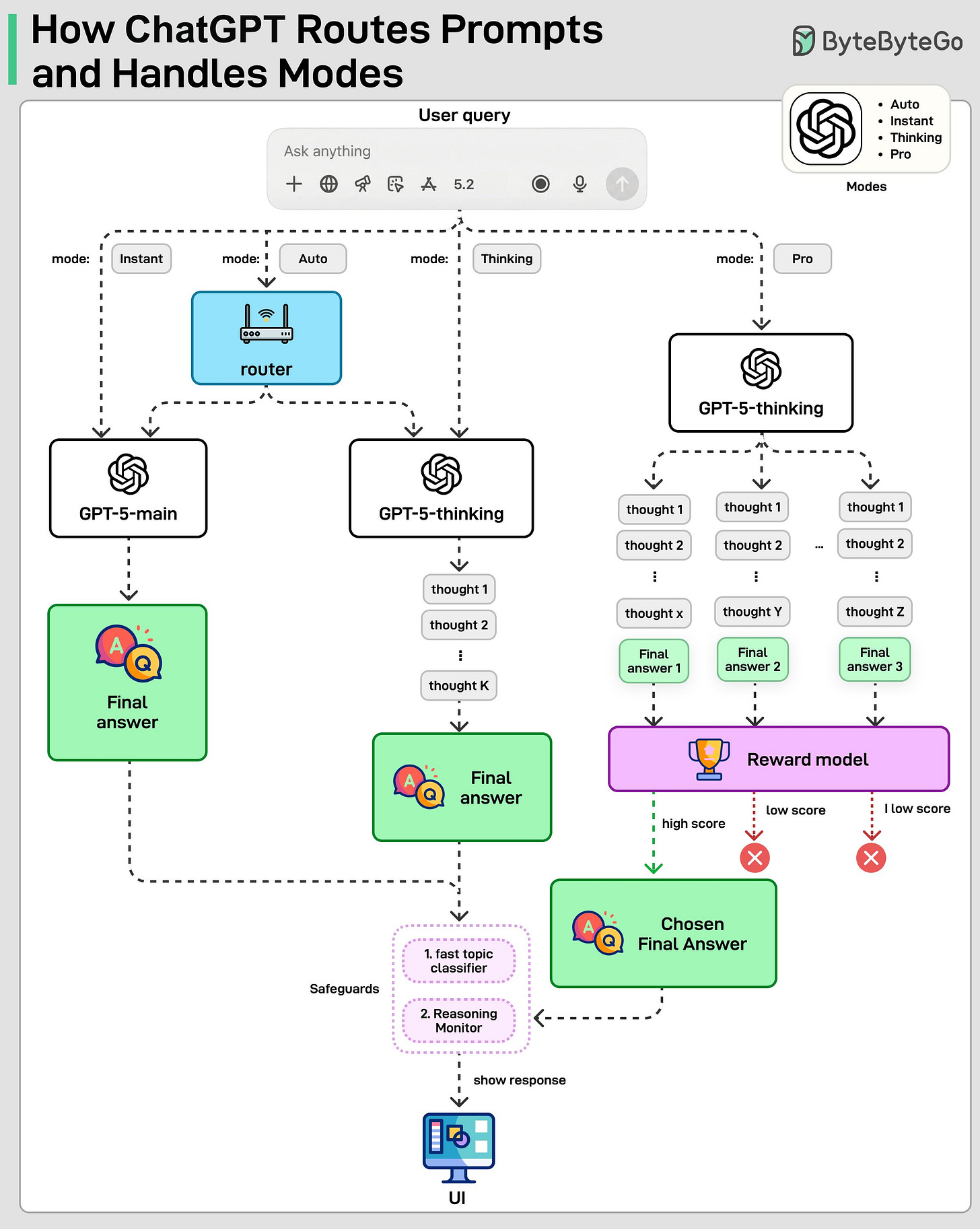

How ChatGPT Routes Prompts and Handles Modes

GPT-5 is not one model.

It is a unified system with multiple models, safeguards, and a real-time router.

This post and diagram are based on our understanding of the GPT-5 system card.

When you send a query, the mode determines which model to use and how much work the system does.

Instant mode sends the query directly to a fast, non-reasoning model named GPT-5-main. It optimizes for latency and is used for simple or low-risk tasks like short explanations or rewrites.

Thinking mode uses a reasoning model named GPT-5-thinking that runs multiple internal steps before producing the final answer. This improves correctness on complex tasks like math or planning.

Auto mode adds a real-time router. A lightweight classifier looks at the query and routes it to GPT-5-main, or to GPT-5-thinking when deeper reasoning is needed.
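Here is a rough sketch of how mode selection and routing fit together. The model names come from the description above, but everything else is an assumption: the keyword list and length threshold in `needs_reasoning` are a made-up heuristic, not how the actual router works.

```python
REASONING_HINTS = ("prove", "plan", "step by step", "debug", "optimize")


def needs_reasoning(query: str) -> bool:
    # Hypothetical heuristic standing in for the lightweight classifier.
    q = query.lower()
    return len(q.split()) > 40 or any(hint in q for hint in REASONING_HINTS)


def route(query: str, mode: str = "auto") -> str:
    if mode == "instant":
        return "GPT-5-main"
    if mode == "thinking":
        return "GPT-5-thinking"
    # Auto mode: let the classifier choose between the two models.
    return "GPT-5-thinking" if needs_reasoning(query) else "GPT-5-main"
```

Explicit modes bypass the classifier entirely; only Auto consults it.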

Pro mode does not use a different model. It uses GPT-5-thinking but samples multiple reasoning attempts and selects the best one using a reward model.
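Sampling several attempts and keeping the highest-scoring one is often called best-of-n. A minimal sketch, assuming a `generate` callable that produces one attempt and a `score` callable that plays the role of the reward model. Both toy functions below are invented for illustration.

```python
import random
from typing import Callable, List


def best_of_n(generate: Callable[[random.Random], str],
              score: Callable[[str], float],
              n: int = 4,
              seed: int = 0) -> str:
    rng = random.Random(seed)
    candidates: List[str] = [generate(rng) for _ in range(n)]  # sample n attempts
    return max(candidates, key=score)                          # keep the best-scoring one


# Toy stand-ins: attempts are guesses at 17 * 3, and the "reward model" prefers guesses near 51.
def toy_generate(rng: random.Random) -> str:
    return str(rng.choice([41, 48, 51, 54]))


def toy_score(answer: str) -> float:
    return -abs(int(answer) - 51)
```

The trade-off is visible in the signature: quality depends on `n` and on how good `score` is, and cost grows linearly with `n`.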

Across all modes, safeguards run in parallel at various stages. A fast topic classifier determines whether the topic is high-risk, followed by a reasoning monitor that applies stricter checks to ensure unsafe responses are blocked.

Over to you: What's your favorite AI chatbot?

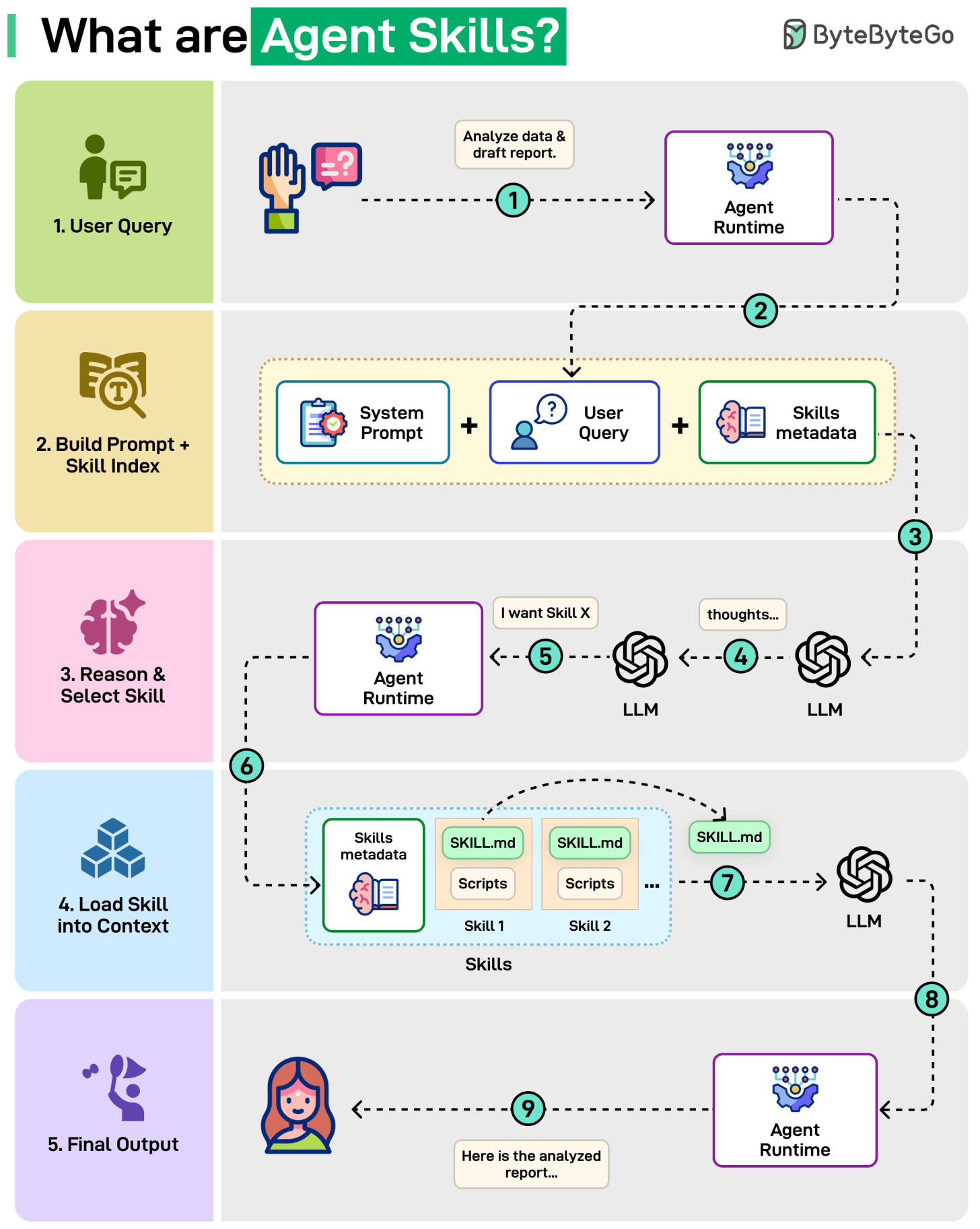

Agent Skills, Clearly Explained

Why do we need Agent Skills? Long prompts hurt agent performance. Instead of one massive prompt, agents keep a small catalog of skills, reusable playbooks with clear instructions, loaded only when needed.

Here is what the Agent Skills workflow looks like:

User Query: A user submits a request like “Analyze data & draft report”.

Build Prompt + Skills Index: The agent runtime combines the query with Skills metadata, a lightweight list of available skills and their short descriptions.

Reason & Select Skill: The LLM processes the prompt, thinks, and decides: "I want Skill X."

Load Skill into Context: The agent runtime receives the specific skill request from the LLM. Then, it loads SKILL.md and adds it into the LLM's active context.

Final Output: The LLM follows SKILL.md, runs scripts, and generates the final report.

By dynamically loading skills only when needed, Agent Skills keep context small and the LLM’s behavior consistent.
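The workflow above can be sketched as follows. The skill names and contents are hypothetical, and a dictionary stands in for the SKILL.md files a runtime would read from disk. The step where the LLM picks a skill is hard-coded here.

```python
from typing import Dict

# Hypothetical skills: the short descriptions are always in context, the full bodies are not.
SKILL_INDEX: Dict[str, str] = {
    "data-analysis": "Analyze tabular data and summarize findings",
    "report-writing": "Draft a structured report from notes",
}
SKILL_BODIES: Dict[str, str] = {  # stands in for each skill's SKILL.md
    "data-analysis": "1. Load the data. 2. Compute summary stats. 3. List anomalies.",
    "report-writing": "1. Outline. 2. Draft sections. 3. Add an executive summary.",
}


def build_prompt(query: str) -> str:
    # Query plus the lightweight skills index only.
    index = "\n".join(f"- {name}: {desc}" for name, desc in SKILL_INDEX.items())
    return f"Available skills:\n{index}\n\nUser: {query}"


def load_skill(context: str, skill_name: str) -> str:
    # Called by the runtime only after the model asks for a specific skill.
    return f"{context}\n\n[SKILL.md: {skill_name}]\n{SKILL_BODIES[skill_name]}"


prompt = build_prompt("Analyze data & draft report")
context = load_skill(prompt, "data-analysis")  # the model's choice is hard-coded here
```

The initial prompt carries two one-line descriptions. The full instructions for a skill enter the context only once that skill is requested, and the unrequested skill's body never does.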

Over to you: What skills would you find most useful in agents?

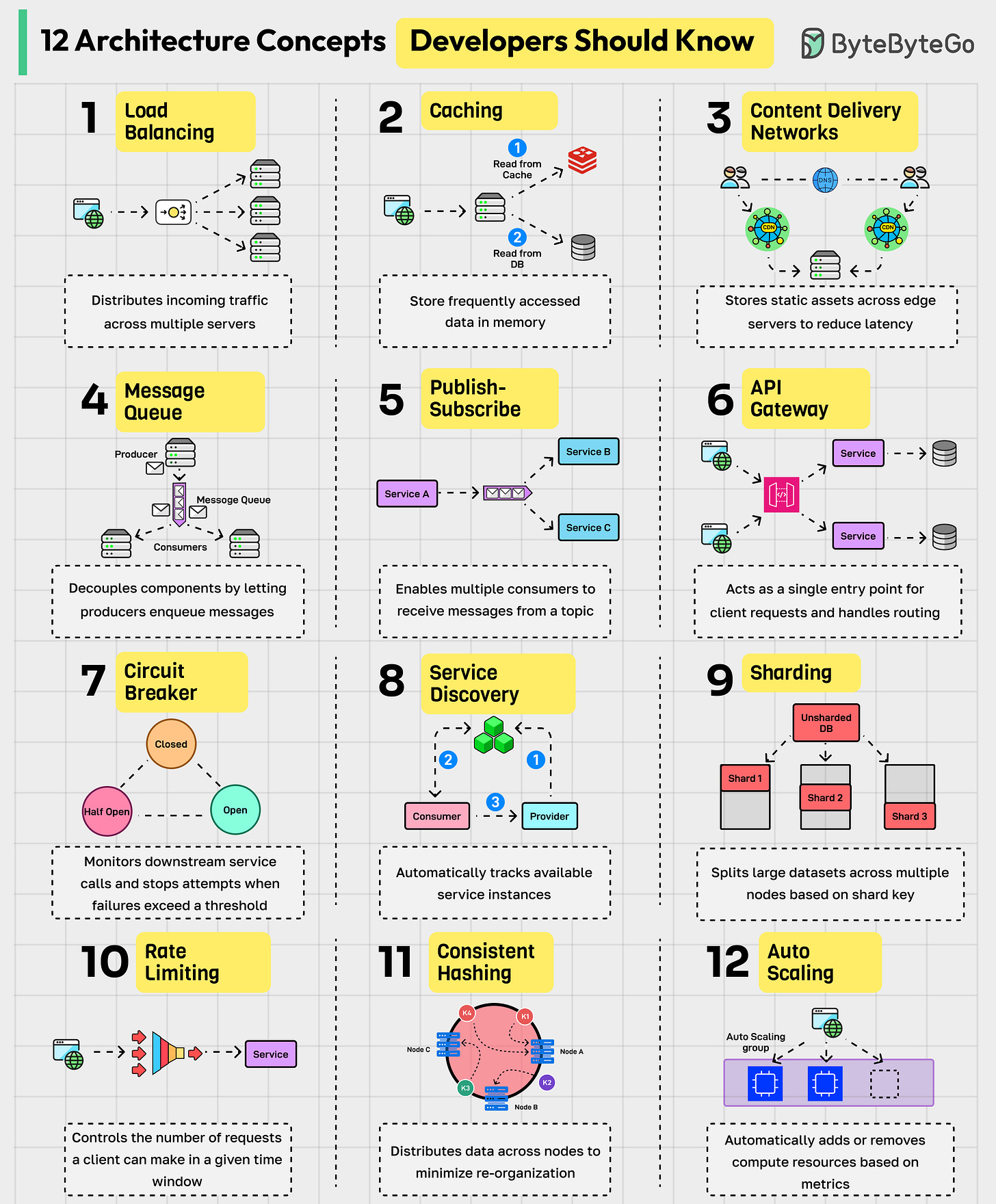

12 Architectural Concepts Developers Should Know

Load Balancing: Distributes incoming traffic across multiple servers to ensure no single node is overwhelmed.

Caching: Stores frequently accessed data in memory to reduce latency.

Content Delivery Network (CDN): Stores static assets across geographically distributed edge servers so users download content from the nearest location.

Message Queue: Decouples components by letting producers enqueue messages that consumers process asynchronously.

Publish-Subscribe: Enables multiple consumers to receive messages from a topic.

API Gateway: Acts as a single entry point for client requests, handling routing, authentication, rate limiting, and protocol translation.

Circuit Breaker: Monitors downstream service calls and stops attempts when failures exceed a threshold.

Service Discovery: Automatically tracks available service instances so components can locate and communicate with each other dynamically.

Sharding: Splits large datasets across multiple nodes based on a specific shard key.

Rate Limiting: Controls the number of requests a client can make in a given time window to protect services from overload.

Consistent Hashing: Distributes data across nodes in a way that minimizes reorganization when nodes join or leave.

Auto Scaling: Automatically adds or removes compute resources based on defined metrics.
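Of the concepts above, consistent hashing is the one that benefits most from seeing code. Below is a minimal hash ring sketch. The class name `HashRing`, the use of SHA-256, and the default of 100 virtual nodes per server are illustrative choices, not a reference implementation.

```python
import bisect
import hashlib
from typing import Dict, List


def _hash(key: str) -> int:
    return int(hashlib.sha256(key.encode()).hexdigest(), 16)


class HashRing:
    def __init__(self, nodes: List[str], vnodes: int = 100) -> None:
        self._vnodes = vnodes
        self._ring: Dict[int, str] = {}   # point on the ring -> owning node
        self._points: List[int] = []      # sorted ring positions
        for node in nodes:
            self.add(node)

    def add(self, node: str) -> None:
        # Each node owns several points so load spreads more evenly.
        for i in range(self._vnodes):
            point = _hash(f"{node}#{i}")
            self._ring[point] = node
            bisect.insort(self._points, point)

    def remove(self, node: str) -> None:
        self._points = [p for p in self._points if self._ring[p] != node]
        self._ring = {p: n for p, n in self._ring.items() if n != node}

    def get(self, key: str) -> str:
        # Walk clockwise to the first point at or after the key's hash, wrapping around.
        idx = bisect.bisect_left(self._points, _hash(key)) % len(self._points)
        return self._ring[self._points[idx]]
```

When a node is removed, only the keys that node owned move to a new owner. Every other key keeps its placement, which is the "minimizes reorganization" property.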

Over to you: Which architectural concept will you add to the list?

How to Deploy Services

Deploying or upgrading services is risky. In this post, we explore risk mitigation strategies.

The diagram below illustrates the common ones.

Multi-Service Deployment

In this model, we deploy new changes to multiple services simultaneously. This approach is easy to implement. But since all the services are upgraded at the same time, it is hard to manage and test dependencies. It’s also hard to roll back safely.

Blue-Green Deployment

With blue-green deployment, we have two identical environments: one is staging (blue) and the other is production (green). The staging environment is one version ahead of production. Once testing is done in the staging environment, user traffic is switched to it, and staging becomes production. Rollback is simple with this strategy, but maintaining two identical production-quality environments can be expensive.

Canary Deployment

A canary deployment upgrades services gradually, each time to a subset of users. It is cheaper than blue-green deployment and easy to roll back. However, since there is no staging environment, we have to test in production. This process is more complicated because we need to monitor the canary while gradually migrating more and more users away from the old version.
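One common way to pick the canary subset is to hash each user into a stable bucket and compare it against a rollout percentage. This is a sketch of that idea, not a description of any particular platform; `bucket` and `pick_version` are hypothetical names.

```python
import hashlib


def bucket(user_id: str) -> int:
    # Stable bucket in [0, 100): the same user always lands in the same bucket.
    digest = hashlib.sha256(user_id.encode()).hexdigest()
    return int(digest, 16) % 100


def pick_version(user_id: str, canary_percent: int) -> str:
    # Users whose bucket falls below the threshold see the new version.
    return "canary" if bucket(user_id) < canary_percent else "stable"
```

Because buckets are stable, raising `canary_percent` from 10 to 50 only adds users to the canary and never bounces anyone back to the old version. Setting it to 0 is the rollback.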

A/B Test

In an A/B test, different versions of services run in production simultaneously. Each version runs an “experiment” for a subset of users. A/B testing is a cheap way to test new features in production. We need to control the deployment process in case some features are pushed to users by accident.

Over to you - Which deployment strategy have you used? Did you witness any deployment-related outages in production and why did they happen?

The ChatGPT routing strategy (GPT-5-main vs GPT-5-thinking) mirrors what I learned the hard way with my automation agent. Started using Opus for everything. Burned through API limits. Switched to Haiku by default. Quality actually improved because simpler tasks got clearer instructions instead of over-engineered prompts. Now I route based on task complexity: Haiku for email/scraping, Sonnet for content, Opus when multi-step reasoning required. The classifier layer matters more than the model itself. Cost dropped 70%, no quality loss. https://thoughts.jock.pl/p/claude-model-optimization-opus-haiku-ai-agent-costs-2026

This is a super clean mental model: MCP = tool interface (plumbing), RAG = knowledge injection (memory), Agents = decision loop (manager). They’re not rivals, they sit at different layers of the stack.

The next real battleground is trust + security: once MCP connects models to real systems (files, Slack, GitHub, internal APIs), the cool demo becomes an attack surface, so teams will need least-privilege tool access, audit logs, and safety checks between agents and external MCP servers.