How Grab’s Migration from Go to Rust Cut Costs by 70%

Disclaimer: The details in this post have been derived from the official documentation shared online by the Grab Engineering Team. All credit for the technical details goes to the Grab Engineering Team. The links to the original articles and sources are present in the references section at the end of the post. We’ve attempted to analyze the details and provide our input about them. If you find any inaccuracies or omissions, please leave a comment, and we will do our best to fix them.

Grab has long relied on Golang for most of its microservices.

The language was chosen for its simplicity and the speed at which developers can build and deploy new features. Over the years, this approach has served the company well as it scaled across multiple business lines.

At the same time, however, Rust has gradually found its place within Grab. It first appeared in small command-line tools and later proved itself in larger systems such as Catwalk, a Rust-based reverse proxy for serving machine learning models. These early successes created momentum for exploring whether Rust could be applied to other production systems.

The next step was to test if rewriting an existing Golang service in Rust could deliver real business benefits.

Rust is often praised for its strong memory safety, lack of garbage collection, and performance close to C. It also avoids common errors like null pointer issues. However, rewriting an existing system is never a straightforward decision. Important context from the old code can be lost, hidden edge cases can return, and projects can take much longer than expected.

To approach this decision carefully, the Grab Engineering Team set clear criteria for selecting a service to rewrite. The chosen system needed to be simple enough in its functionality to avoid unnecessary complexity. It also had to serve large amounts of traffic so that any efficiency gains would be meaningful. Finally, the team needed to be confident that engineers were willing and able to learn Rust to support the system for the long term.

In this article, we look at how Grab migrated their counter service from Go to Rust, resulting in a 70% cost reduction.

Why Counter Service?

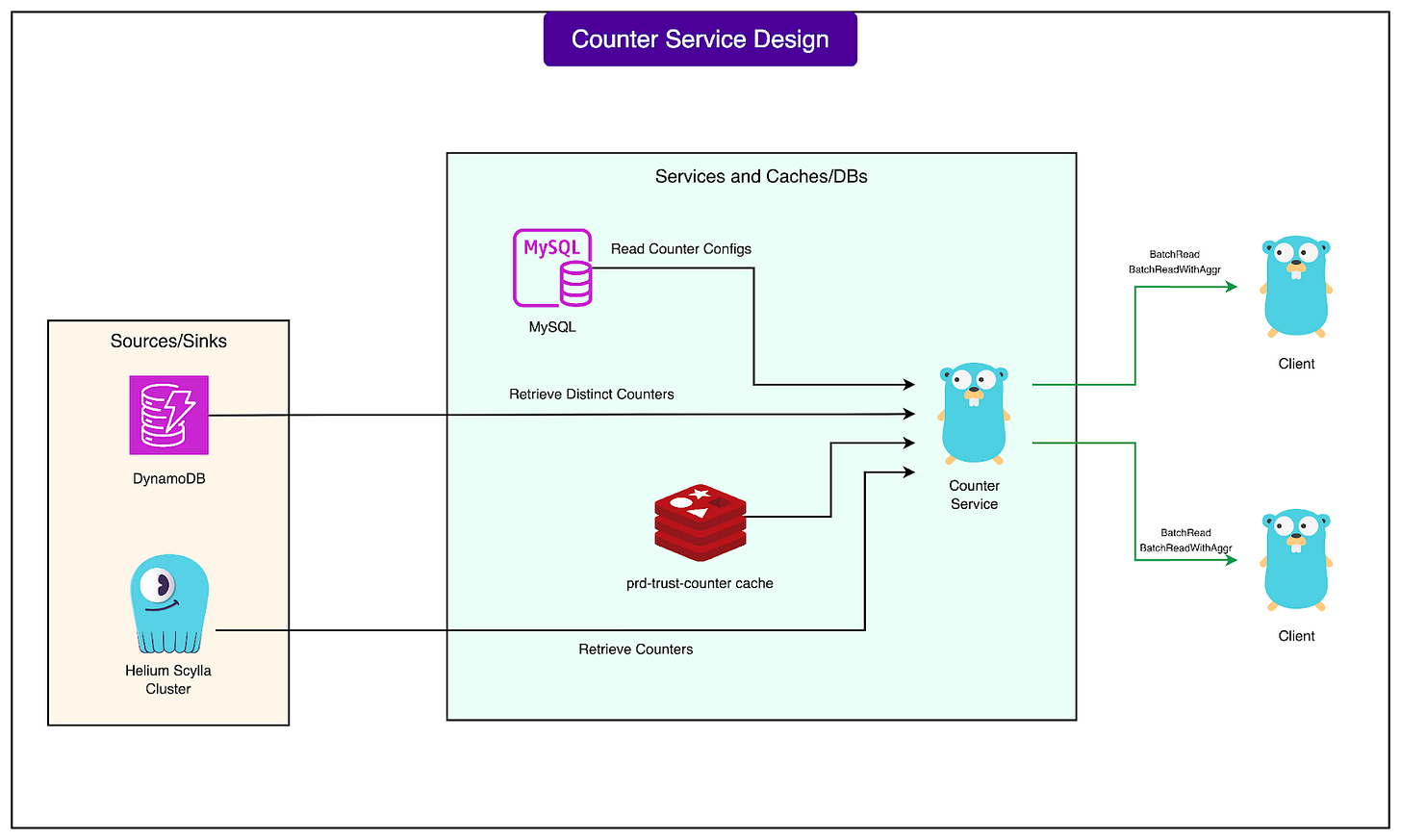

The service chosen for the rewrite was Counter Service. It is a core part of Grab’s fraud detection and machine learning systems. Its job is to keep track of events and make those counts available to other services that rely on them.

Counter Service has two main responsibilities:

The first is stream ingestion. This means it continuously reads events as they happen, adds them up, and stores the results in a database called Scylla.

The second responsibility is exposing gRPC endpoints. These are network interfaces that other services can call to quickly ask questions such as “how many times has this event happened for a given user?”

To answer these questions, Counter Service retrieves data from Scylla and sometimes from other databases such as Redis, MySQL, or DynamoDB.

See the diagram at the start of this section.

While Counter Service handles very high traffic, reaching tens of thousands of requests every second at peak times, the work it performs is straightforward. It counts, stores, and returns numbers.
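To make "counts, stores, and returns numbers" concrete, here is a minimal in-memory sketch of the two paths described above. It is only an illustration: the `CounterStore` type, its methods, and the `(user, event)` key are hypothetical names, and a `HashMap` stands in for Scylla.

```rust
use std::collections::HashMap;

/// Hypothetical in-memory stand-in for the counter storage.
/// The real service persists counts in Scylla; a HashMap is used here
/// only to illustrate the ingest and query paths.
#[derive(Default)]
struct CounterStore {
    // Assumed key shape: (user id, event name).
    counts: HashMap<(String, String), u64>,
}

impl CounterStore {
    /// Ingestion path: fold one incoming event into the running total.
    fn ingest(&mut self, user: &str, event: &str) {
        let key = (user.to_string(), event.to_string());
        *self.counts.entry(key).or_insert(0) += 1;
    }

    /// Query path: how many times has `event` happened for `user`?
    /// Unknown keys report zero rather than an error.
    fn count(&self, user: &str, event: &str) -> u64 {
        let key = (user.to_string(), event.to_string());
        self.counts.get(&key).copied().unwrap_or(0)
    }
}
```

Calling `ingest("u1", "login")` twice and then `count("u1", "login")` returns 2, while a key that was never ingested returns 0.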

This combination of high traffic and simple functionality made it a strong candidate for the Rust rewrite. The simplicity reduced the risk of reintroducing hidden bugs, and the high traffic meant that even small efficiency improvements would bring significant savings.

Rewrite Approach and Library Choices

When the Grab Engineering Team decided to move Counter Service from Golang to Rust, they avoided doing a simple line-by-line conversion.

Tools like C2Rust, which transpiles C code into Rust, show that automatic translation is possible, but the team knew that code written in an idiomatic Golang style would not necessarily translate well into idiomatic Rust.

Instead, they treated the existing service as a black box. This meant they focused only on the inputs and outputs defined by the service’s gRPC interfaces. If another system sent the same request to both the old and new versions, the responses should be identical. With this contract in mind, the engineers rewrote the logic in Rust completely from scratch, aiming to match the original functionality without being constrained by Golang’s design.
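One way to picture this contract is a parity check that replays the same requests against both implementations and reports any that disagree. The sketch below is an assumption about how such a check could look, not Grab's actual test harness: `CountRequest`, `CountResponse`, `CounterApi`, and `Stub` are hypothetical names, and a plain trait stands in for the gRPC interface.

```rust
/// Hypothetical request and response shapes standing in for the gRPC messages.
#[derive(Debug, Clone, PartialEq)]
struct CountRequest {
    user_id: String,
    event: String,
}

#[derive(Debug, Clone, PartialEq)]
struct CountResponse {
    count: u64,
}

/// The black-box contract: anything that can answer a count request.
trait CounterApi {
    fn get_count(&self, req: &CountRequest) -> CountResponse;
}

/// Replay every request against both implementations and collect the
/// requests whose responses differ. An empty result means parity.
fn find_mismatches<'a>(
    old: &dyn CounterApi,
    new: &dyn CounterApi,
    requests: &'a [CountRequest],
) -> Vec<&'a CountRequest> {
    requests
        .iter()
        .filter(|req| old.get_count(req) != new.get_count(req))
        .collect()
}

/// Illustration-only implementation: derives a count from the request so
/// that two stubs with different offsets disagree on every request.
struct Stub {
    offset: u64,
}

impl CounterApi for Stub {
    fn get_count(&self, req: &CountRequest) -> CountResponse {
        CountResponse {
            count: req.user_id.len() as u64 + self.offset,
        }
    }
}
```

Because the check only looks at requests and responses, it says nothing about how either side is implemented, which is the point of treating the old service as a black box.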

A major step in this process was checking if Rust had the right open-source libraries to replace the tools and integrations used in the old service. Each dependency was evaluated for maturity, popularity, and active support.

Here are the main dependencies:

Datadog StatsD client: The Grab engineering team chose Cadence. It had fewer than 500 GitHub stars, which suggested a smaller user base, but the API was intuitive and covered all the required features.

OpenTelemetry: They used opentelemetry-rust, which had more than 1,000 stars and solid community backing.

gRPC: The tonic library was selected, a well-known Rust implementation of gRPC with active use and more than 500 stars.

Web server: actix-web was picked. It is one of the most popular Rust frameworks, with more than 20,000 stars.

Redis: Initially, the team tried redis-rs, the “official” Redis client with over 3,000 stars. However, it did not meet the needs of the project’s asynchronous design. They switched to fred.rs, which had over 5,000 stars and better async support.

Scylla: They used the scylla-rust-driver. Although it had only around 500 stars, it was officially maintained by the Scylla project, giving confidence in its long-term support.

Kafka: The kafka-rust client, with over 1,000 stars, was adopted to handle messaging needs.

One concern the team faced was the popularity and stability of libraries.

In open source, the number of GitHub stars can reflect how widely a project is used and how much community support it may receive. Less popular libraries carry a higher risk of being abandoned or poorly maintained.

However, the team made exceptions when the library was officially backed by the creators of the underlying technology, as in the case of the Scylla driver.

By carefully choosing libraries and rewriting logic based on clear contracts, the Grab Engineering Team built a Rust version of Counter Service that matched the old system’s functionality while taking advantage of Rust’s efficiency and safety.

Another challenge the Grab Engineering Team faced during the rewrite was that most of the company’s internal libraries were written in Go. These libraries had been built up over the years and were heavily used across different microservices. By moving to Rust, the team could no longer rely on them directly.

A clear example was the internal configuration system. In the Go-based services, this system used Go Templates to manage settings for different environments, such as staging and production. It also had wrappers to handle sensitive information, like pulling and injecting secrets securely.

Since this library was not available in Rust, the engineers had to build a replacement. To do this, they turned to a Rust tool called Nom, which is a framework for writing parsers. A parser is a program that reads structured text and turns it into data that the system can use. Nom is powerful and fast, but it comes with a steep learning curve.

Despite the complexity, the team successfully built a Rust-based equivalent of the configuration system. With Nom, they could parse templates and render configuration files in a way that worked similarly to the Go version. This allowed the new service to handle configuration and secrets properly without depending on the old Go libraries.
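The parse-then-render idea can be sketched with the standard library alone. Note the swap: this example deliberately does not use Nom, and it handles only a simple `{{ .Name }}` placeholder rather than the full Go Templates syntax. The `Segment` type and the `parse_template` and `render` functions are hypothetical names for illustration, not Grab's implementation.

```rust
use std::collections::HashMap;

/// A parsed template is a sequence of literal text and variable references.
#[derive(Debug, PartialEq)]
enum Segment {
    Literal(String),
    Var(String),
}

/// Split the input into segments around `{{ ... }}` placeholders.
/// Only the simple `{{ .Name }}` form is supported in this sketch.
fn parse_template(input: &str) -> Result<Vec<Segment>, String> {
    let mut segments = Vec::new();
    let mut rest = input;
    while let Some(start) = rest.find("{{") {
        if start > 0 {
            segments.push(Segment::Literal(rest[..start].to_string()));
        }
        let after_open = &rest[start + 2..];
        let end = after_open
            .find("}}")
            .ok_or_else(|| "unclosed `{{`".to_string())?;
        // Drop surrounding whitespace and the leading dot of `.Name`.
        let name = after_open[..end].trim().trim_start_matches('.');
        if name.is_empty() {
            return Err("empty placeholder".to_string());
        }
        segments.push(Segment::Var(name.to_string()));
        rest = &after_open[end + 2..];
    }
    if !rest.is_empty() {
        segments.push(Segment::Literal(rest.to_string()));
    }
    Ok(segments)
}

/// Substitute each variable with its value; a missing value is an error
/// so that a misconfigured environment fails loudly.
fn render(segments: &[Segment], vars: &HashMap<String, String>) -> Result<String, String> {
    let mut out = String::new();
    for segment in segments {
        match segment {
            Segment::Literal(text) => out.push_str(text),
            Segment::Var(name) => {
                let value = vars
                    .get(name)
                    .ok_or_else(|| format!("missing value for `{name}`"))?;
                out.push_str(value);
            }
        }
    }
    Ok(out)
}
```

Separating parsing from rendering means one parsed template can be rendered once per environment, with staging and production supplying different variable maps. A parser-combinator library such as Nom would replace the hand-written `find` calls in `parse_template`.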

Technical Challenges

While rewriting Counter Service in Rust, the Grab engineering team ran into a few technical challenges. These were not unexpected, since Rust works very differently from Go in some important areas.

Borrow Checker

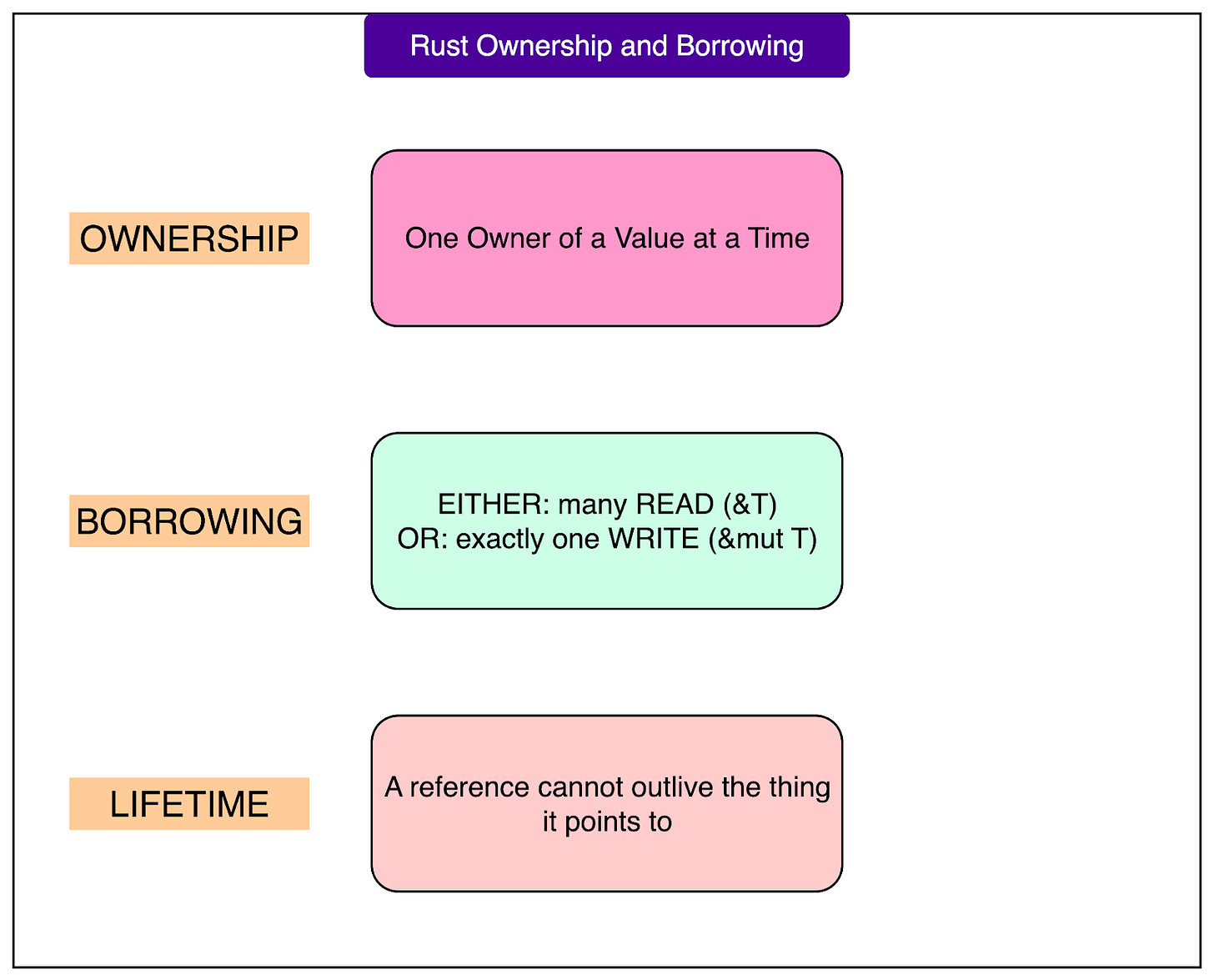

One of Rust’s defining features is the borrow checker, which enforces strict rules about how memory is accessed.

In simple terms, Rust does not allow two parts of a program to change the same piece of data at the same time unless it is done in a safe way. This prevents entire categories of bugs, but it also means developers have to carefully think about how data is passed around.

A common difficulty for beginners is dealing with lifetimes, which describe how long a reference to a piece of data remains valid. At first, this can feel confusing and restrictive. To move faster in the early stages, the team often cloned data or wrapped it in an Arc (an atomically reference-counted pointer) to share ownership safely. While this is less efficient, it allowed them to make progress without getting stuck. Later, they could return to the code and optimize it. Tools such as Flamegraph helped by showing where time was being spent on unnecessary allocations.
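The two escape hatches mentioned above can be sketched as follows. This is a generic illustration, not code from Counter Service: the `Config` type and both function names are made up. Each worker thread needs data that outlives the caller's borrow, so the data is either deep-copied with `clone()` or shared through an `Arc`.

```rust
use std::sync::Arc;
use std::thread;

/// Hypothetical piece of shared state.
#[derive(Debug, Clone)]
struct Config {
    key_prefix: String,
}

/// Option 1: give every worker its own deep copy.
/// Simple, but each clone allocates a new String.
fn spawn_with_clone(cfg: &Config, workers: usize) -> Vec<String> {
    let handles: Vec<_> = (0..workers)
        .map(|i| {
            let cfg = cfg.clone(); // full copy of the data
            thread::spawn(move || format!("{}:{}", cfg.key_prefix, i))
        })
        .collect();
    handles
        .into_iter()
        .map(|h| h.join().expect("worker panicked"))
        .collect()
}

/// Option 2: share one allocation behind an Arc.
/// Arc::clone only bumps an atomic reference count.
fn spawn_with_arc(cfg: &Arc<Config>, workers: usize) -> Vec<String> {
    let handles: Vec<_> = (0..workers)
        .map(|i| {
            let cfg = Arc::clone(cfg); // cheap: no copy of the String
            thread::spawn(move || format!("{}:{}", cfg.key_prefix, i))
        })
        .collect();
    handles
        .into_iter()
        .map(|h| h.join().expect("worker panicked"))
        .collect()
}
```

Both versions satisfy the borrow checker without any lifetime annotations. The `Arc` version avoids copying the underlying data, which is the kind of saving a later optimization pass can pick up once profiling shows the clones matter.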

Async Concurrency

Another major difference was in how the two languages handle concurrency, or running multiple tasks at the same time.

In Go, concurrency is based on Goroutines, which are managed by the Go runtime. Developers can simply add the go keyword in front of a function call, and it runs concurrently with the rest of the program. The runtime takes care of scheduling these tasks automatically, which makes it straightforward to use.

In Rust, concurrency is based on async/await, a form of cooperative scheduling. Each asynchronous function has to be explicitly marked as async, and a task only gives control back to the runtime at the points where it uses await. This means developers need to think more carefully about which code is blocking and which code can run in the background.

This difference led to some mistakes early in the rewrite. For example, the team accidentally used a blocking Redis call inside async code. Because a blocked task never reaches an await point, it holds up every other task on the same thread, and the service performed poorly until the team switched to a fully non-blocking, asynchronous Redis client.
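The effect of code that never yields can be shown with a toy single-threaded executor built only from the standard library. Everything here is a teaching sketch under stated assumptions: `run_all`, `YieldNow`, `cooperative`, `hogging`, and `interleaving` are made-up names, and a production runtime such as the one Grab used is far more sophisticated. The `hogging` task stands in for a blocking call because it never reaches an await point.

```rust
use std::cell::RefCell;
use std::future::Future;
use std::pin::Pin;
use std::rc::Rc;
use std::sync::Arc;
use std::task::{Context, Poll, Wake, Waker};

/// Waker that does nothing; the toy executor simply re-polls every task.
struct NoopWaker;
impl Wake for NoopWaker {
    fn wake(self: Arc<Self>) {}
}

/// Future that returns Pending once, handing control back to the executor.
struct YieldNow(bool);
impl Future for YieldNow {
    type Output = ();
    fn poll(mut self: Pin<&mut Self>, _cx: &mut Context<'_>) -> Poll<()> {
        if self.0 {
            Poll::Ready(())
        } else {
            self.0 = true;
            Poll::Pending
        }
    }
}

type Log = Rc<RefCell<Vec<String>>>;
type Task = Pin<Box<dyn Future<Output = ()>>>;

/// Well-behaved task: awaits between steps so other tasks can run.
async fn cooperative(name: &'static str, steps: u32, log: Log) {
    for i in 0..steps {
        log.borrow_mut().push(format!("{name}{i}"));
        YieldNow(false).await;
    }
}

/// Stand-in for blocking code: never awaits, so it cannot be interrupted.
async fn hogging(name: &'static str, steps: u32, log: Log) {
    for i in 0..steps {
        log.borrow_mut().push(format!("{name}{i}"));
    }
}

/// Round-robin executor: poll each task in turn until all have finished.
fn run_all(mut tasks: Vec<Task>) {
    let waker = Waker::from(Arc::new(NoopWaker));
    let mut cx = Context::from_waker(&waker);
    while !tasks.is_empty() {
        tasks.retain_mut(|task| task.as_mut().poll(&mut cx).is_pending());
    }
}

/// Run task "a" (hogging or cooperative) next to a cooperative task "b"
/// and return the order in which their steps executed.
fn interleaving(first_hogs: bool) -> Vec<String> {
    let log: Log = Rc::new(RefCell::new(Vec::new()));
    let first: Task = if first_hogs {
        Box::pin(hogging("a", 3, log.clone()))
    } else {
        Box::pin(cooperative("a", 3, log.clone()))
    };
    let second: Task = Box::pin(cooperative("b", 3, log.clone()));
    run_all(vec![first, second]);
    let order = log.borrow().clone();
    order
}
```

With two cooperative tasks, `interleaving(false)` yields `a0, b0, a1, b1, a2, b2`. With a hogging first task, `interleaving(true)` yields `a0, a1, a2, b0, b1, b2`: task `b` cannot start until `a` has finished. A blocking network call inside an async function has the same effect on every other task sharing that executor thread.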

Conclusion

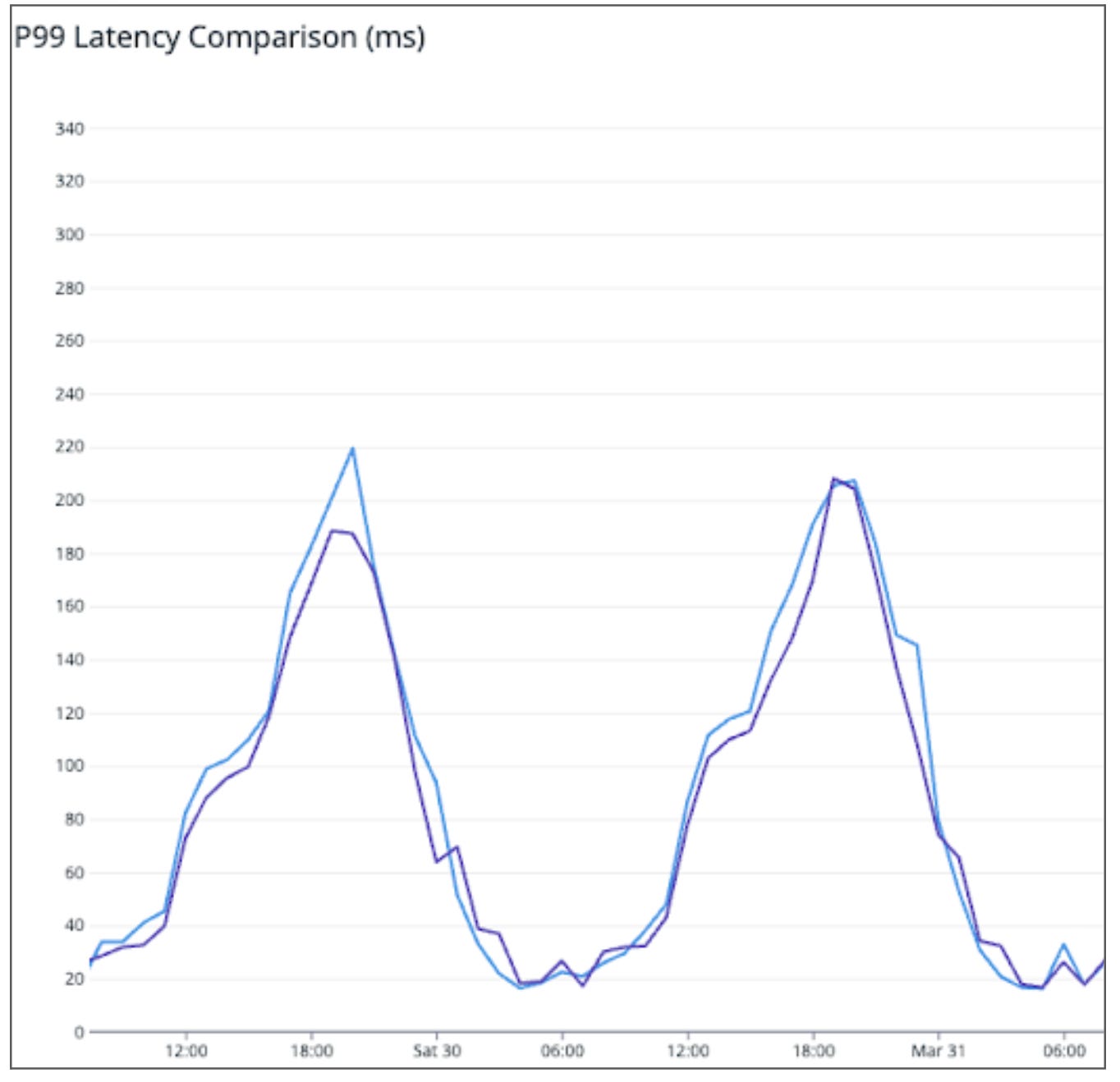

After completing the rewrite, the Grab engineering team compared the new Rust version of Counter Service with the original Go version. The findings were a mix of surprises and confirmations.

In terms of latency, the two services performed almost the same. At the 99th percentile (P99), the latency that 99 percent of requests stay under and so a measure of the slowest requests, Rust was sometimes even a little slower than Go. This showed that switching to Rust did not automatically make the service faster.

The real benefit came from resource efficiency. To handle 1,000 requests per second (QPS):

The Go service needed about 20 CPU cores.

The Rust service needed only about 4.5 cores.

This translated into roughly 4.4 times better CPU efficiency (20 / 4.5 ≈ 4.4) and, as the team reported, about 70 percent lower infrastructure cost for the same workload. From this, the team drew a few key lessons:

Rust is not always faster than Go. Go is already fast enough for most high-performance services, so Rust will not necessarily reduce latency.

Rust is more efficient. Thanks to its design, Rust uses less computing power to achieve the same results. This makes it especially valuable for services with heavy traffic where cost savings add up quickly.

Rust’s learning curve depends on context. Writing synchronous code in Rust is manageable for most experienced developers, especially with the help of the compiler and the Clippy linter. The real challenge comes with asynchronous code, which requires a deeper understanding of concurrency.
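The core counts reported above can be turned into ratios with a few lines of arithmetic. The function names below are illustrative. The calculation covers CPU cores only, which is an assumption: the source does not break down how the roughly 70 percent cost figure was derived, so the two numbers need not match exactly.

```rust
/// How many times fewer cores the Rust service needs for the same load.
fn efficiency_ratio(go_cores: f64, rust_cores: f64) -> f64 {
    go_cores / rust_cores
}

/// Percentage reduction in CPU cores when moving from Go to Rust.
/// This is a cores-only figure, not the reported infrastructure cost.
fn core_reduction_percent(go_cores: f64, rust_cores: f64) -> f64 {
    (1.0 - rust_cores / go_cores) * 100.0
}
```

With the reported figures of 20 and 4.5 cores per 1,000 QPS, the ratio is about 4.4 and the core reduction is 77.5 percent, in the same range as the roughly 70 percent cost saving the team cites.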

Overall, the rewrite showed that while Rust may not always deliver raw speed improvements over Go, it can deliver significant efficiency gains. For high-traffic services, this can make the effort worthwhile, provided the team is ready to invest in learning and maintaining Rust.

References:
