How Netflix Runs on Java

Disclaimer: The details in this post have been derived from the articles/videos shared online by the Netflix Engineering Team. All credit for the technical details goes to the Netflix Engineering Team. The links to the original articles and videos are present in the references section at the end of the post. We’ve attempted to analyze the details and provide our input about them. If you find any inaccuracies or omissions, please leave a comment, and we will do our best to fix them.

Netflix is a masterclass in backend engineering at scale. Behind the seamless playback, tailored recommendations, and cross-device consistency lies an intricate architecture powered by Java.

The majority of Netflix’s backend services run on Java. This might surprise engineers who’ve watched the rise of Kotlin, Go, Rust, and reactive frameworks. But Netflix isn’t sticking with Java out of inertia. Java has matured, and so has the ecosystem around it. Modern JVMs offer powerful garbage collectors. Spring Boot has become both extensible and reliable. And with the arrival of virtual threads and structured concurrency, Java is reclaiming its place in high-throughput, low-latency system design, without the overhead of reactive complexity.

In this article, we’ll walk through how Netflix uses Java today. We will also cover the following topics:

The architectural backbone of Netflix: a federated GraphQL platform connecting client apps to dozens of Java backend services.

The concurrency model: how Java virtual threads and modern garbage collectors change performance and reliability.

The evolution: a company-wide migration off technical debt and onto Spring Boot, JDK 21+, and beyond.

Backend Architecture with the GraphQL Foundation

At the heart of Netflix’s backend lies a federated GraphQL architecture. This is the primary abstraction through which all client applications interact with backend data. The model provides both flexibility and insulation: clients can express exactly what they need, and backend teams can evolve their services independently.

Every GraphQL query from a Netflix client, whether from a smart TV, phone, or browser, lands at a centralized API Gateway. This gateway parses the query, decomposes it into subqueries, and routes those to the appropriate backend services.

Each backend team owns a Domain Graph Service (DGS), which implements a slice of the overall GraphQL schema. Every DGS at Netflix is a Spring Boot application.

The DGS framework itself is built as an extension of Spring Boot. In practice, this means:

Dependency injection, configuration, and lifecycle management are handled by Spring Boot.

GraphQL resolvers are just annotated Spring components.

Observability, security, retry logic, and service mesh integration are implemented using Spring’s mechanisms.

Netflix picked Spring Boot because it’s proven at scale and has a long track record at Netflix. It’s also extensible, which lets Netflix layer in its own modules for security, metrics, service discovery, and more.

The DGSs register their schema fragments to a shared registry. The gateway then knows which service is responsible for which field. This turns one “monolithic” schema into a fully federated, independently deployable graph.
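To make the routing step concrete, here is a minimal sketch of how a gateway could use such a registry to split a query into per-service sub-queries. It is not Netflix's gateway. The `SchemaRegistry` class, the `Type.field` coordinates, and the service names are all hypothetical, and real federation must also resolve nested types and entity keys across services.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical sketch of federated query planning: the registry maps each
// "Type.field" coordinate to the DGS that owns it, and the gateway groups the
// fields of an incoming query by owning service.
final class SchemaRegistry {
    private final Map<String, String> ownerByField = new LinkedHashMap<>();

    // Each DGS registers the schema fields it implements; a field has one owner.
    void register(String service, String... fieldCoordinates) {
        for (String field : fieldCoordinates) {
            String previous = ownerByField.putIfAbsent(field, service);
            if (previous != null && !previous.equals(service)) {
                throw new IllegalStateException(field + " is already owned by " + previous);
            }
        }
    }

    // Splits the requested fields into one sub-query (field list) per owning service.
    Map<String, List<String>> plan(List<String> requestedFields) {
        Map<String, List<String>> subQueries = new TreeMap<>();
        for (String field : requestedFields) {
            String owner = ownerByField.get(field);
            if (owner == null) {
                throw new IllegalArgumentException("No service owns " + field);
            }
            subQueries.computeIfAbsent(owner, k -> new ArrayList<>()).add(field);
        }
        return subQueries;
    }

    public static void main(String[] args) {
        SchemaRegistry registry = new SchemaRegistry();
        registry.register("metadata-dgs", "Show.title", "Show.releaseYear");
        registry.register("artwork-dgs", "Show.artworkUrl");
        System.out.println(registry.plan(List.of("Show.title", "Show.artworkUrl")));
        // {artwork-dgs=[Show.artworkUrl], metadata-dgs=[Show.title]}
    }
}
```

Because ownership is recorded per field, a team can add fields to its slice of the graph by redeploying only its own DGS.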

This separation of schema ownership enables:

Independent deployability of services

Schema-driven collaboration between frontend and backend

Cleaner boundaries between domains (for example, recommendations vs. user profiles)

The key idea is that backend services own their part of the graph, not just their internal data.

Microservice Fan-out: What a Query Hits

Behind the scenes, even a simple query, like fetching titles and images for five shows, fans out across multiple services:

The API Gateway receives the request.

It contacts 2–3 DGSs to resolve fields like metadata, artwork, and availability.

Each DGS may then fan out again to fetch from data stores or call other services.

This fan-out pattern is essential for flexibility but introduces real complexity. The system needs aggressive timeouts, retry logic, and fallback strategies to prevent one slow service from cascading into user-visible latency.
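The timeout-and-fallback part of that defense can be sketched with the JDK alone. This is a minimal sketch with several assumptions: `simulatedCall` stands in for a real DGS or gRPC call, the 500 ms budget and fallback strings are made up, and retries are left out. Each call gets its own budget via `CompletableFuture.completeOnTimeout`, so one slow dependency degrades only its own field instead of delaying the whole response.

```java
import java.util.List;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.function.Supplier;

// Sketch: fan out to several simulated downstream services in parallel,
// bounding each call with a timeout and substituting a fallback value.
final class FanOut {
    // One virtual thread per call. Virtual threads are daemon threads,
    // so an abandoned slow call does not keep the JVM alive.
    private static final ExecutorService EXECUTOR = Executors.newVirtualThreadPerTaskExecutor();

    // Hypothetical downstream call that responds after `latencyMillis`.
    static String simulatedCall(String service, long latencyMillis) {
        try {
            Thread.sleep(latencyMillis);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return service + ":ok";
    }

    // If the call throws or takes longer than `timeoutMillis`, the caller gets
    // `fallback` instead. The slow call itself is not cancelled.
    static CompletableFuture<String> callWithFallback(Supplier<String> call,
                                                      long timeoutMillis, String fallback) {
        return CompletableFuture.supplyAsync(call, EXECUTOR)
                .completeOnTimeout(fallback, timeoutMillis, TimeUnit.MILLISECONDS)
                .exceptionally(error -> fallback);
    }

    static List<String> resolveShowPage() {
        List<CompletableFuture<String>> calls = List.of(
                callWithFallback(() -> simulatedCall("metadata", 10), 500, "metadata:fallback"),
                callWithFallback(() -> simulatedCall("artwork", 10), 500, "artwork:fallback"),
                // Too slow for its budget, so its fallback is returned after ~500 ms.
                callWithFallback(() -> simulatedCall("availability", 5_000), 500, "availability:fallback"));
        // All calls are already in flight; join() only collects the results.
        return calls.stream().map(CompletableFuture::join).toList();
    }

    public static void main(String[] args) {
        System.out.println(resolveShowPage()); // [metadata:ok, artwork:ok, availability:fallback]
    }
}
```

`completeOnTimeout` only stops the caller from waiting. The slow call keeps running in the background, so a production client would typically also cancel or otherwise bound the underlying request.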

Protocol Choices

Between the client and the gateway, Netflix sticks with HTTP and GraphQL over standard web protocols. This ensures compatibility across browsers, mobile apps, and smart TVs.

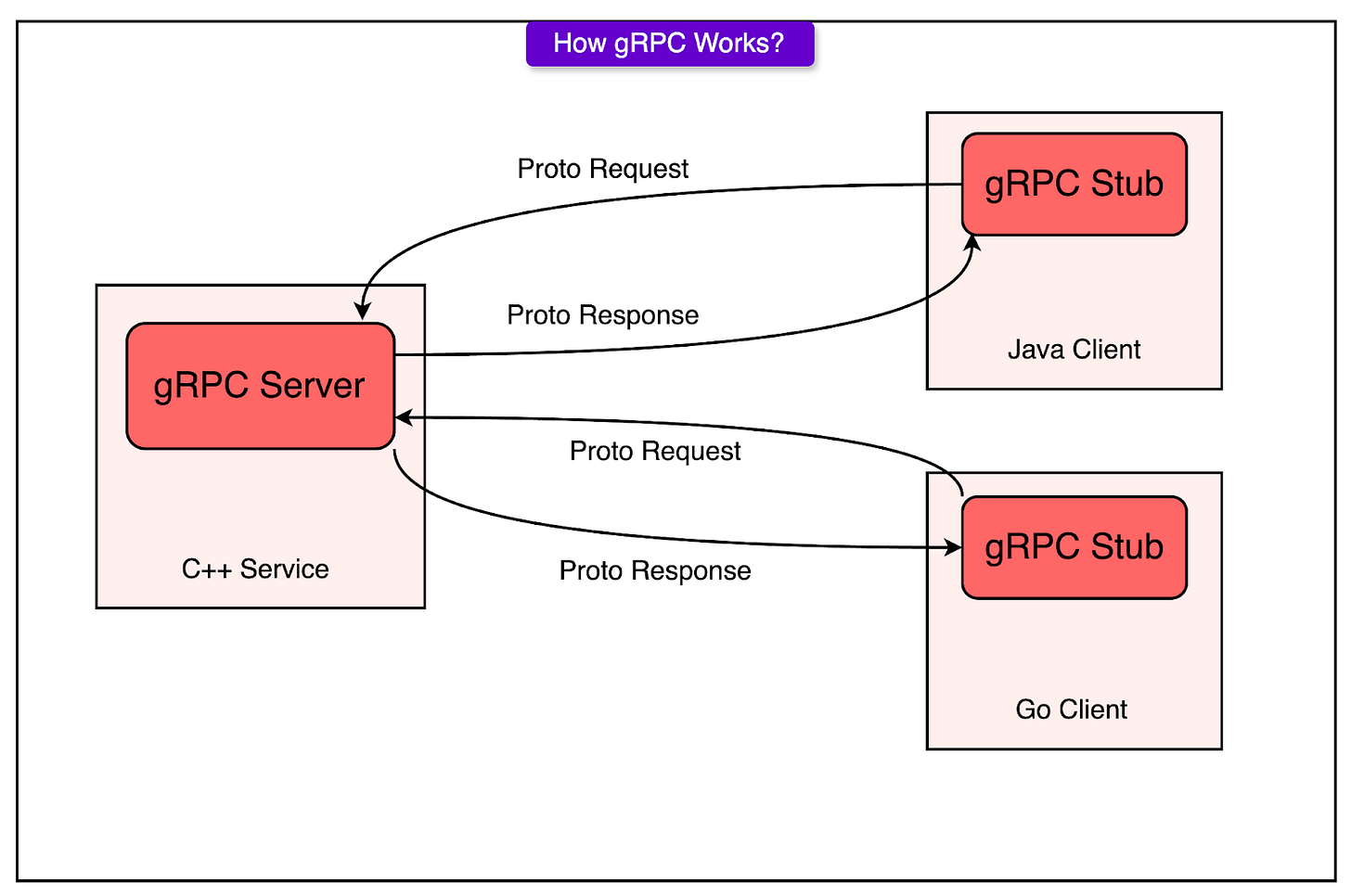

Inside the backend, services communicate over gRPC: a high-performance, binary protocol that supports efficient service-to-service calls. gRPC enables:

Low-latency communication

Strong typing via Protocol Buffers

Easy interface evolution

This separation makes sense: GraphQL is great for flexible, client-driven data fetching, while gRPC excels in internal RPC-style interactions.

JVM Evolution

Until recently, much of Netflix’s Java codebase was stuck on JDK 8. The problem wasn’t inertia but lock-in. A custom in-house application framework, built years earlier, had accumulated layers of unmaintained libraries and outdated APIs. These dependencies had tight couplings and compatibility issues that made upgrading anything beyond JDK 8 risky.

In this setup, service owners couldn’t move forward independently. Even when newer Java versions were technically available, the platform wasn’t ready. Teams had little incentive to upgrade because it required effort with no immediate benefit. The result was stalled progress across the board.

Breaking that cycle required a direct approach. Netflix patched the incompatible libraries itself, not by rewriting everything, but by forking them and making the minimal changes needed for JDK 17 compatibility. In practice, this was less daunting than it sounds, because only a small number of critical libraries required intervention.

In parallel, the company began migrating all Java services (around 3000) to Spring Boot. This wasn’t a simple lift-and-shift. They built automated tooling to transform code, configure services, and standardize deployment. While the effort was significant, the result is a unified platform that can evolve in step with the broader Java ecosystem.

Now, the baseline across most teams is Spring Boot on JDK 17 or newer. A few legacy services remain for backward compatibility, but they are the exception.

Once services moved to JDK 17, the benefits became obvious:

The G1 garbage collector, already in use, showed a significant improvement: roughly 20% less CPU time spent on GC without changing application code.

Fewer and shorter stop-the-world pauses led to fewer cascading timeouts in distributed systems.

Higher overall throughput and better CPU utilization became possible, especially for high RPS services.

Generational ZGC

The G1 garbage collector served Netflix well for years. It struck a balance between throughput and pause time, and most JVM-based services used it by default. But as traffic scaled and timeouts tightened, the cracks showed.

Under high concurrency, some services saw stop-the-world pauses lasting over a second, long enough to cause IPC timeouts and trigger retry logic across dependent services. These retries inflated traffic, introduced jitter, and obscured the root cause of failures. In clusters running at high CPU loads, G1's occasional latency spikes became an operational burden.

However, the introduction of generational ZGC changed the game.

ZGC had been available in prior Java versions, but it lacked a generational memory model. That limited its effectiveness for workloads where most allocations were short-lived, like Netflix’s streaming services.

In JDK 21, generational ZGC finally arrived. It brought a modern, low-pause garbage collector that also understood object lifetime. The effect was immediate:

Pause times dropped to near-zero, even under heavy load.

Services no longer timed out during GC pauses, which led to a visible reduction in error rates.

With fewer garbage collection stalls, fewer upstream requests failed, reducing cluster-wide pressure.

Clusters ran closer to CPU saturation without falling over. Headroom previously held in reserve for GC safety was now available for real workloads.

From an operator’s perspective, these improvements were significant. A one-line configuration change (switching from G1 to ZGC) translated into smoother behavior, fewer alerts, and more predictable scaling.
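The source does not list Netflix's exact flags. On a stock JDK the switch is a launcher option, shown in the comment below. As a hedged sketch, this small program reads the standard `GarbageCollectorMXBean`s to confirm which collector a service actually runs with after a rollout. The `GcCheck` class and its `family` helper are hypothetical.

```java
import java.lang.management.GarbageCollectorMXBean;
import java.lang.management.ManagementFactory;

// Sketch: report which collector the running JVM uses, e.g. to confirm a
// G1 -> ZGC switch. Launch flags (not set by this code):
//   JDK 21:  java -XX:+UseZGC -XX:+ZGenerational ...
//   JDK 23+: java -XX:+UseZGC ...   (generational mode is the default)
final class GcCheck {

    // Maps a GC MXBean name (e.g. "G1 Young Generation", "ZGC Minor Pauses")
    // to a collector family, based on the name prefix.
    static String family(String mxBeanName) {
        if (mxBeanName.startsWith("ZGC")) return "ZGC";
        if (mxBeanName.startsWith("G1")) return "G1";
        return "other";
    }

    public static void main(String[] args) {
        for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
            // Collection count and accumulated time reported by each bean so far.
            System.out.printf("%s (%s): %d collections, %d ms total%n",
                    gc.getName(), family(gc.getName()), gc.getCollectionCount(), gc.getCollectionTime());
        }
    }
}
```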

Use of Java Virtual Threads

In the traditional thread-per-request model, each request is handled on its own platform thread, which maps one-to-one to an OS thread.

For high-throughput systems, this leads to high thread counts, inflated memory usage, and scheduling overhead. Netflix faced exactly this situation, particularly in its GraphQL stack, where individual field resolvers might perform blocking I/O.

Parallelizing these resolver calls manually was possible, but painful. Developers had to reason about thread pools, chain CompletableFuture stages, and deal with the complexity of mixing blocking and non-blocking models. Most didn’t bother unless performance made it unavoidable.

With Java 21+, Netflix began rolling out virtual threads. These lightweight threads, scheduled by the JVM instead of the OS, allowed blocking code to scale without monopolizing resources. For services built on the DGS framework and Spring Boot, the integration was automatic. Resolvers could now run in parallel by default.

See the diagram below that shows the concept of Java virtual threads.

Take the common example of a GraphQL query that returns five shows, each requiring artwork data. Previously, the resolver fetching artwork URLs ran serially, adding latency across multiple calls. With virtual threads, those calls now execute in parallel, cutting total response time significantly, without changing the application code.
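Here is a minimal sketch of that artwork example. It assumes a hypothetical `fetchArtworkUrl` that blocks for about 100 ms, and the URLs are placeholders. Each lookup gets its own virtual thread, so the five blocking calls overlap instead of running back to back. The `maxInFlight` counter exists only to make that overlap observable. In Netflix's stack the DGS framework handles this wiring, so resolver code would not create an executor itself.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.atomic.AtomicInteger;

// Sketch: resolve artwork for several shows concurrently, one virtual thread
// per blocking call. fetchArtworkUrl is a hypothetical stand-in for a remote call.
final class ArtworkResolver {
    static final AtomicInteger inFlight = new AtomicInteger();
    static final AtomicInteger maxInFlight = new AtomicInteger();

    static String fetchArtworkUrl(String showId) {
        int now = inFlight.incrementAndGet();
        maxInFlight.accumulateAndGet(now, Math::max); // record peak concurrency
        try {
            Thread.sleep(100); // simulated network latency; parks the virtual thread
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        } finally {
            inFlight.decrementAndGet();
        }
        return "https://img.example.com/" + showId + ".jpg";
    }

    static List<String> resolveAll(List<String> showIds) {
        try (ExecutorService executor = Executors.newVirtualThreadPerTaskExecutor()) {
            List<Future<String>> futures = showIds.stream()
                    .map(id -> executor.submit(() -> fetchArtworkUrl(id)))
                    .toList();
            List<String> urls = new ArrayList<>();
            for (Future<String> future : futures) {
                urls.add(future.get()); // results keep the input order
            }
            return urls;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } catch (ExecutionException e) {
            throw new IllegalStateException(e.getCause());
        }
    }

    public static void main(String[] args) {
        long start = System.nanoTime();
        List<String> urls = resolveAll(List.of("s1", "s2", "s3", "s4", "s5"));
        long elapsedMs = (System.nanoTime() - start) / 1_000_000;
        System.out.println(urls.size() + " URLs in " + elapsedMs + " ms, max in flight: " + maxInFlight.get());
    }
}
```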

Netflix wired virtual thread support directly into their frameworks:

Spring Boot–based services automatically benefit from parallel execution in field resolvers.

Developers don’t need to use new APIs or change annotations.

The thread scheduling model remains abstracted away, preserving familiar development workflows.

This on-by-default approach works because virtual threads are cheap. The JVM multiplexes many of them onto a small pool of carrier threads, and a blocked virtual thread releases its carrier. That makes them suitable even in high-volume paths where traditional thread-per-request models fall apart.
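The low per-thread cost is easy to see with a variation on the example in JEP 444, the JEP that finalized virtual threads in JDK 21: start thousands of tasks that each block briefly. The `ManyVirtualThreads` class is hypothetical. With one virtual thread per task, a blocked task parks without holding an OS thread. The same number of platform threads would each need their own OS thread and stack.

```java
import java.time.Duration;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.atomic.AtomicInteger;

// Sketch (modeled on the JEP 444 example): many short blocking tasks,
// each on its own virtual thread.
final class ManyVirtualThreads {
    static int run(int tasks) {
        AtomicInteger completed = new AtomicInteger();
        try (ExecutorService executor = Executors.newVirtualThreadPerTaskExecutor()) {
            for (int i = 0; i < tasks; i++) {
                executor.submit(() -> {
                    Thread.sleep(Duration.ofMillis(100)); // parks the virtual thread
                    completed.incrementAndGet();
                    return null; // value-returning lambda, so it is a Callable and may throw
                });
            }
        } // close() waits until every submitted task has finished
        return completed.get();
    }

    public static void main(String[] args) {
        long start = System.nanoTime();
        int done = run(10_000);
        long elapsedMs = (System.nanoTime() - start) / 1_000_000;
        System.out.println(done + " tasks finished in " + elapsedMs + " ms");
    }
}
```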

Trade-Offs

Virtual threads aren't magic. Early experiments revealed a specific failure mode: deadlocks caused by thread pinning.

Here's what happened:

Some libraries used synchronized blocks or methods.

Before JDK 24, when a virtual thread blocked inside a synchronized block or method, it stayed pinned to its carrier platform thread instead of unmounting from it.

If enough pinned virtual threads block at the same time, they can occupy every carrier thread. If the virtual thread holding the lock they are waiting for has been unmounted, it can never be scheduled again to release that lock, and the system deadlocks.

Netflix encountered this exact scenario in production.

The issue was serious enough that Netflix temporarily backed off aggressive virtual thread adoption. JDK 24 addressed the problem directly: JEP 491 reworked the implementation of synchronized so that virtual threads can block inside synchronized code without pinning their carrier thread.
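Before JDK 24, JEP 444 suggested a mitigation for this problem. Frequently executed code that guards long blocking operations could use `java.util.concurrent.locks.ReentrantLock` instead of `synchronized`, because a virtual thread that blocks on a `ReentrantLock` can unmount from its carrier. Here is a minimal sketch, assuming a hypothetical `TokenCache` whose refresh blocks while holding the lock:

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.locks.ReentrantLock;

// Sketch of a pre-JDK 24 mitigation: guard a blocking section with a
// ReentrantLock instead of `synchronized`, so virtual threads that block while
// holding or waiting for the lock can unmount from their carrier threads.
final class TokenCache {
    private final ReentrantLock lock = new ReentrantLock();
    private int refreshes = 0;

    // Hypothetical refresh that performs blocking I/O while holding the lock.
    void refresh() {
        lock.lock();
        try {
            Thread.sleep(1); // stands in for a blocking network call
            refreshes++;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        } finally {
            lock.unlock();
        }
    }

    int refreshes() {
        lock.lock();
        try {
            return refreshes;
        } finally {
            lock.unlock();
        }
    }

    // Runs `tasks` refreshes, each on its own virtual thread.
    static int runConcurrently(int tasks) {
        TokenCache cache = new TokenCache();
        try (ExecutorService executor = Executors.newVirtualThreadPerTaskExecutor()) {
            for (int i = 0; i < tasks; i++) {
                executor.submit(cache::refresh);
            }
        } // close() waits for all submitted tasks
        return cache.refreshes();
    }

    public static void main(String[] args) {
        System.out.println(runConcurrently(1_000)); // 1000
    }
}
```

On JDK 24 and later, the same code written with `synchronized` no longer pins in this situation, so the rewrite mainly matters for services still on JDK 21 to 23.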

With that change in place, the engineering team was able to once again push forward. The performance and simplicity gains are too good to ignore, and now the risk has been meaningfully reduced.

Why Netflix Moved Away from RxJava

Netflix helped pioneer reactive programming in the Java ecosystem. RxJava, one of the earliest and most influential reactive libraries, was born in-house. For years, reactive abstractions shaped the way services handled high-concurrency workloads.

Reactive programming excels when applied end-to-end: network IO, computation, and data storage all wrapped in non-blocking, event-driven flows. But that model requires total buy-in. In practice, most systems fall somewhere in between: some async libraries, some blocking IO, and a lot of legacy code. The result is a brittle mix of concurrency models that’s hard to reason about, hard to debug, and easy to get wrong.

One frequent pain point was combining a thread-per-request model with a reactive HTTP client like WebClient. Even when it worked, it introduced two concurrency layers (one blocking, one non-blocking), creating complex failure modes and resource contention. It was effective for certain fan-out use cases, but operationally expensive.

The introduction of virtual threads shifted the equation. As mentioned, they allow thousands of concurrent blocking operations without the overhead of traditional threads. Combined with structured concurrency, they let developers express complex concurrent workflows as plain sequential code, without the callback chains of reactive programming.
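To illustrate the difference in shape, this hedged sketch combines two independent calls twice: once as a `CompletableFuture` pipeline and once as plain blocking code on virtual threads. `loadProfile` and `loadRecommendations` are hypothetical stand-ins for RPC calls. The JDK's `StructuredTaskScope` is still a preview API whose shape has changed between releases. The blocking version therefore approximates its scoping with a try-with-resources block around a virtual-thread executor. It does not provide structured concurrency's automatic cancellation of sibling tasks on failure.

```java
import java.util.List;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

// Sketch contrasting two ways to combine two independent calls.
final class HomePage {
    // Hypothetical blocking calls standing in for RPCs to two services.
    static String loadProfile(String userId) { return "profile(" + userId + ")"; }
    static List<String> loadRecommendations(String userId) { return List.of("show-1", "show-2"); }

    // Async-composition style: results flow through callback stages.
    static CompletableFuture<String> renderAsync(String userId) {
        CompletableFuture<String> profile = CompletableFuture.supplyAsync(() -> loadProfile(userId));
        CompletableFuture<List<String>> recs = CompletableFuture.supplyAsync(() -> loadRecommendations(userId));
        return profile.thenCombine(recs, (p, r) -> p + " " + r);
    }

    // Virtual-thread style: ordinary blocking calls. The try block bounds the
    // lifetime of both subtasks, and close() waits for any still running.
    static String renderBlocking(String userId) {
        try (ExecutorService executor = Executors.newVirtualThreadPerTaskExecutor()) {
            Future<String> profile = executor.submit(() -> loadProfile(userId));
            Future<List<String>> recs = executor.submit(() -> loadRecommendations(userId));
            // Blocking in get() is cheap when the caller itself runs on a virtual thread.
            return profile.get() + " " + recs.get();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } catch (ExecutionException e) {
            throw new IllegalStateException(e.getCause());
        }
    }

    public static void main(String[] args) {
        System.out.println(renderAsync("u1").join()); // profile(u1) [show-1, show-2]
        System.out.println(renderBlocking("u1"));     // profile(u1) [show-1, show-2]
    }
}
```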

Having said that, reactive programming still has its place. In services with long IO chains, backpressure concerns, or streaming workloads, reactive APIs remain useful. However, for the bulk of Netflix’s backend, which involves RPC calls, in-memory joins, and tight response times, virtual threads and structured concurrency offer the same benefits with lower complexity.

The Spring Boot Netflix Stack

Netflix standardizes its backend services on Spring Boot, not as an off-the-shelf framework, but as a base for a deeply integrated, extensible platform. Every service runs on what the team calls “Spring Boot Netflix”: a curated stack of modules that layer company-specific infrastructure into the familiar Spring ecosystem.

This design keeps the programming model clean. Developers use standard Spring annotations and idioms. Under the hood, Netflix wires in custom logic for everything from authentication to service discovery.

The Spring Boot Netflix stack includes:

Security integration with Netflix’s authentication and authorization systems, exposed through standard Spring Security annotations like @Secured and @PreAuthorize.

Observability support using Spring’s Micrometer APIs, connected to internal tracing, metrics, and logging pipelines built to handle Netflix-scale telemetry.

Service mesh integration for all traffic via a proxy-based system (built on ProxyD), handling TLS, service discovery, and retry policies transparently.

gRPC framework based on annotation-driven programming models, letting engineers write gRPC services with the same approach as REST controllers.

Dynamic configuration with “fast properties”: runtime-changeable settings that avoid service restarts and enable live tuning during incidents.

Retryable clients wrapped around gRPC and WebClient to enforce timeouts, retries, and fallback strategies out of the box.
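The last two items can be sketched together: a setting that can change while the service runs, and a client wrapper that reads it on every request. Everything here is hypothetical, including `FastProperty`, `RetryingClient`, and the attempt counts. It is not Netflix's fast-properties library, only an illustration that assumes some config service pushes new values through `set`.

```java
import java.util.concurrent.atomic.AtomicInteger;
import java.util.concurrent.atomic.AtomicReference;
import java.util.function.Supplier;

// Hypothetical retrying wrapper whose retry budget comes from a live setting.
final class RetryingClient {
    private final FastProperty<Integer> maxAttempts;

    RetryingClient(FastProperty<Integer> maxAttempts) {
        this.maxAttempts = maxAttempts;
    }

    // Tries `operation` up to maxAttempts times. The limit is re-read on every
    // request, so a live config change applies to the next call.
    <T> T call(Supplier<T> operation, T fallback) {
        int attempts = maxAttempts.get();
        for (int i = 0; i < attempts; i++) {
            try {
                return operation.get();
            } catch (RuntimeException e) {
                // Treated as transient; a real client would back off and retry only idempotent calls.
            }
        }
        return fallback; // every attempt failed
    }

    public static void main(String[] args) {
        FastProperty<Integer> maxAttempts = new FastProperty<>(1);
        RetryingClient client = new RetryingClient(maxAttempts);
        AtomicInteger failuresLeft = new AtomicInteger(2); // fail twice, then succeed
        Supplier<String> flaky = () -> {
            if (failuresLeft.getAndDecrement() > 0) throw new IllegalStateException("transient");
            return "ok";
        };
        System.out.println(client.call(flaky, "fallback")); // fallback (1 attempt allowed)
        maxAttempts.set(3);                                  // live change, no restart
        System.out.println(client.call(flaky, "fallback")); // ok (succeeds on the 2nd attempt)
    }
}

// Hypothetical runtime-changeable setting: readers always see the latest value.
final class FastProperty<T> {
    private final AtomicReference<T> value;

    FastProperty(T initial) { this.value = new AtomicReference<>(initial); }

    T get() { return value.get(); }

    // A config service would push new values here without a restart.
    void set(T newValue) { value.set(newValue); }
}
```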

Netflix stays closely aligned with Spring Boot upstream. Minor versions roll out to the fleet within days. For major releases, the team builds tooling and compatibility layers to smooth the upgrade path.

The move to Spring Boot 3 required migrating from javax.* to jakarta.* namespaces. It was a breaking change that affected many libraries. Rather than wait for external updates, Netflix built a Gradle plugin that performs bytecode transforms at artifact resolution time. This plugin rewrites compiled classes to use the new Jakarta APIs, allowing Spring Boot 2-era libraries to work on Spring Boot 3 without source changes.
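The core of such a transform is a renaming rule applied to class references inside already-compiled code. Here is a minimal sketch of just that rule, operating on JVM internal names (`javax/servlet/...`). The `JakartaRename` class and its package list are illustrative assumptions, not Netflix's plugin. A real implementation would apply the rule to every type reference and descriptor in each class file, for example with ASM's `ClassRemapper`, and could hook into Gradle through its artifact transform API.

```java
import java.util.List;

// Sketch of the javax -> jakarta renaming rule, applied to JVM internal class
// names (slash-separated, as stored in class files). Illustrative only.
final class JakartaRename {
    // A subset of Jakarta EE packages that moved. JDK packages under javax
    // (e.g. javax/sql, javax/crypto) did not move and must not be renamed.
    private static final List<String> MOVED_PREFIXES = List.of(
            "javax/servlet/", "javax/persistence/", "javax/validation/",
            "javax/ws/rs/", "javax/inject/");

    static String remap(String internalName) {
        for (String prefix : MOVED_PREFIXES) {
            if (internalName.startsWith(prefix)) {
                return "jakarta/" + internalName.substring("javax/".length());
            }
        }
        return internalName; // not a moved package: leave untouched
    }

    public static void main(String[] args) {
        System.out.println(remap("javax/servlet/http/HttpServletRequest")); // jakarta/servlet/http/HttpServletRequest
        System.out.println(remap("javax/sql/DataSource"));                 // javax/sql/DataSource
    }
}
```

The prefix list is the delicate part. Only Jakarta EE packages moved, so a blanket `javax` to `jakarta` rewrite would break references to JDK classes.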

Conclusion

Netflix’s Java architecture in 2025 isn’t a relic of the past, but a deliberate, modern engineering system. What makes it interesting isn’t the choice of language, but the way that choice is continuously reevaluated, optimized, and aligned with real-world constraints.

The system isn’t static. Netflix pushed past the limits of Java 8 by aggressively upgrading its stack, not by rewriting it. It embraced Spring Boot as a foundation but extended it to meet the unique demands of a global streaming platform. It adopted GraphQL for flexibility, virtual threads for concurrency, and ZGC for performance.

Some key takeaways are as follows:

Java is still competitive when treated as an ecosystem. Netflix extracts significant performance gains from modern JVM features. It doesn’t settle for default settings or old frameworks. Evolution is deliberate.

Owning the platform enables speed. Building in-house tooling for patching, transforming, and deploying applications turns upgrades from a risk into routine. Platform ownership gives Netflix leverage.

Virtual threads reduce complexity. They preserve a familiar coding style while scaling better under load. The payoff is cleaner code, fewer bugs, and simpler mental models.

Tuning infrastructure, not just code, improves reliability. Upgrading the garbage collector and optimizing thread behavior led to fewer timeouts, lower error rates, and more consistent throughput across the board.

References:
