How Netflix Warms Petabytes of Cache Data

Disclaimer: The details in this post have been derived from the Netflix Tech Blog. All credit for the technical details goes to the Netflix engineering team. The links to the original articles are present in the references section at the end of the post. We’ve attempted to analyze the details and provide our input about them. If you find any inaccuracies or omissions, please leave a comment, and we will do our best to fix them.

The goal of Netflix is to keep users streaming for as long as possible.

However, a user’s typical attention span is just 90 seconds. If the streaming application is not responding fast enough, there is a high chance that the user will drop off.

EVCache is one tool that helps Netflix reduce latency on their streaming app by supporting multiple use cases such as:

Lookaside Cache: When the application needs some data, it first tries the EVCache client and if the data is not in the cache, it goes to the backend service and the Cassandra database to fetch the data.

Transient Data Store: Netflix uses EVCache to keep track of transient data such as playback session information. One application service might start the session, another might update it, and the session is closed at the end.

Primary Store: Netflix runs large-scale pre-compute systems every night to compute a brand-new home page for every profile belonging to every user based on the watch history and recommendations. All of that data is written into the EVCache cluster from where the online services read the data and build the homepage.

High Volume Data: Netflix has data that is accessed at high volume and also needs to be highly available. For example, UI strings and translations are shown on Netflix's home page. A separate process asynchronously computes and publishes the UI strings to EVCache, from where the application can read them with low latency and high availability.
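To make the lookaside pattern concrete, here is a minimal sketch. It is not the EVCache client API: a plain dictionary stands in for the cache, and `LookasideCache` and `load_from_backend` are hypothetical names chosen for illustration.

```python
from typing import Callable, Dict, Optional


class LookasideCache:
    """Toy lookaside cache; a dict stands in for the real cache client."""

    def __init__(self, load_from_backend: Callable[[str], Optional[str]]) -> None:
        self._cache: Dict[str, str] = {}
        self._load = load_from_backend  # hypothetical backend/database lookup
        self.hits = 0
        self.misses = 0

    def get(self, key: str) -> Optional[str]:
        # 1. Try the cache first.
        if key in self._cache:
            self.hits += 1
            return self._cache[key]
        # 2. On a miss, fall back to the backend.
        self.misses += 1
        value = self._load(key)
        # 3. Store the result so the next read is served from the cache.
        if value is not None:
            self._cache[key] = value
        return value
```

Calling `get` twice for the same key reaches the backend only once; the second read is a cache hit.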

The diagram below shows the various use cases in more detail.

Netflix uses EVCache as a tier-1 cache. For reference, it is a distributed in-memory caching solution based on memcached that is integrated with Netflix OSS and AWS EC2 infrastructure.

At Netflix, EVCache holds petabytes of data spread across thousands of nodes and hundreds of clusters in production. These clusters are routinely scaled up as the Netflix user base and the data it generates keep growing.

The earlier process for growing the cache followed these steps:

Set up a new, empty cache replica (group of nodes).

Write data to both the old and new cache replica at the same time.

Wait for the data in the old cache to expire.

Then, switch to using just the new, bigger cache replica.

See the diagram below for reference:

This approach worked, but it was expensive because they had to run two systems simultaneously for a while. Also, this solution had a few more problems:

It didn’t work well for data that never expires.

It was inefficient for data that doesn’t change often.

When they replaced parts of the system, it could cause a slowdown because the new nodes weren’t warm, resulting in cache misses.
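The earlier dual-write approach, and why it struggles with data that never expires, can be illustrated with a small sketch. Dictionaries stand in for the old and new replicas, and the class and method names are hypothetical.

```python
from typing import Dict, Optional


class DualWriteScaler:
    """Toy model of scaling by writing to the old and new replica together."""

    def __init__(self, old_replica: Dict[str, str]) -> None:
        self.old = old_replica
        self.new: Dict[str, str] = {}  # starts empty (cold)
        self.reads_from_new = False

    def set(self, key: str, value: str) -> None:
        # During the transition every write goes to both replicas.
        self.old[key] = value
        self.new[key] = value

    def get(self, key: str) -> Optional[str]:
        source = self.new if self.reads_from_new else self.old
        return source.get(key)

    def switch_to_new(self) -> None:
        # Final step: serve reads from the new replica only.
        self.reads_from_new = True
```

In this model, a key that was written before the dual-write window and never rewritten exists only in the old replica, so it becomes a cache miss after the switch. With expiring data the problem resolves itself once the TTL passes; with non-expiring data it does not.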

To fix these problems, Netflix created a new tool called the cache warmer. This tool had two main features:

Replica Warmer: When Netflix adds new storage units (replicas), they need to fill them with data. The replica warmer quickly copies data from an existing storage unit to the new one. It does this without slowing down or disrupting the user experience for existing users.

Instance Warmer: Sometimes, a storage unit (node) needs to be replaced or shuts down unexpectedly. The instance warmer quickly fills the new or restarted unit with data by copying data from another healthy storage unit.

For Instance Warmer, they copy data to the new empty node from another replica in the system. This is done because the other nodes in the system have continued to operate and update data while this node was down or being replaced. By choosing a replica that doesn’t include this node, they ensure they’re getting the most up-to-date data from nodes that have been continuously operational.

Cache Warmer Design

The diagram below shows the architectural overview of the cache warming system built by Netflix.

The cache warming system consists of three main components:

Controller

Dumper

Populator

Let’s look at each component one by one.

Controller

The Controller is the manager of the entire cache warming process. It sets up the environment and creates a communication channel (SQS queue) between the Dumper and Populator.

Some of the key functions performed by the controller are as follows:

The Controller selects the source replica. If the replica is not user-specified, it is usually the one with the most data.

Next, it creates an SQS queue for communication and initiates the data dump on the source nodes.

After that, the Controller creates a new Populator cluster.

Finally, it monitors the process and cleans up the resources after the job is done.
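The Controller's sequence can be sketched roughly as follows. This is an illustration only: it makes no AWS calls, it assumes "most data" is measured by item count, and the function names and queue naming scheme are hypothetical.

```python
from typing import Dict, List, Optional


def select_source_replica(
    items_per_replica: Dict[str, int], requested: Optional[str] = None
) -> str:
    """Pick the replica to dump from."""
    if requested is not None:
        if requested not in items_per_replica:
            raise ValueError(f"unknown replica: {requested}")
        return requested
    # Default (assumption): the replica currently holding the most items.
    return max(items_per_replica, key=lambda name: items_per_replica[name])


def plan_warmup(
    items_per_replica: Dict[str, int],
    destination: str,
    requested_source: Optional[str] = None,
) -> List[str]:
    """Return the ordered steps the Controller would carry out."""
    source = select_source_replica(items_per_replica, requested_source)
    queue_name = f"cache-warmer-{source}-to-{destination}"  # hypothetical naming
    return [
        f"create queue {queue_name}",
        f"start dump on nodes of {source}",
        f"launch populator cluster for {destination}",
        "monitor progress",
        f"delete queue {queue_name} and populator cluster",
    ]
```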

Dumper

The Dumper is part of the EVCache sidecar, which is a separate service running alongside the main cache (memcached instance) on each node. Its job is to extract data from the existing cache.

The dumping process works as follows:

The Dumper lists all keys using the memcached LRU Crawler utility.

It saves keys into multiple “key-chunk” files.

For each key, it retrieves the value and additional data. This data is saved into “data-chunk” files.

It uploads the data chunks to S3 when they reach a certain size.

Lastly, it sends a message to the SQS queue with the S3 location of the data chunk.

The Dumper supports configurable chunk sizes for flexibility. It is also capable of parallel processing of multiple key chunks.
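A simplified sketch of the chunking logic is shown below. It is not Netflix's implementation: a dictionary stands in for memcached, another dictionary for S3, and a list for the SQS queue. The function names, JSON encoding, and object paths are assumptions, and item metadata is omitted for brevity.

```python
import json
from typing import Dict, Iterator, List


def split_into_key_chunks(keys: List[str], keys_per_chunk: int) -> Iterator[List[str]]:
    """Split the full key listing into fixed-size key chunks."""
    for start in range(0, len(keys), keys_per_chunk):
        yield keys[start:start + keys_per_chunk]


def dump_key_chunk(
    chunk_id: int,
    keys: List[str],
    cache: Dict[str, str],
    max_chunk_bytes: int,
    object_store: Dict[str, str],  # stand-in for S3
    queue: List[str],              # stand-in for the SQS queue
) -> None:
    """Read values for one key chunk and upload size-bounded data chunks."""
    buffer: List[Dict[str, str]] = []
    buffered_bytes = 0
    part = 0

    def flush() -> None:
        nonlocal buffer, buffered_bytes, part
        if not buffer:
            return
        location = f"dump/{chunk_id}/part-{part}.json"  # hypothetical path
        object_store[location] = json.dumps(buffer)
        queue.append(location)  # tell the Populator where the chunk lives
        buffer, buffered_bytes, part = [], 0, part + 1

    for key in keys:
        value = cache.get(key)
        if value is None:
            continue  # key expired or was evicted after the listing
        buffer.append({"key": key, "value": value})
        buffered_bytes += len(key) + len(value)
        if buffered_bytes >= max_chunk_bytes:
            flush()
    flush()  # upload whatever is left
```

Because each key chunk is dumped independently, several calls to `dump_key_chunk` could run in parallel, which matches the parallel processing described above.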

Populator

The Populator is responsible for filling the new cache with data from the Dumper.

Here’s how it works:

The Populator instance receives configuration (like SQS queue name) from the Controller.

It pulls messages from the SQS queue.

Next, it downloads data chunks from S3 based on these messages and inserts the data into the new cache replicas. It uses “add” operations to avoid overwriting newer data.

The Populator starts working as soon as data is available and doesn’t wait for the full dump. It can auto-scale based on the amount of data available to process.
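The role of the "add" operation can be shown with a short sketch. In memcached, `add` stores a value only if the key does not already exist, so a value written by live traffic is never replaced by an older dumped copy. Here a dictionary stands in for the new replica and for S3, a list stands in for the SQS queue, and the function names and JSON chunk format are assumptions.

```python
import json
from typing import Dict, List


def add(cache: Dict[str, str], key: str, value: str) -> bool:
    """Mimic memcached 'add': store only if the key is absent."""
    if key in cache:
        return False
    cache[key] = value
    return True


def populate(
    queue: List[str],              # stand-in for the SQS queue
    object_store: Dict[str, str],  # stand-in for S3
    new_replica: Dict[str, str],
) -> int:
    """Drain the queue and insert dumped entries; return how many were added."""
    added = 0
    while queue:
        location = queue.pop(0)
        for entry in json.loads(object_store[location]):
            if add(new_replica, entry["key"], entry["value"]):
                added += 1
    return added
```

If the new replica already holds a fresher value for a key, the dumped value for that key is skipped and the fresh value is kept.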

Instance Warmer

In a large EVCache deployment, individual nodes (servers) can sometimes be terminated or replaced due to hardware issues or other problems. This can cause:

Latency spikes or slowdowns for applications

Drops in cache hit rates if multiple replicas are affected

To minimize these issues, the Netflix engineering team also developed an Instance Warmer that can quickly fill up replaced or restarted nodes with data.

The diagram below shows the overall architecture of the Instance Warmer.

The Instance Warmer works as follows:

EVCache nodes are modified to send a signal to the Controller when they restart.

The Controller receives the restart signal from a node and checks if the node has less than its expected share of data. If so, it triggers the warming process. However, it doesn’t use the replica containing the restarted node as the data source.

The Controller initiates dumping on all nodes in other replicas. It specifies which nodes need warming and which replica they belong to.

The Dumper only extracts data for keys that would hash to the specific nodes being warmed.

Lastly, the Populator consumes these targeted data chunks. It fills only the specific nodes that need warming in the affected replica.

The instance warming process is more efficient than full replica warming because it deals with a smaller fraction of data on each node. Also, it is targeted to specific nodes rather than entire replicas.
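The targeting logic can be sketched as follows. The hash function here is a simple MD5-modulo stand-in chosen for illustration, not the hashing scheme EVCache actually uses, and all function names are hypothetical.

```python
import hashlib
from typing import Iterable, List, Set


def node_for_key(key: str, node_count: int) -> int:
    """Map a key to a node index (illustrative hashing, an assumption)."""
    digest = hashlib.md5(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % node_count


def source_replicas(all_replicas: Iterable[str], affected_replica: str) -> List[str]:
    """Every replica except the one containing the restarted node."""
    return [name for name in all_replicas if name != affected_replica]


def keys_to_warm(keys: Iterable[str], node_count: int, nodes_to_warm: Set[int]) -> List[str]:
    """Keep only the keys that hash to the nodes being warmed."""
    return [key for key in keys if node_for_key(key, node_count) in nodes_to_warm]
```

With this filter, the Dumper on a source node skips every key that would not land on a node being warmed, so the data moved is roughly proportional to the number of nodes replaced rather than to the size of the replica.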

The Implementation Results

Netflix uses the cache warmer extensively to scale clusters whose data has a TTL greater than a few hours. This has helped them handle holiday traffic efficiently.

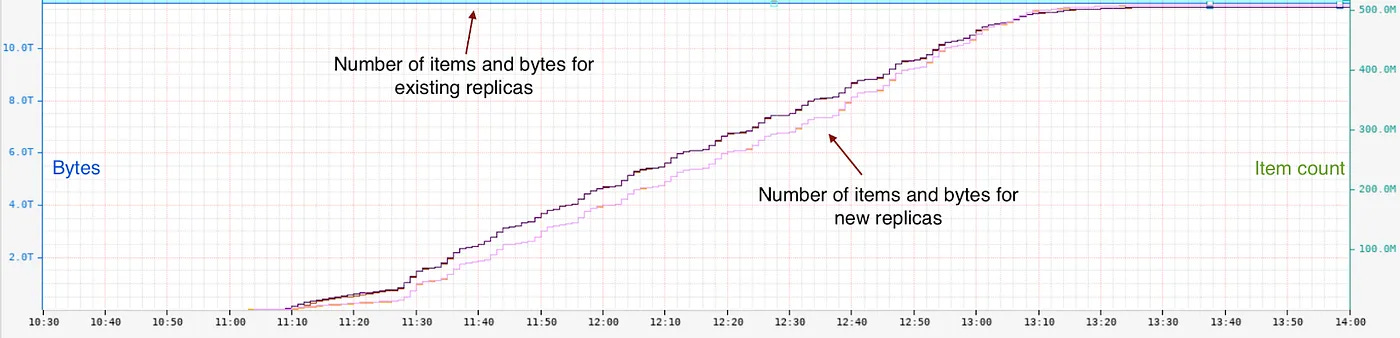

The chart below shows the warming up of two new replicas from one of the two existing replicas. Existing replicas had about 500 million items and 12 Terabytes of data. The warm-up took around 2 hours to complete.

The Instance Warmer is also running in production and warms up a few instances every day. The chart below shows an instance being replaced at around 5:27. It was warmed up in less than 15 minutes with about 2.2 GB of data and 15 million items.

Conclusion

In this post, we’ve looked at the cache-warming architecture implemented by Netflix in detail.

This flexible cache-warming architecture has allowed Netflix to warm petabytes of cache data by copying it from existing replicas to one or more new replicas. Also, it has made it easy to warm specific nodes that were terminated or replaced due to hardware issues.

One key takeaway is that the cache warmer follows a loosely coupled design in which the Dumper and Populator are integrated through SQS. This shows how loosely coupled systems provide flexibility and extensibility in the long run as Netflix continues to enhance its system for greater efficiency.

References: