How Salesforce Used AI To Reduce Test Failure Resolution Time By 30%

Disclaimer: The details in this post have been derived from the details shared online by the Salesforce Engineering Team. All credit for the technical details goes to the Salesforce Engineering Team. The links to the original articles and sources are present in the references section at the end of the post. We’ve attempted to analyze the details and provide our input about them. If you find any inaccuracies or omissions, please leave a comment, and we will do our best to fix them.

Modern software systems run on millions of automated tests every single day. At Salesforce, this testing ecosystem operates at an enormous scale.

The company runs about 6 million tests daily, covering more than 78 billion possible test combinations. These tests generate around 150,000 failures every month, while engineers submit more than 27,000 code changelists each day.

Before automation, dealing with these failures was a slow and tiring process. Developers had to spend hours going through error logs, changelists, and internal tracking systems like GUS to figure out what went wrong. Integration failures were especially difficult because any of the 30,000 engineers across the company could be responsible for a given issue. This made it hard to find the root cause and fix the problem quickly.

The result was a growing backlog of unresolved failures, increasing developer frustration, and long delays. On average, it took about seven days to resolve a single test failure. The Salesforce engineering team recognized that this was not sustainable. They needed a faster, more reliable way to handle failures and keep the development process moving smoothly. This challenge set the stage for building an AI-powered solution to remove these bottlenecks.

In this article, we will look at how Salesforce developed such a system and the key takeaways from their journey.

The Goal of the System

Salesforce has a dedicated Platform Quality Engineering team that plays a critical role in the software development process.

This team acts as the final line of defense before any code is released to customers. While individual scrum teams focus on testing their own products in isolation, the Platform Quality Engineering team goes a step further. They run integration tests across multiple products to make sure everything works well together as one unified system.

This focus on integration is important because customers often use several Salesforce products in combination. A product might work perfectly on its own, but when used together with others, unexpected problems can appear. Customers sometimes describe this as the products feeling like they come from different companies. The Platform Quality Engineering team exists to catch these integration bugs early, before they ever reach customers. Fixing bugs after deployment is expensive and time-consuming, so identifying them early is a major priority for Salesforce.

Beyond this core testing role, the team is always looking for ways to automate engineering workflows and make developers more productive. One of the most time-consuming parts of their work was triaging large numbers of test failures.

To address this, the Salesforce engineering team set three clear goals:

- Reduce the amount of manual time engineers spend diagnosing failures
- Give developers clear, context-aware recommendations that help them fix issues quickly
- Build trust in AI tools by avoiding vague or incorrect suggestions that could waste time

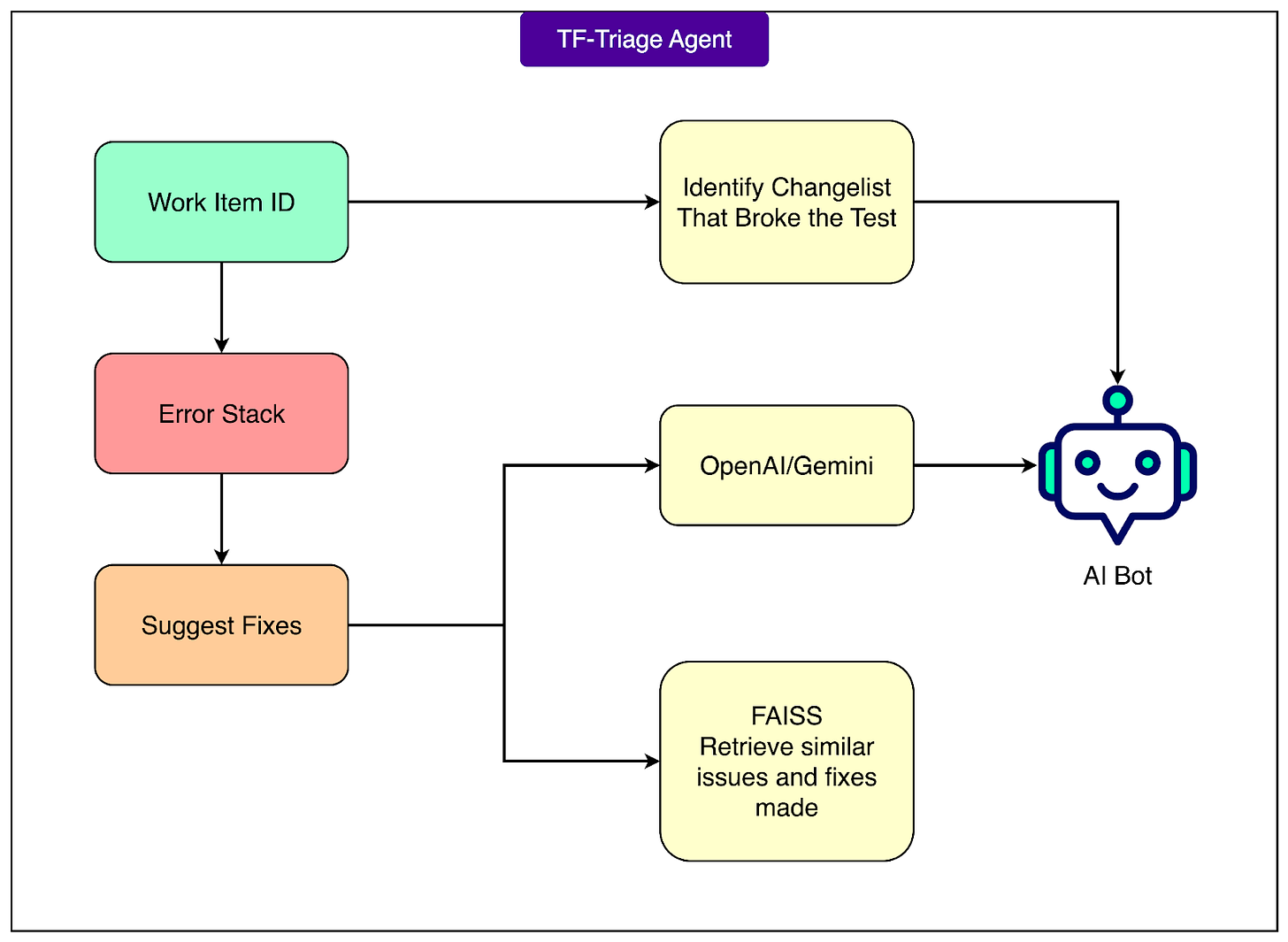

To meet these goals, Salesforce built the Test Failure (TF) Triage Agent, an AI-powered system that provides concrete recommendations within seconds of a failure occurring.

The TF Triage Agent is designed to transform what used to be a slow, manual triage process into a fast and reliable automated workflow. This system fits directly into the team’s mission of maintaining high product quality at scale while keeping the development process efficient.

AI and Automation Architecture

To build an AI-powered system that could process millions of test results quickly and accurately, the Salesforce engineering team designed a specialized AI and automation architecture.

This architecture had to work with massive amounts of noisy, unstructured error data while keeping response times under 30 seconds. Achieving this required a combination of intelligent data processing, search techniques, and careful system design.

Here are the main technical components of the architecture:

1 - Semantic Search with FAISS

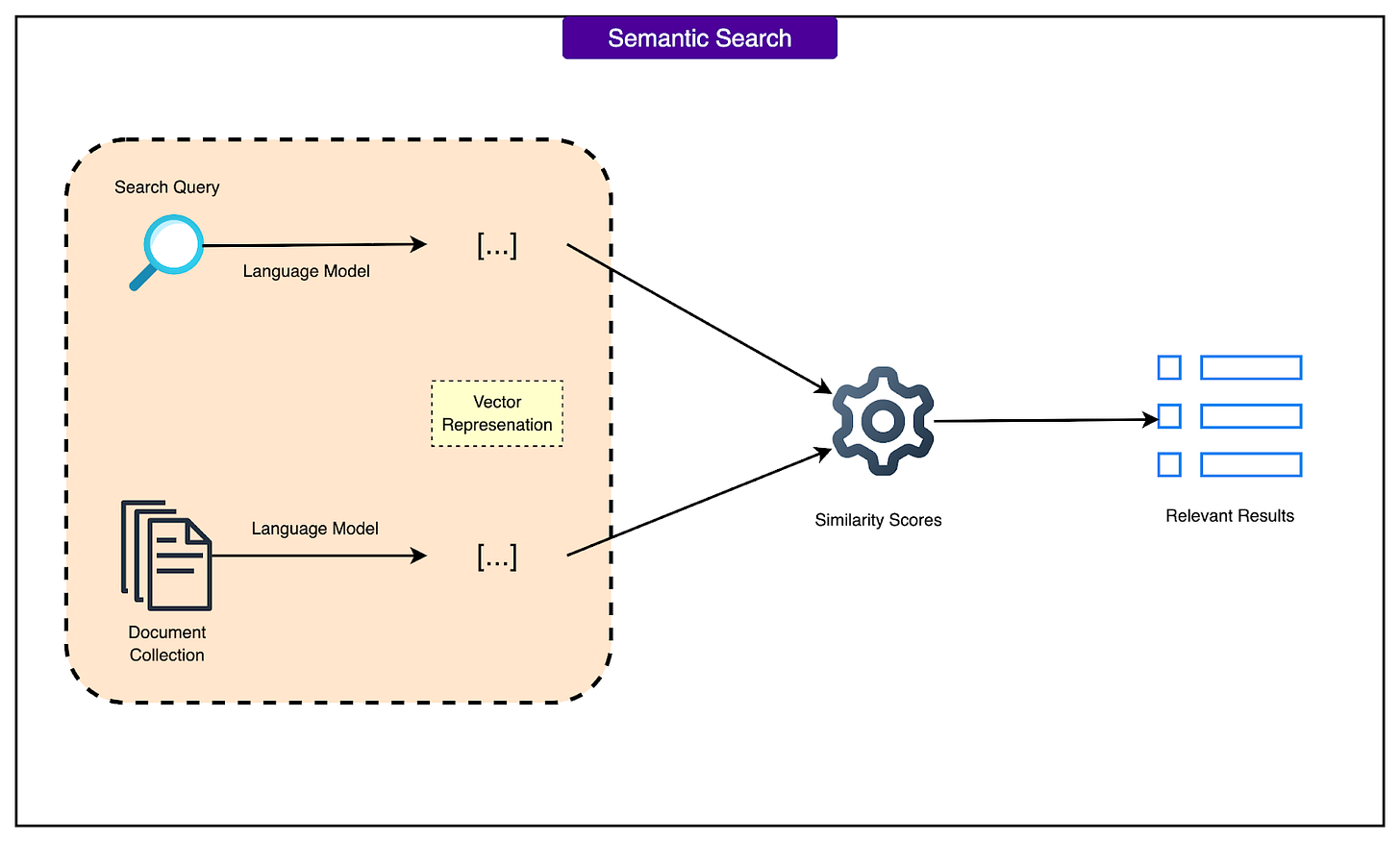

Salesforce used FAISS (Facebook AI Similarity Search) to create a semantic search index of historical test failures and their resolutions. FAISS is a library that allows very fast similarity searches between data represented as vectors.

Every time a new test failure occurs, the system performs a vector similarity search against this index to find past failures that look similar. This makes it possible to match a new error with previously fixed problems and suggest likely solutions. FAISS replaced older lookups that relied on SQL databases, which were too slow for real-time use at Salesforce’s scale.
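To make the idea of a vector similarity search concrete, here is a minimal sketch in plain Python. It is not Salesforce's implementation and it does not use FAISS itself: it is a brute-force cosine-similarity search, which is the kind of exact lookup a flat FAISS index performs far more efficiently. The `FailureIndex` class, the three-dimensional vectors, and the failure records are all made up for illustration.

```python
import math

def normalize(vec):
    """Scale a vector to unit length so a dot product equals cosine similarity."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm else list(vec)

class FailureIndex:
    """Toy in-memory index (hypothetical): brute-force search over unit vectors."""

    def __init__(self):
        self._vectors = []
        self._records = []

    def add(self, vector, record):
        self._vectors.append(normalize(vector))
        self._records.append(record)

    def search(self, query, k=3):
        """Return the k most similar stored records as (score, record) pairs."""
        q = normalize(query)
        scored = [
            (sum(a * b for a, b in zip(q, v)), rec)
            for v, rec in zip(self._vectors, self._records)
        ]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return scored[:k]

# Illustrative historical failures; real embeddings would have hundreds of dimensions.
index = FailureIndex()
index.add([0.9, 0.1, 0.0], {"failure": "NullPointerException in AccountService", "fix": "add null check"})
index.add([0.0, 0.2, 0.9], {"failure": "Timeout calling search service", "fix": "raise client timeout"})
index.add([0.8, 0.3, 0.1], {"failure": "NullPointerException in ContactService", "fix": "initialise field"})

# A new failure whose (made-up) embedding sits close to the two NullPointerException cases.
results = index.search([1.0, 0.2, 0.0], k=2)
for score, rec in results:
    print(f"{score:.3f}  {rec['failure']}  ->  {rec['fix']}")
```

A brute-force scan like this is linear in the number of stored failures, which is why a dedicated similarity-search library becomes attractive once the history grows large.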

2 - Contextual Embeddings and Parsing Pipelines

Error logs and code snippets are often messy and inconsistent. To make them useful for semantic search, the Salesforce engineering team built parsing pipelines that clean and structure the data before it is processed.

Once the data is cleaned, the system generates contextual embeddings, which are mathematical representations that capture the meaning of code snippets and error messages. By embedding both error stacks and historical fixes, the system can compare them in a meaningful way and identify the most probable solutions for a new failure.
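As a rough illustration of the clean-then-embed step, the sketch below normalizes volatile tokens in a log line and then turns the cleaned text into a vector. Everything here is an assumption made for the example: the regular expressions, the placeholder tokens, and especially the `embed` function, which uses a simple hashing trick as a stand-in for a learned contextual embedding model. The source does not describe which model or parsing rules Salesforce uses.

```python
import hashlib
import re

def clean_log_line(line):
    """Replace volatile tokens so the same error looks the same across runs."""
    line = re.sub(r"\d{4}-\d{2}-\d{2}[T ]\d{2}:\d{2}:\d{2}(\.\d+)?", "<ts>", line)
    line = re.sub(r"0x[0-9a-fA-F]+", "<addr>", line)   # memory addresses
    line = re.sub(r"\b\d+\b", "<num>", line)           # line numbers, ids, counts
    return line.strip().lower()

def embed(text, dims=64):
    """Hashing-trick bag-of-words vector; a stand-in for a real embedding model."""
    vec = [0.0] * dims
    for token in re.findall(r"[a-z_<>]+", text):
        digest = hashlib.md5(token.encode("utf-8")).digest()
        vec[int.from_bytes(digest[:4], "big") % dims] += 1.0
    return vec

# Two made-up log lines that differ only in timestamp, line number, and address.
a = "2024-05-01 12:00:03 ERROR NullPointerException at AccountService.java:42 (obj 0x7f3a)"
b = "2024-06-11 08:15:59 ERROR NullPointerException at AccountService.java:57 (obj 0x1b2c)"

print(clean_log_line(a))
print(embed(clean_log_line(a)) == embed(clean_log_line(b)))
```

The point of the cleaning step is visible in the last line: once run-specific noise is removed, two occurrences of the same underlying error map to the same vector, so a similarity search can match them.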

3 - Asynchronous and Decoupled Pipelines

The team designed the pipelines to work asynchronously and to be decoupled from the main CI/CD workflows. This means that the AI triage process runs in parallel, without slowing down code integration or testing activities.

This design choice is critical for speed. Instead of making developers wait for the AI system to finish, the pipelines process failures independently and return recommendations quickly, keeping overall latency low.
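One way to picture this decoupling is a queue between the CI job and the triage worker. The sketch below uses Python's `asyncio` to show the pattern. The function names, the event shape, and the simulated latency are hypothetical, and the real system presumably uses production messaging infrastructure rather than an in-process queue.

```python
import asyncio

async def triage_worker(queue, recommendations):
    """Consume failure events independently of the CI job that produced them."""
    while True:
        failure = await queue.get()
        await asyncio.sleep(0.01)  # placeholder for search + LLM latency
        recommendations[failure["test"]] = f"see similar past failure for {failure['error']}"
        queue.task_done()

async def ci_run(queue, log):
    """Simulated CI job: report failures to the queue and keep going."""
    for test, error in [("test_login", "Timeout"), ("test_export", "NullPointerException")]:
        queue.put_nowait({"test": test, "error": error})  # non-blocking hand-off
        log.append(f"CI continued after {test}")

async def main():
    queue = asyncio.Queue()
    recommendations, log = {}, []
    worker = asyncio.create_task(triage_worker(queue, recommendations))
    await ci_run(queue, log)
    # The CI job has finished, yet no recommendation has been produced so far.
    ci_done_before_triage = len(recommendations) == 0
    await queue.join()  # wait for triage only so the demo can show results
    worker.cancel()
    return recommendations, log, ci_done_before_triage

recommendations, log, ci_done_before_triage = asyncio.run(main())
print(log)
print(recommendations)
```

The CI side only ever performs a non-blocking `put_nowait`, so a slow or failing triage step cannot hold up test execution.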

4 - Hybrid of LLM Reasoning and Semantic Search

The Salesforce engineering team combined semantic search with large language model (LLM) reasoning to get the best of both worlds.

The semantic search step finds the most relevant historical examples, and the LLM interprets and refines these results to produce clear and specific guidance. This approach ensures that developers receive precise recommendations instead of vague or generic answers. It also helps avoid speculative outputs that can reduce developer trust in AI tools.
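The hybrid flow can be sketched as a retrieve-then-prompt function. This is an illustration under stated assumptions, not the TF Triage Agent's actual logic: the prompt wording, the `min_score` threshold used to decline weak matches, and the `fake_llm` stand-in (which simply echoes the top-ranked fix so the example runs without a model) are all hypothetical.

```python
def build_prompt(new_failure, matches):
    """Ground the model in retrieved examples instead of asking it to guess."""
    lines = [
        "You are helping triage a test failure.",
        f"New failure: {new_failure}",
        "Similar past failures and their fixes:",
    ]
    for i, (score, rec) in enumerate(matches, 1):
        lines.append(f"{i}. (similarity {score:.2f}) {rec['failure']} -> {rec['fix']}")
    lines.append("Recommend one concrete fix, citing the example you relied on.")
    return "\n".join(lines)

def recommend(new_failure, matches, llm, min_score=0.8):
    """Call the model only when retrieval found strong evidence (assumed threshold)."""
    strong = [m for m in matches if m[0] >= min_score]
    if not strong:
        return "No sufficiently similar past failure found; manual triage needed."
    return llm(build_prompt(new_failure, strong))

def fake_llm(prompt):
    """Stand-in for a real model call; echoes the top-ranked fix from the prompt."""
    first = next(line for line in prompt.splitlines() if line.startswith("1. "))
    return "Likely fix: " + first.split(" -> ", 1)[1]

# Made-up retrieval results as (similarity, record) pairs.
matches = [
    (0.97, {"failure": "NullPointerException in AccountService", "fix": "add null check"}),
    (0.42, {"failure": "Timeout calling search service", "fix": "raise client timeout"}),
]
weak_matches = [(0.30, {"failure": "Disk full on build agent", "fix": "clean workspace"})]

answer = recommend("NullPointerException in LeadService", matches, fake_llm)
fallback = recommend("Unknown native crash", weak_matches, fake_llm)
print(answer)
print(fallback)
```

Declining to answer when no strong match exists is one simple way to keep the output specific rather than speculative, at the cost of sending some failures back to manual triage.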

Development Approach with Cursor

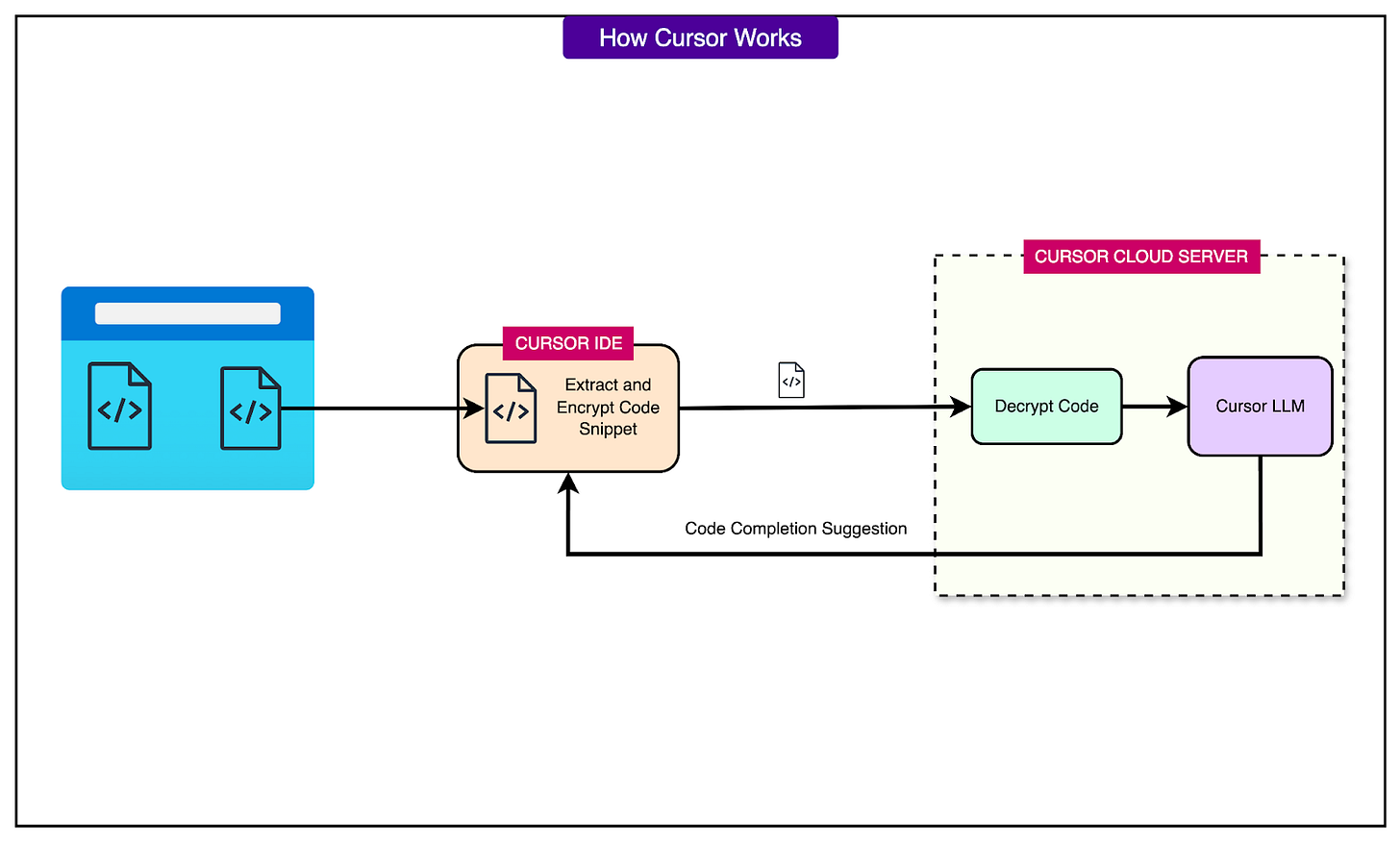

To build the TF Triage Agent quickly and effectively, the Salesforce engineering team decided to use Cursor, an AI-powered pair programming and code-retrieval tool. This decision played a major role in speeding up development and reducing unnecessary engineering effort.

Normally, building a system like this would have taken several months of manual work. By using Cursor, the Salesforce engineering team was able to complete the project in just four to six weeks. Cursor’s strength lies in its deep integration with the codebase and its ability to provide real-time, contextually relevant code references while engineers are working.

During development, when the team needed to add a new similarity engine to the TF Triage Agent, Cursor made it easy to find existing code patterns that were already implemented elsewhere in the system. This meant engineers did not have to reinvent the wheel for every new component. Instead, they could quickly understand and reuse proven approaches.

Cursor was also valuable when the team faced scaling challenges. Instead of relying on trial and error, engineers could explore multiple architectural options suggested by Cursor and make informed decisions quickly. This ability to iterate fast helped the team build a more reliable and scalable system in a shorter time.

Another key benefit was that Cursor allowed Salesforce engineers to focus their time and energy on the core failure triage logic, which was the most complex and valuable part of the project. Tasks like searching through legacy code or writing repetitive boilerplate were handled much more efficiently with Cursor’s assistance.

Conclusion

By building and deploying the TF Triage Agent, the Salesforce engineering team was able to transform a slow, manual process into a fast and reliable automated workflow.

Some of the key lessons from this project are as follows:

By using vector search and embeddings for historical failure data, the system can retrieve the most relevant past solutions quickly and accurately. Adding context-rich prompts and LLM reasoning on top of this improves both the precision of recommendations and the level of trust developers place in the system.

The team’s decision to build asynchronous pipelines ensured that triage runs efficiently without blocking critical CI/CD processes. This architectural choice allowed the system to scale smoothly even as it processed millions of tests every day.

Another key factor was the use of AI development tools like Cursor, which helped shorten the build cycle from months to just a few weeks.

Finally, the Salesforce engineering team approached deployment thoughtfully, using incremental rollout and trust-building through concrete data. This ensured that developers adopted the system with confidence and experienced clear improvements in their daily workflows.

Together, these decisions led to a 30 percent reduction in test failure resolution time and a significant boost in developer productivity.

Reference:
