How Spotify Uses GenAI and ML to Annotate a Hundred Million Tracks

Disclaimer: The details in this post have been derived from the articles shared online by the Spotify Engineering Team. All credit for the technical details goes to the Spotify Engineering Team. The links to the original articles and sources are present in the references section at the end of the post. We’ve attempted to analyze the details and provide our input about them. If you find any inaccuracies or omissions, please leave a comment, and we will do our best to fix them.

Spotify applies machine learning across its catalog to support key features.

One set of models assigns tracks and albums to the correct artist pages, handling cases where metadata is missing, inconsistent, or duplicated. Another set analyzes podcasts to detect platform policy violations. These models review audio, video, and metadata to flag restricted content before it reaches listeners.

All of these activities depend on large volumes of high-quality annotations. These annotations act as the ground truth for model training and evaluation. Without them, model accuracy drops, feedback loops fail, and feature development slows down. As the number of use cases increased, the existing annotation workflows at Spotify became a bottleneck. Each team built its own isolated tools, managed its own reviewers, and shipped data through manual processes that neither scaled nor integrated with machine learning pipelines.

The problem was structural. Annotation was treated as an isolated task instead of a core part of the machine learning workflow. There was no shared tooling, no centralized workforce model, and no infrastructure to automate annotation at scale.

This article explains how Spotify addressed these challenges by building an annotation platform designed to scale with its machine learning needs. It covers:

How human expertise was organized into a structured and scalable workflow.

What tools were built to support complex annotation tasks across different data types.

How the infrastructure was designed to integrate annotation directly into ML pipelines.

The trade-offs involved in balancing quality, cost, and speed.

Moving from Manual Workflow to Scalable Annotation

The starting point was a straightforward machine learning (ML) classification task. The team needed annotations to evaluate model predictions and improve training quality, so they built a minimal pipeline to collect them.

They began by sampling model outputs and serving them to human annotators through simple scripts. Each annotation was reviewed, captured, and passed back into the system. The annotated data was then integrated directly into model training and evaluation workflows. There was no full-fledged platform yet, just a focused attempt to connect annotations to something real and measurable.
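The sample-annotate-evaluate loop described above can be sketched in a few lines. This is a minimal illustration, not Spotify's script: the `Prediction` type, the uniform sampling strategy, and the hard-coded human labels are all assumptions made for the example.

```python
import random
from dataclasses import dataclass


@dataclass
class Prediction:
    item_id: str
    label: str  # label predicted by the model


def sample_for_annotation(predictions, k, seed=0):
    """Draw a reproducible uniform sample of model outputs to send to annotators."""
    rng = random.Random(seed)
    return rng.sample(predictions, min(k, len(predictions)))


def model_accuracy(sampled, human_labels):
    """Share of sampled predictions that match the human label (the ground truth)."""
    judged = [p for p in sampled if p.item_id in human_labels]
    if not judged:
        return 0.0
    correct = sum(p.label == human_labels[p.item_id] for p in judged)
    return correct / len(judged)


# Hypothetical model outputs for 100 items.
predictions = [
    Prediction(f"item-{i}", "explicit" if i % 2 else "clean") for i in range(100)
]
batch = sample_for_annotation(predictions, k=10)

# Stand-in for the human step: annotators agree with the model on all but one item.
human_labels = {p.item_id: p.label for p in batch}
human_labels[batch[0].item_id] = "needs_review"

accuracy = model_accuracy(batch, human_labels)
print(f"accuracy on annotated sample: {accuracy:.2f}")
```

The point of the sketch is the shape of the loop: annotations come back keyed by item, so they can feed evaluation (as here) or be appended to a training set without extra glue.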

Even with this basic setup, the results were significant:

The annotation corpus grew by a factor of ten.

Annotator throughput tripled compared to previous manual efforts.

This early success wasn’t just about volume. It showed that when annotation is directly tied into the model lifecycle, feedback loops become more useful and productivity improves. The outcome was enough to justify further investment.

From here, the focus shifted from running isolated tasks to building a dedicated platform that could generalize the workflow and support many ML use cases in parallel.

Platform Architecture

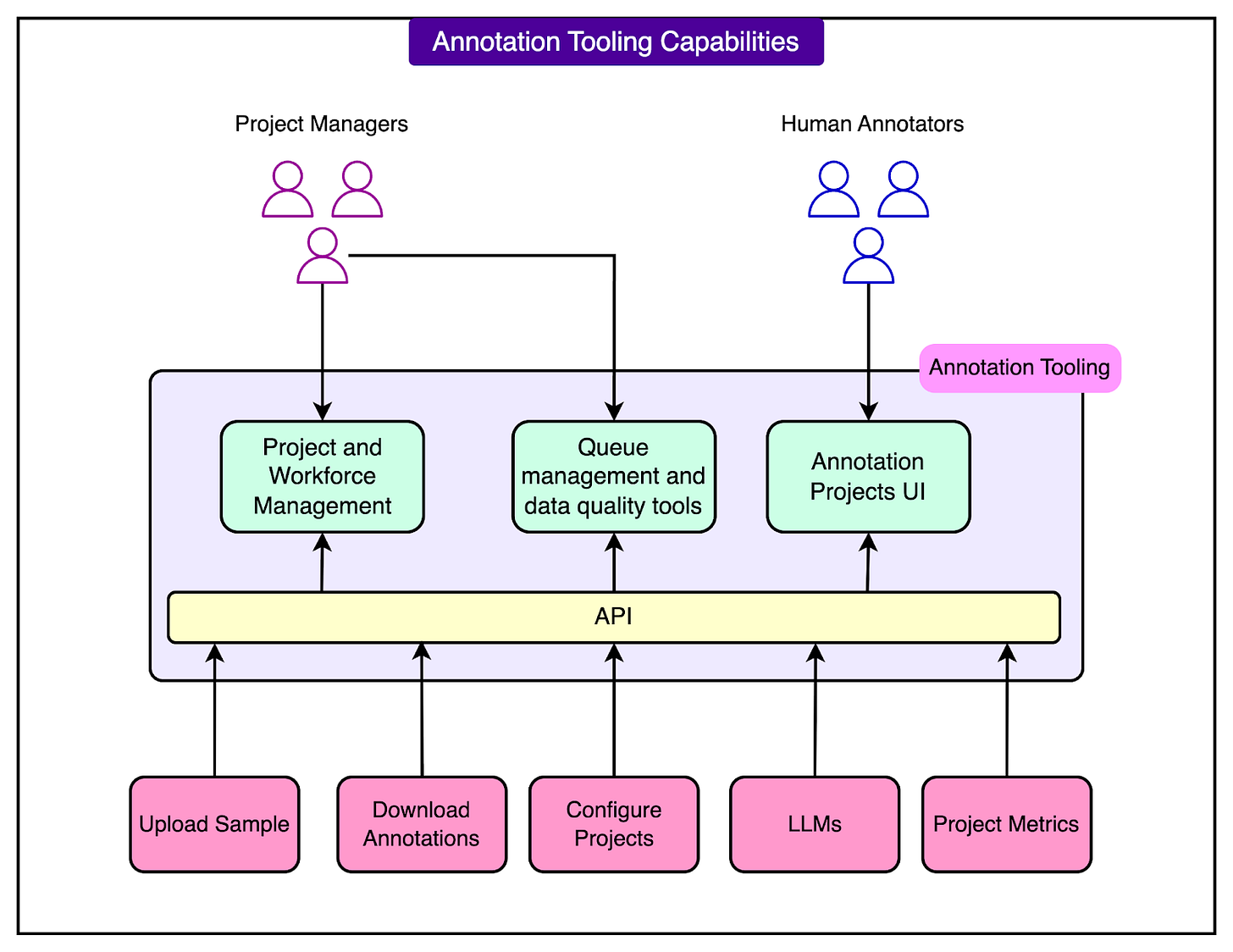

The overall platform architecture consists of three pillars. See the diagram below for reference:

Let’s look at each pillar in more detail.

1 - Scaling Human Expertise

To support large-scale annotation work, Spotify first focused on organizing its human annotation resources. Instead of treating annotators as a generic pool, the team defined clear roles with distinct responsibilities and escalation paths.

The annotation workforce was structured into three levels:

Core annotators handled the initial pass across most annotation cases. These were domain experts trained to apply consistent standards across large datasets.

Quality analysts acted as the escalation layer for complex or ambiguous cases. These were top-level reviewers who ensured that edge cases were resolved with precision, especially when annotations involved subjectivity or context-specific judgment.

Project managers worked across teams to coordinate annotation efforts. They connected ML engineers and product stakeholders with the annotation workforce, maintained training materials, gathered feedback, and adjusted data collection strategies as projects evolved.

In parallel with the human effort, Spotify also developed a configurable system powered by large language models.

This system operates in conjunction with human annotators and is designed to generate high-quality labels for cases that follow predictable patterns. It is not a full replacement but a complement that handles clear-cut examples, allowing humans to focus on harder problems.

See the diagram below:

This hybrid model significantly increased annotation throughput. By assigning the right cases to the right annotator (human or machine), Spotify was able to expand its dataset coverage at a lower cost and with higher consistency.
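One way to picture the human-or-machine routing is a confidence gate. The source does not say how Spotify decides which cases are clear-cut, so the confidence score, the `0.9` threshold, and the `fake_llm` stand-in below are assumptions for illustration only.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Proposal:
    label: str
    confidence: float  # assumed: the LLM-based system reports a score in [0, 1]


def route(items, propose: Callable[[str], Proposal], threshold=0.9):
    """Auto-accept confident machine labels; queue everything else for humans."""
    auto_labels, human_queue = {}, []
    for item in items:
        proposal = propose(item)
        if proposal.confidence >= threshold:
            auto_labels[item] = proposal.label
        else:
            human_queue.append(item)
    return auto_labels, human_queue


def fake_llm(item: str) -> Proposal:
    # Hypothetical stand-in for a real model call.
    if "interview" in item:
        return Proposal("speech", 0.97)
    return Proposal("music", 0.55)


auto_labels, human_queue = route(["interview-ep1", "live-set-42"], fake_llm)
print(auto_labels)
print(human_queue)
```

Raising the threshold sends more work to humans and lowers the risk of silent machine errors; lowering it does the opposite. That dial is the quality, cost, and speed trade-off in miniature.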

2 - Building Annotation Tooling for Complex Tasks

As annotation needs grew beyond simple classification, Spotify expanded its tooling to support a wide range of complex, multimodal tasks.

Early projects focused on basic question-answer formats, but new use cases required more flexible and interactive workflows. These included:

Annotating specific segments in audio and video streams.

Handling natural language processing tasks involving context-sensitive labeling.

Supporting multi-label classification where multiple attributes must be tagged per item.

To support these varied requirements, the team invested in several core areas of tooling:

Custom interfaces were built to allow fast setup of new annotation tasks. This made it possible to launch new projects without writing custom code for each use case.

Back-office systems managed user access, assigned annotation tasks across multiple experts, and tracked the status of each project. This was critical for scaling to dozens of projects running simultaneously.

Project dashboards were introduced to give teams real-time visibility into key metrics such as:

Completion rates for each task.

Total annotation volume.

Per-annotator productivity.
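As a rough sketch, the three dashboard metrics above can be derived from a flat list of task records. The `Task` shape is hypothetical, and per-annotator productivity is approximated here by a simple count of completed tasks.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional


@dataclass
class Task:
    task_id: str
    annotator: Optional[str] = None  # stays None until someone completes the task


def project_metrics(tasks):
    """Compute completion rate, total volume, and completed tasks per annotator."""
    done = [t for t in tasks if t.annotator is not None]
    return {
        "completion_rate": len(done) / len(tasks) if tasks else 0.0,
        "total_annotations": len(done),
        "per_annotator": dict(Counter(t.annotator for t in done)),
    }


tasks = [Task("t1", "ana"), Task("t2", "ana"), Task("t3", "ben"), Task("t4")]
metrics = project_metrics(tasks)
print(metrics)
```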

See the diagram below for annotation tooling capabilities:

In cases where annotation tasks involved subjective interpretation or fine-grained distinctions, such as identifying background music layered into a podcast, different experts could produce conflicting results. To handle this, the system computed an agreement score across annotators. Items with low agreement were automatically escalated to the quality analysts for resolution.
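The source does not state which agreement metric Spotify uses. The sketch below assumes the simplest option, the fraction of annotators who chose the most common label, together with a hypothetical `0.75` cut-off for escalation. Chance-corrected measures such as Cohen's kappa or Krippendorff's alpha are common alternatives.

```python
from collections import Counter


def agreement_score(labels):
    """Fraction of annotators who chose the most common label for an item."""
    if not labels:
        raise ValueError("an item needs at least one label")
    _, top_count = Counter(labels).most_common(1)[0]
    return top_count / len(labels)


def triage(annotations, min_agreement=0.75):
    """Accept the majority label when agreement is high; otherwise escalate."""
    accepted, escalated = {}, []
    for item_id, labels in annotations.items():
        if agreement_score(labels) >= min_agreement:
            accepted[item_id] = Counter(labels).most_common(1)[0][0]
        else:
            escalated.append(item_id)  # goes to a quality analyst
    return accepted, escalated


# Hypothetical labels from four annotators on two podcast episodes.
annotations = {
    "ep-1": ["music", "music", "music", "music"],
    "ep-2": ["music", "no_music", "music", "no_music"],
}
accepted, escalated = triage(annotations)
print(accepted)
print(escalated)
```

Here `ep-1` has full agreement and is accepted as `music`, while `ep-2` splits evenly (score 0.5) and is escalated.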

This structure allowed multiple annotation projects to run in parallel with consistent oversight, predictable output quality, and tight feedback loops between engineers, annotators, and reviewers. It turned what was once a manual process into a managed and observable workflow.

3 - Foundational Infrastructure and Integration

To support annotation at Spotify scale, the platform infrastructure was designed to be flexible and tool-agnostic. No single tool can serve all annotation needs, so the team focused on building the right abstractions. These abstractions make it possible to integrate a variety of tools depending on the task, while keeping the core system consistent and maintainable.

The foundation includes:

Generic APIs and data models that support multiple types of annotation tools. This allows teams to choose the right interface for each task without being locked into a specific implementation.

Interoperable interfaces that let engineers swap tools, layer them together, or use specialized tools for audio, video, text, or metadata tasks without rewriting pipelines.
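To make the idea of a tool-agnostic abstraction concrete, here is a minimal sketch: a shared data model plus a small interface that any annotation tool can implement. All names (`AnnotationTask`, `AnnotationTool`, `InMemoryTool`, the `example://` URIs) are hypothetical and not Spotify's actual API.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass(frozen=True)
class AnnotationTask:
    task_id: str
    modality: str  # e.g. "audio", "video", "text", "metadata"
    payload_uri: str
    question: str


@dataclass(frozen=True)
class Annotation:
    task_id: str
    source: str  # which tool produced the label
    label: str


class AnnotationTool(Protocol):
    """Anything that can accept tasks and hand back annotations."""

    def submit(self, task: AnnotationTask) -> None: ...
    def results(self) -> list[Annotation]: ...


class InMemoryTool:
    """Toy backend that answers every task with a fixed label."""

    def __init__(self, name: str, answer: str) -> None:
        self.name, self.answer = name, answer
        self._tasks: list[AnnotationTask] = []

    def submit(self, task: AnnotationTask) -> None:
        self._tasks.append(task)

    def results(self) -> list[Annotation]:
        return [Annotation(t.task_id, self.name, self.answer) for t in self._tasks]


def run_project(tasks, tools: dict[str, AnnotationTool]) -> list[Annotation]:
    """Dispatch each task to the tool registered for its modality."""
    for task in tasks:
        tools[task.modality].submit(task)
    collected: list[Annotation] = []
    for tool in tools.values():
        collected.extend(tool.results())
    return collected


tools = {
    "audio": InMemoryTool("audio-ui", "music"),
    "text": InMemoryTool("text-ui", "positive"),
}
tasks = [
    AnnotationTask("ep-1", "audio", "example://ep-1", "Is there background music?"),
    AnnotationTask("rev-9", "text", "example://rev-9", "What is the sentiment?"),
]
annotations = run_project(tasks, tools)
print(annotations)
```

Because `run_project` only depends on the `AnnotationTool` interface, swapping the audio tool for a different implementation means changing one entry in `tools`, not the pipeline.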

See the diagram below:

Integration was built across two levels of ML development:

For early-stage and experimental work, lightweight command-line tools and simple UIs were created. These allow teams to run ad hoc annotation projects with minimal overhead.

For production-grade workflows, the platform connects directly to Spotify’s internal batch orchestration systems and workflow infrastructure. This supports large-scale, long-running annotation jobs that need reliability, tracking, and throughput guarantees.

The result is a system that supports both fast iteration in research environments and stable operation in production pipelines.

Engineers can move between these modes without changing how they define tasks or access results. The infrastructure sits behind the tooling, but it is what allows the annotation platform to scale efficiently across diverse use cases.
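A small sketch of what "same task definition, different execution mode" could look like follows. The `TaskSpec` type and both runner functions are assumptions for illustration. In a real setup the batch runner would hand each chunk to an orchestration system instead of running it inline.

```python
from dataclasses import dataclass
from typing import Callable, Iterator


@dataclass(frozen=True)
class TaskSpec:
    name: str
    question: str
    labels: tuple[str, ...]


# A labeler is anything (human UI, script, model) that maps an item to a label.
Labeler = Callable[[TaskSpec, str], str]


def run_adhoc(spec: TaskSpec, items: list[str], labeler: Labeler) -> dict[str, str]:
    """Experimental mode: label everything immediately in one pass."""
    return {item: labeler(spec, item) for item in items}


def chunked(items: list[str], size: int) -> Iterator[list[str]]:
    for start in range(0, len(items), size):
        yield items[start:start + size]


def run_batch(spec: TaskSpec, items: list[str], labeler: Labeler,
              batch_size: int = 2) -> dict[str, str]:
    """Production-style mode: process fixed-size batches (inline here)."""
    results: dict[str, str] = {}
    for batch in chunked(items, batch_size):
        results.update(run_adhoc(spec, batch, labeler))
    return results


spec = TaskSpec("explicit-check", "Is this track explicit?", ("clean", "explicit"))
items = ["track-1", "track-2", "track-3"]


def first_label(spec: TaskSpec, item: str) -> str:
    # Hypothetical labeler that always picks the first allowed label.
    return spec.labels[0]


adhoc_results = run_adhoc(spec, items, first_label)
batch_results = run_batch(spec, items, first_label)
print(adhoc_results == batch_results)
```

Both runners take the same `TaskSpec` and return results in the same shape, which is the property the paragraph above describes.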

Impact on Annotation Velocity

The shift from manual, fragmented workflows to a unified annotation platform resulted in a sharp increase in annotation throughput.

Internal metrics showed a sustained acceleration in annotation volume over time, driven by both improved tooling and more efficient workforce coordination. See the figure below that shows the rate of annotations over time.

This increase in velocity directly reduced the time required to develop and iterate on machine learning models. Teams were able to move faster across several dimensions:

Training cycles became shorter, as labeled data could be collected, reviewed, and integrated into models with fewer handoffs and delays.

Ground-truth quality improved, thanks to structured escalation paths, agreement scoring, and the ability to resolve edge cases consistently.

GenAI experimentation became more efficient since the platform supported high-volume annotation needs with less setup overhead and more reliable output.

As a result, ML teams could test hypotheses, refine models, and ship features faster. The annotation platform became a core enabler for iterative, data-driven development at scale.

Conclusion

Spotify’s annotation platform is built on a clear principle: scaling machine learning requires more than just more data or larger models.

It depends on structured, high-quality annotations delivered through systems that are efficient, adaptable, and integrated into the full model development lifecycle. Relying entirely on human labor can create bottlenecks. On the other hand, full automation without oversight can lead to quality drift. Real leverage comes from combining both, with humans providing context and judgment and automation handling volume and repeatability.

By moving from isolated workflows to a unified platform, Spotify turned annotation into a shared capability rather than a one-time cost. The implementation of standardized roles, modular tools, and consistent infrastructure allowed ML teams to build and iterate faster without rebuilding pipelines from scratch.

This approach supports fast experimentation and scaling across a wide range of use cases.

References:
