How Uber Built a Conversational AI Agent For Financial Analysis

Stream Smarter with Amazon S3 + Redpanda (Sponsored)

Join us live on November 12 for a Redpanda Tech Talk with AWS experts exploring how to connect streaming and object storage for real-time, scalable data pipelines. Redpanda’s Chandler Mayo and AWS Partner Solutions Architect Dr. Art Sedighi will show how to move data seamlessly between Redpanda Serverless and Amazon S3 — no custom code required. Learn practical patterns for ingesting, exporting, and analyzing data across your streaming and storage layers. Whether you’re building event-driven apps or analytics pipelines, this session will help you optimize for performance, cost, and reliability.

Disclaimer: The details in this post have been derived from the details shared online by the Uber Engineering Team. All credit for the technical details goes to the Uber Engineering Team. The links to the original articles and sources are present in the references section at the end of the post. We’ve attempted to analyze the details and provide our input about them. If you find any inaccuracies or omissions, please leave a comment, and we will do our best to fix them.

For a company operating at Uber’s scale, financial decision-making depends on how quickly and accurately teams can access critical data. Every minute spent waiting for reports can delay decisions that impact millions of transactions worldwide.

Uber Engineering Team recognized that their finance teams were spending a significant amount of time just trying to retrieve the right data before they could even begin their analysis.

Historically, financial analysts had to log into multiple platforms like Presto, IBM Planning Analytics, Oracle EPM, and Google Docs to find relevant numbers. This fragmented process created serious bottlenecks. Analysts often had to manually search across different systems, which increased the risk of using outdated or inconsistent data. If they wanted to retrieve more complex information, they had to write SQL queries. This required deep knowledge of data structures and constant reference to documentation, which made the process slow and prone to errors.

In many cases, analysts submitted requests to the data science team to get the required data, which introduced additional delays of several hours or even days. By the time the reports were ready, valuable time had already been lost.

For a fast-moving company, this delay in accessing insights can limit the ability to make informed, real-time financial decisions.

Uber Engineering Team set out to solve this. Their goal was clear: build a secure and real-time financial data access layer that could live directly inside the daily workflow of finance teams. Instead of navigating multiple platforms or writing SQL queries, analysts should be able to ask questions in plain language and get answers in seconds.

This vision led to the creation of Finch, Uber’s conversational AI data agent. Finch is designed to bring financial intelligence directly into Slack, the communication platform already used by the company’s teams. In this article, we will look at how Uber built Finch and how it works under the hood.

What is Finch?

To solve the long-standing problem of slow and complex data access, the Uber Engineering Team built Finch, a conversational AI data agent that lives directly inside Slack. Instead of logging into multiple systems or writing long SQL queries, finance team members can simply type a question in natural language. Finch then takes care of the rest.

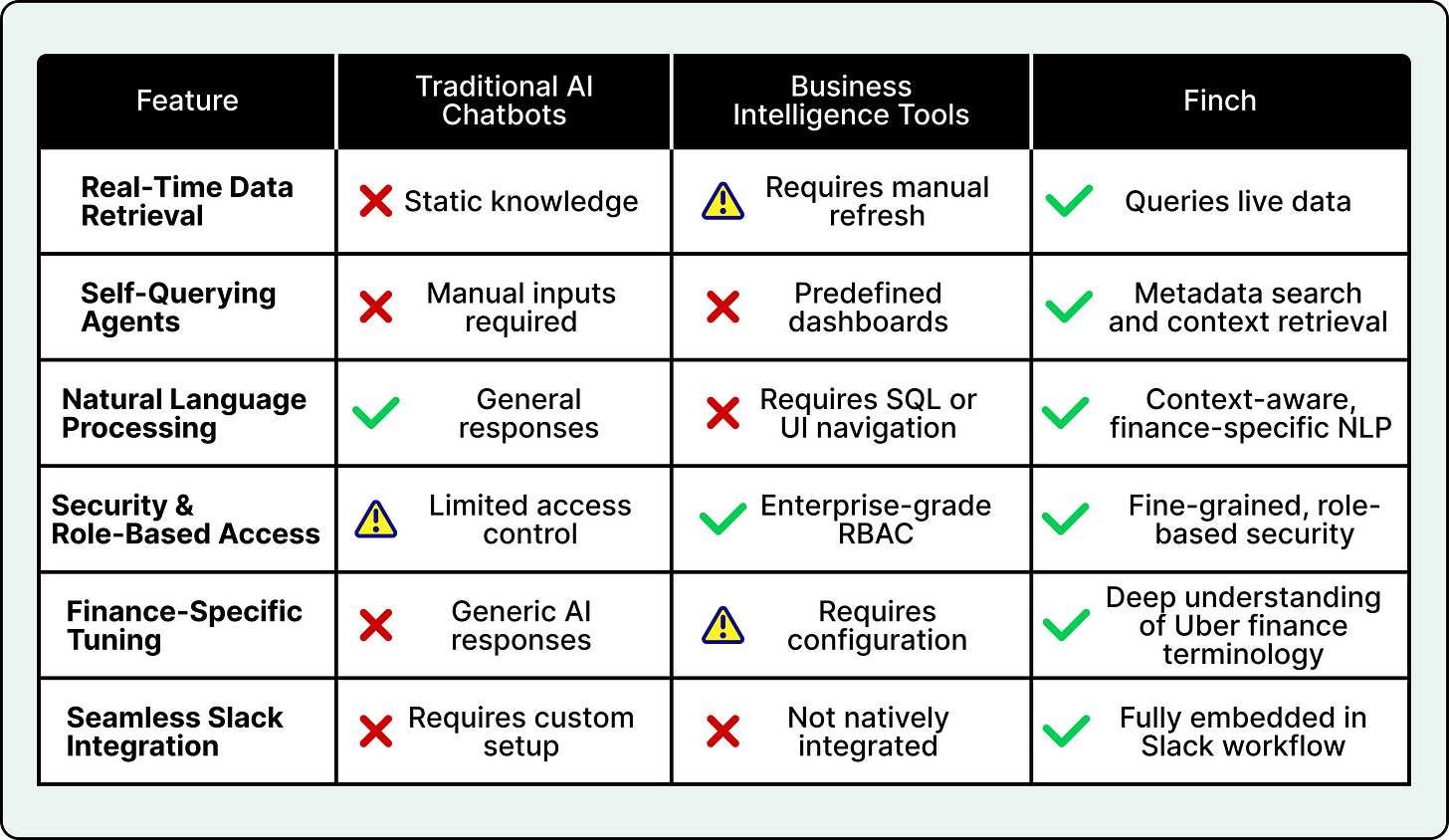

See the comparison table below that shows how Finch stands out from other AI finance tools.

At its core, Finch is designed to make financial data retrieval feel as easy as sending a message to a colleague. When a user types a question, Finch translates the request into a structured SQL query behind the scenes. It identifies the right data source, applies the correct filters, checks user permissions, and retrieves the latest financial data in real time.

Security is built into this process through role-based access controls (RBAC). This ensures that only authorized users can access sensitive financial information. Once Finch retrieves the results, it sends the response back to Slack in a clean, readable format. If the data set is large, Finch can automatically export it to Google Sheets so that users can work with it directly without any extra steps.

For example, the user might ask: “What was the GB value in US&C in Q4 2024?”

Finch quickly finds the relevant table, builds the appropriate SQL query, executes it, and returns the result right inside Slack. The user gets a clear, ready-to-use answer in seconds instead of spending hours searching, writing queries, or waiting for another team.

Finch Architecture Overview

The design of Finch is centered on three major goals: modularity, security, and accuracy in how large language models generate and execute queries.

Uber Engineering Team built the system so that each part of the architecture can work independently while still fitting smoothly into the overall data pipeline. This makes Finch easier to scale, maintain, and improve over time.

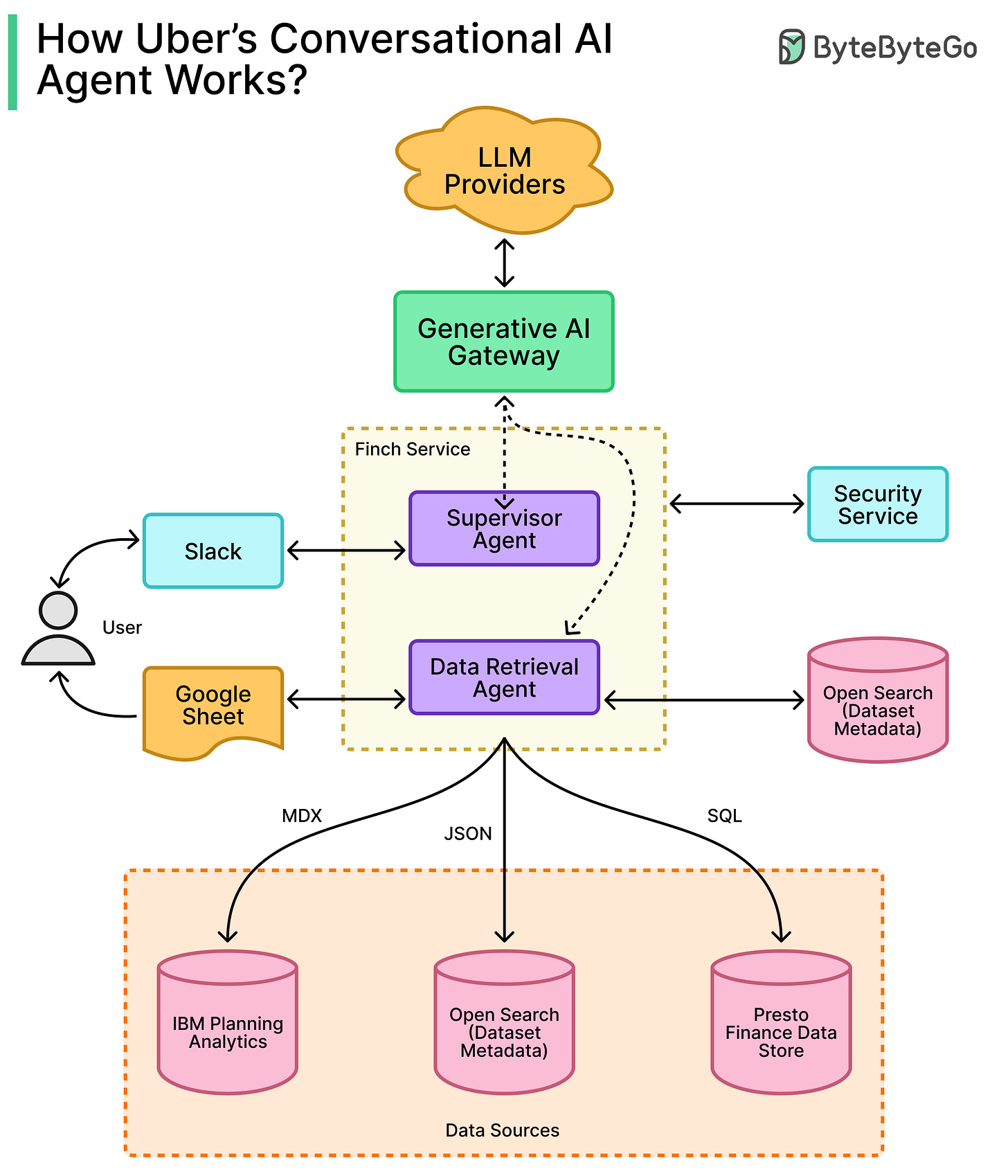

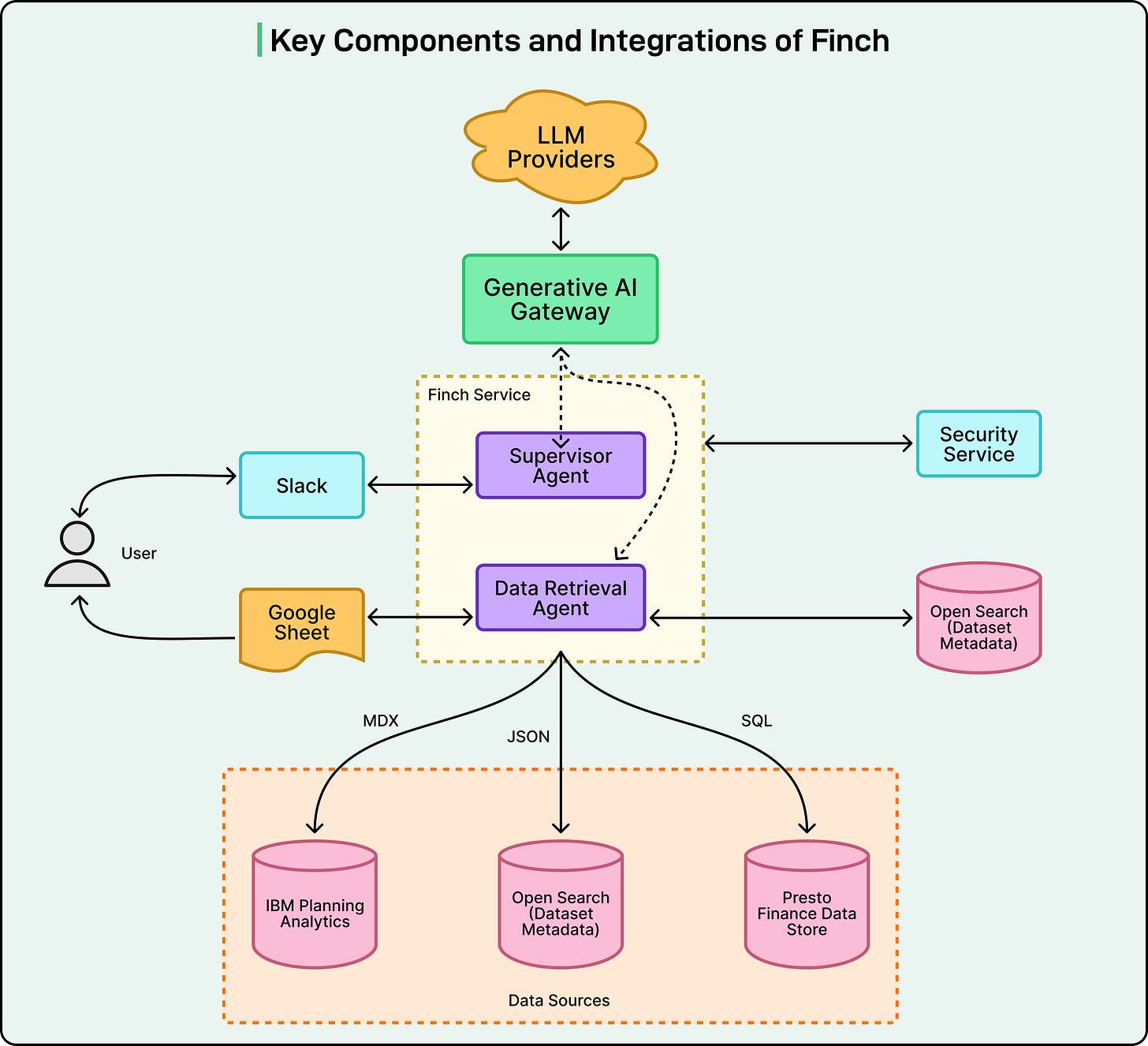

The diagram below shows the key components of Finch:

At the foundation of Finch is its data layer. Uber uses curated, single-table data marts that store key financial and operational metrics. Instead of allowing queries to run on large, complex databases with many joins, Finch works with simplified tables that are optimized for speed and clarity.

To make Finch understand natural language better, the Uber Engineering Team built a semantic layer on top of these data marts. This layer uses OpenSearch to store natural language aliases for both column names and their values. For example, if someone types “US&C,” Finch can map that phrase to the correct column and value in the database. This allows the model to do fuzzy matching, meaning it can correctly interpret slightly different ways of asking the same question. This improves the accuracy of WHERE clauses in the SQL queries Finch generates, which is often a weak spot in many LLM-based data agents.

Finch’s architecture combines several key technologies that work together to make the experience seamless for finance teams.

Generative AI Gateway: This is Uber’s internal infrastructure for accessing multiple large language models, both self-hosted and third-party. It allows the team to swap models or upgrade them without changing the overall system.

LangChain and LangGraph: This framework is used to orchestrate specialized agents inside Finch, such as the SQL Writer Agent and the Supervisor Agent. Each agent has a specific role, and LangGraph coordinates how these agents work together in sequence to understand a question, plan the query, and return the result.

OpenSearch: This is the backbone of Finch’s metadata indexing. It stores the mapping between natural language terms and the actual database schema. This makes Finch much more reliable when handling real-world language variations.

Slack SDK and Slack AI Assistant APIs: These enable Finch to connect directly with Slack. The APIs allow the system to update the user with real-time status messages, offer suggested prompts, and provide a smooth, chat-like interface. This means analysts can interact with Finch as if they were talking to a teammate.

Google Sheets Exporter: For larger datasets, Finch can automatically export results to Google Sheets. This removes the need to copy data manually and allows users to analyze results with familiar spreadsheet tools.

Finch Agentic Workflow

One of the most important elements of Finch is how its different components work together to handle a user’s query.

Uber Engineering Team designed Finch to operate through a structured orchestration pipeline, where each agent in the system has a clear role:

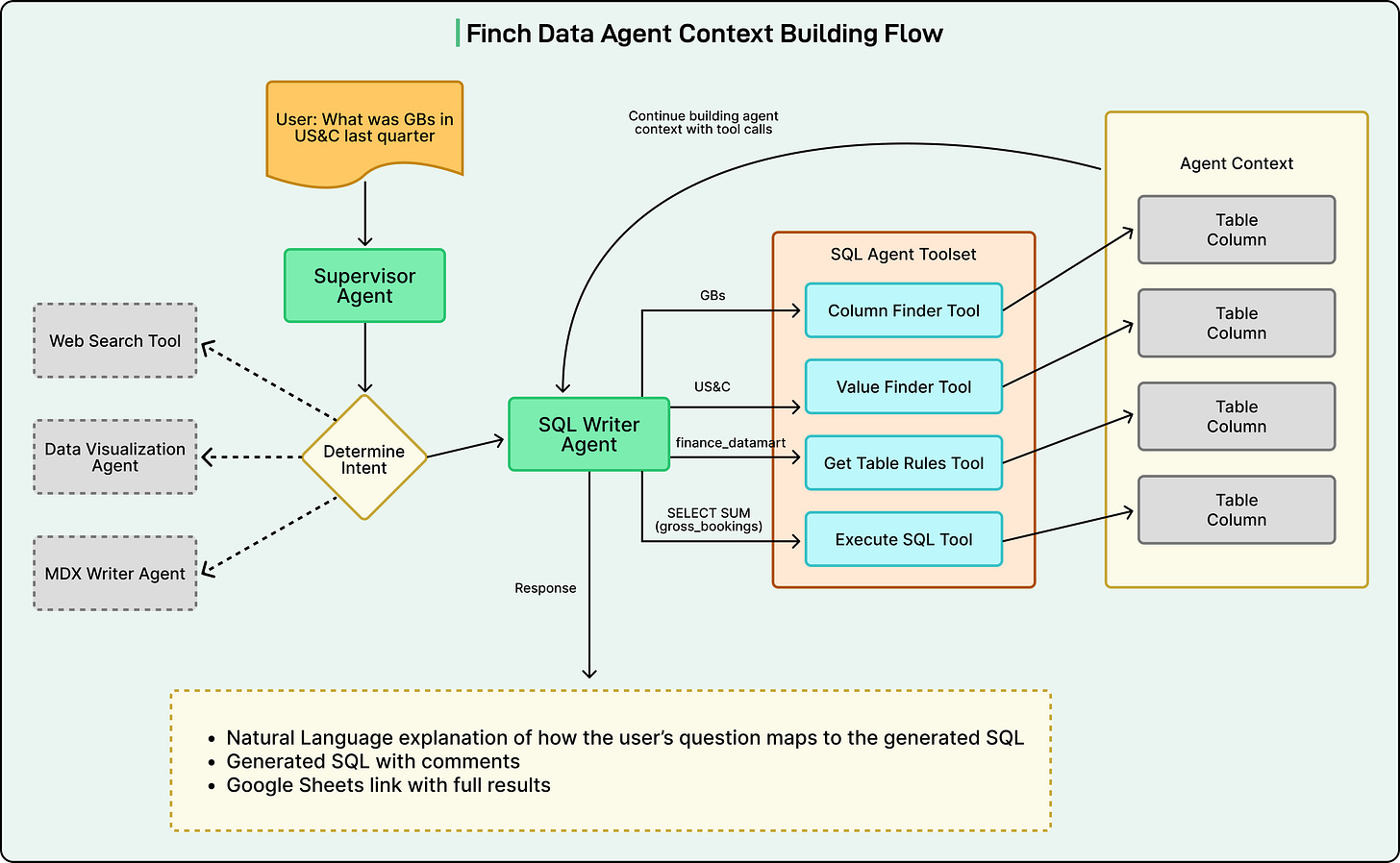

The process starts when a user enters a question in Slack. This can be something as simple as “What were the GB values for US&C in Q4 2024?”

Once the message is sent, the Supervisor Agent receives the input. Its job is to figure out what type of request has been made and route it to the right sub-agent. For example, if it’s a data retrieval request, the Supervisor Agent will send the task to the SQL Writer Agent.

After routing, the SQL Writer Agent fetches metadata from OpenSearch, which contains mappings between natural language terms and actual database columns or values. This step is what allows Finch to correctly interpret terms like “US&C” or “gross bookings” without the user needing to know the exact column names or data structure.

Next, Finch moves into query construction. The SQL Writer Agent uses the metadata to build the correct SQL query against the curated single-table data marts. It ensures that the right filters are applied and the correct data source is used. Once the query is ready, Finch executes it.

While this process runs in the background, Finch provides live feedback through Slack. The Slack callback handler updates the user in real time, showing messages like “identifying data source,” “building SQL,” or “executing query.” This gives users visibility into what Finch is doing at each step.

Finally, once the query is executed, the results are returned directly to Slack in a structured, easy-to-read format. If the data set is too large, Finch automatically exports it to Google Sheets and shares the link.

Users can also ask follow-up questions like “Compare to Q4 2023,” and Finch will refine the context of the conversation to deliver updated results.

See the diagram below that shows the data agent’s context building flow:

Finch’s Accuracy and Performance Evaluation

For Finch to be useful at Uber’s scale, it must be both accurate and fast. A conversational data agent that delivers wrong or slow answers would quickly lose the trust of financial analysts.

Uber Engineering Team built Finch with multiple layers of testing and optimization to ensure it performs consistently, even when the system grows more complex. There were two main areas:

Continuous Evaluation

Uber continuously evaluates Finch to make sure each part of the system works as expected. Here are the key evaluation steps:

This starts with sub-agent evaluation, where agents like the SQL Writer and Document Reader are tested against “golden queries.” These golden queries are the correct, trusted outputs for a set of common use cases. By comparing Finch’s output to these expected answers, the team can detect any drop in accuracy.

Another key step is checking the Supervisor Agent routing accuracy. When users ask questions, the Supervisor Agent decides which sub-agent should handle the request. Uber tests this decision-making process to catch issues where similar queries might be routed incorrectly, such as confusing data retrieval tasks with document lookup tasks.

The system also undergoes end-to-end validation, which involves simulating real-world queries to ensure the full pipeline works correctly from input to output. This helps catch problems that might not appear when testing components in isolation.

Finally, regression testing is done by re-running historical queries to see if Finch still returns the same correct results. This allows the team to detect accuracy drift before any model or prompt updates are deployed.

Performance Optimization

Finch is built to deliver answers quickly, even when handling a large volume of queries.

Uber Engineering Team optimized the system to minimize database load by making SQL queries more efficient. Instead of relying on one long, blocking process, Finch uses multiple sub-agents that can work in parallel, reducing latency.

To make responses even faster, Finch pre-fetches frequently used metrics. This means that some common data is already cached or readily accessible before users even ask for it, leading to near-instant responses in many cases.

Conclusion

Finch represents a major step in how financial teams at Uber access and interact with data.

Instead of navigating multiple platforms, writing complex SQL queries, or waiting for data requests to be fulfilled, analysts can now get real-time answers inside Slack using natural language. By combining curated financial data marts, large language models, metadata enrichment, and secure system design, the Uber Engineering Team has built a solution that removes layers of friction from financial reporting and analysis.

The architecture of Finch shows a thoughtful balance between innovation and practicality. It uses a modular agentic workflow to orchestrate different specialized agents, ensures accuracy through continuous evaluation and testing, and delivers low latency through smart performance optimizations. The result is a system that not only works reliably at scale but also fits seamlessly into the daily workflow of Uber’s finance teams.

Looking ahead, Uber plans to expand Finch even further. The roadmap includes deeper FinTech integration to support more financial systems and workflows across the organization. For executive users like the CEO and CFO, Uber Engineering Team is introducing a human-in-the-loop validation system, where a “Request Validation” button will allow critical answers to be reviewed by subject matter experts before final approval. This will increase trust in Finch’s responses for high-stakes decisions.

The team is also working to support more user intents and specialized agents, expanding Finch beyond simple data retrieval into richer financial use cases such as forecasting, reporting, and automated analysis. As these capabilities grow, Finch will evolve from being a helpful assistant into a central intelligence layer for Uber’s financial operations.

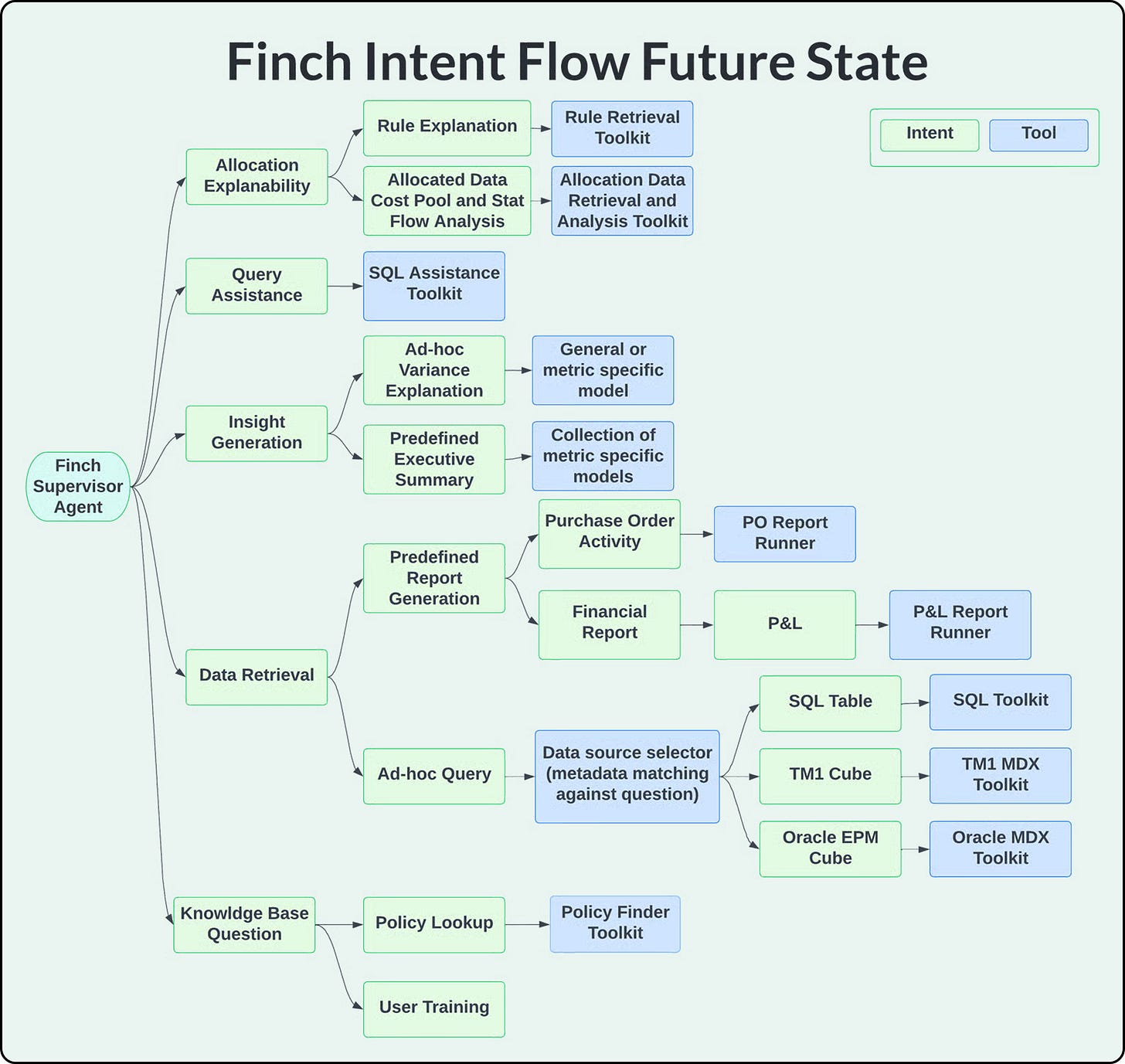

See the diagram below that shows a glimpse of Finch’s Intent Flow Future State:

References:

SPONSOR US

Get your product in front of more than 1,000,000 tech professionals.

Our newsletter puts your products and services directly in front of an audience that matters - hundreds of thousands of engineering leaders and senior engineers - who have influence over significant tech decisions and big purchases.

Space Fills Up Fast - Reserve Today

Ad spots typically sell out about 4 weeks in advance. To ensure your ad reaches this influential audience, reserve your space now by emailing sponsorship@bytebytego.com.

information is very helpful..

incredibly well written, i’ve never seen such complex information be presented in a meaningful, impactful and digestive way! i’ll be reading more!