Inside Airbnb’s AI-Powered Pipeline to Migrate Tests: Months of Work in Days

Disclaimer: The details in this post have been derived from the articles/videos shared online by the Airbnb Engineering Team. All credit for the technical details goes to the Airbnb Engineering Team. The links to the original articles and videos are present in the references section at the end of the post. We’ve attempted to analyze the details and provide our input about them. If you find any inaccuracies or omissions, please leave a comment, and we will do our best to fix them.

Code migrations are usually a slow affair. Dependencies change, frameworks evolve, and teams get stuck rewriting thousands of lines that don’t even change product behavior.

That was the situation at Airbnb.

Thousands of React test files still relied on Enzyme, a tool that hadn’t kept up with modern React patterns. The goal was clear: move everything to React Testing Library (RTL). However, with over 3,500 files in scope, the effort was projected to take well over a year of manual rewrites.

Instead, the team finished it in six weeks.

The turning point was the use of AI, specifically Large Language Models (LLMs), not just as assistants, but as core agents in an automated migration pipeline. By breaking the work into structured, per-file steps, injecting rich context into prompts, and systematically tuning feedback loops, the team transformed what looked like a long, manual slog into a fast, scalable process.

This article unpacks how that migration happened. It covers the structure of the automation pipeline, the trade-offs behind prompt engineering vs. brute-force retries, the methods used to handle complex edge cases, and the results that followed.

The Need for Migration

Enzyme, adopted in 2015, provided fine-grained access to the internal structure of React components. This approach matched earlier versions of React, where testing internal state and component hierarchy was a common pattern.

By 2020, Airbnb had shifted all new test development to React Testing Library (RTL).

RTL encourages testing components from the perspective of how users interact with them, focusing on rendered output and behavior, not implementation details. This shift reflects modern React testing practices, which prioritize maintainability and resilience to refactoring.

However, thousands of existing test files at Airbnb were still using Enzyme. Migrating them introduced several challenges:

Different testing models: Enzyme relies on accessing component internals. RTL operates at the DOM interaction level. Tests couldn’t be translated line-for-line and required structural rewrites.

Risk of coverage loss: Simply removing legacy Enzyme tests would leave significant gaps in test coverage, particularly for older components no longer under active development.

Manual effort was prohibitive: Early projections estimated over a year of engineering time to complete the migration manually, which was too costly to justify.

The migration was necessary to standardize testing across the codebase and support future React versions, but it had to be automated to be feasible.

Migration Strategy and Proof of Concept

The first indication that LLMs could handle this kind of migration came during a 2023 internal hackathon. A small team tested whether a large language model could convert Enzyme-based test files to RTL. Within days, the prototype successfully migrated hundreds of files. The results were promising in terms of accuracy as well as speed.

That early success laid the groundwork for a full-scale solution. In 2024, the engineering team formalized the approach into a scalable migration pipeline. The goal was clear: automate the transformation of thousands of test files, with minimal manual intervention, while preserving test intent and coverage.

To get there, the team broke the migration process into discrete, per-file steps that could be run independently and in parallel. Each step handled a specific task, like replacing Enzyme syntax, fixing Jest assertions, or resolving lint and TypeScript errors. When a step failed, the system invoked an LLM to rewrite the file using contextual information.

This modular structure made the pipeline easy to debug, retry, and extend. More importantly, it made it possible to run migrations across hundreds of files concurrently, accelerating throughput without sacrificing quality.
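
Because each file is an independent unit, concurrent execution can be sketched as a small worker pool. The snippet below is a minimal illustration, not Airbnb's implementation: `runWithConcurrency`, the `worker` callback, and the default `limit` are all hypothetical names and values chosen for this example.

```javascript
// Minimal sketch: run a per-file worker over many files with a concurrency cap.
// `worker` is a hypothetical async function that migrates one file.
async function runWithConcurrency(items, worker, limit = 4) {
  const results = new Array(items.length);
  let next = 0; // index of the next unclaimed item

  // Each lane repeatedly claims the next item until none are left.
  async function lane() {
    while (next < items.length) {
      const index = next++;
      try {
        results[index] = { ok: true, value: await worker(items[index]) };
      } catch (error) {
        // A failure is recorded for this file only; other files keep going.
        results[index] = { ok: false, error: String(error.message || error) };
      }
    }
  }

  const lanes = Array.from({ length: Math.min(limit, items.length) }, lane);
  await Promise.all(lanes);
  return results;
}
```

Catching errors per item is what keeps one problematic file from stopping the rest of the batch, which mirrors the isolation the per-file design is meant to provide.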

Pipeline Design and Techniques

Here are the key components of the pipeline design and the various techniques involved:

1 - Step-Based Workflow

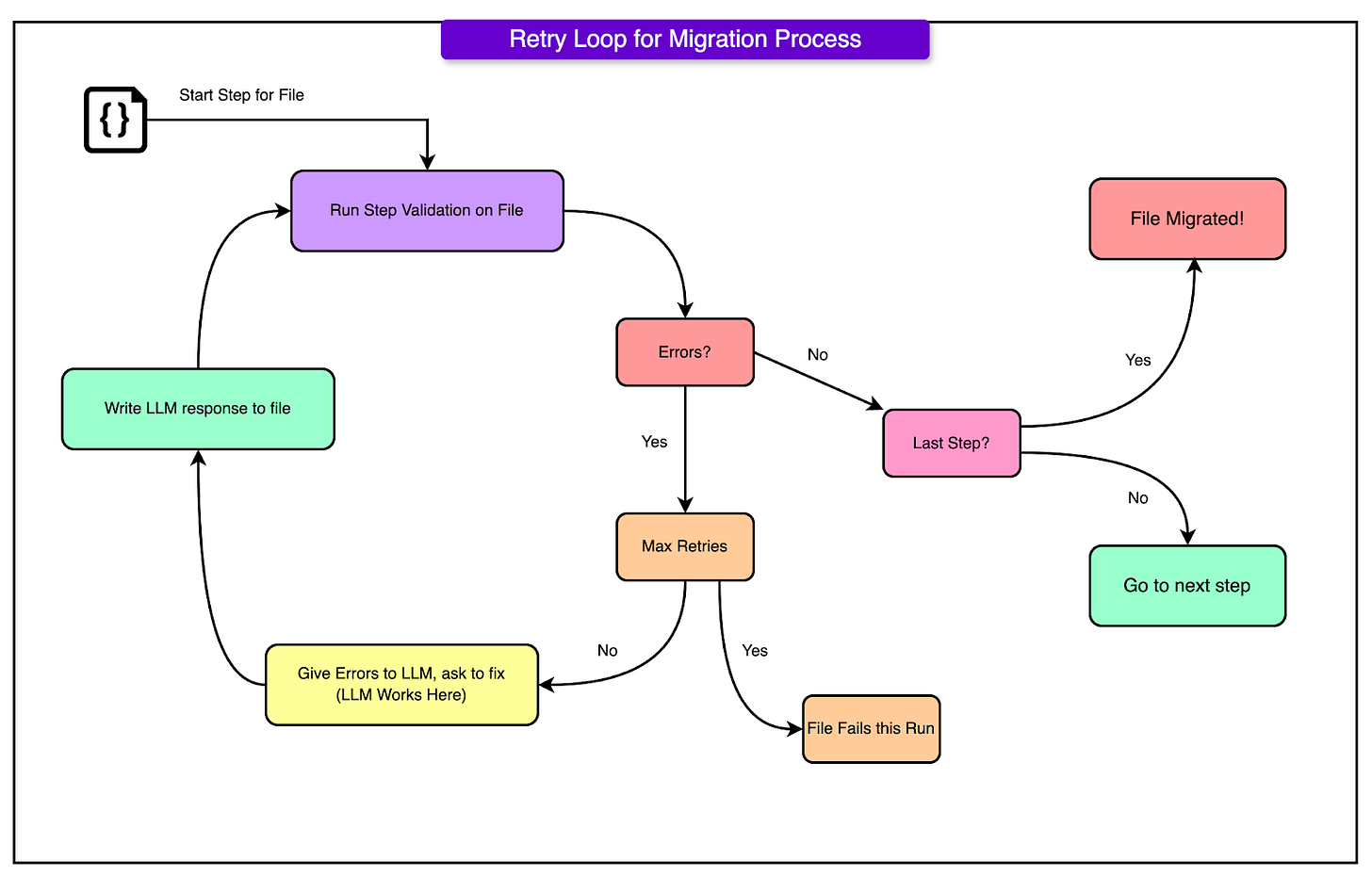

To scale migration reliably, the team treated each test file as an independent unit moving through a step-based state machine. This structure enforced validation at every stage, ensuring that transformations passed real checks before advancing.

Each file advanced through the pipeline only if the current step succeeded. If a step failed, the system paused progression, invoked an LLM to refactor the file based on the failure context, and then re-validated before continuing.

Key stages in the workflow included:

Enzyme refactor: Replaced Enzyme-specific API calls and structures with RTL equivalents.

Jest fixes: Addressed changes in assertion patterns and test setup to ensure compatibility with RTL.

Lint and TypeScript checks: Ensured the output aligned with Airbnb’s static analysis standards and type safety expectations.

Final validation: Confirmed the migrated test behaved as expected, with no regressions or syntax issues.

This approach worked for the following reasons:

State transitions made progress measurable. Every file had a clear status and history across the pipeline.

Failures were contained and explainable. A failed lint check or Jest test didn’t block the entire process, just the specific step for that file.

Parallel execution became safe and efficient. The team could run hundreds of files through the pipeline concurrently without bottlenecks or coordination overhead.

Step-specific retries became easy to implement. When errors showed up consistently at one stage, fixes could target that layer without disrupting others.

This structured approach provided a foundation for automation to succeed at scale. It also set up the necessary hooks for advanced retry logic, context injection, and real-time debugging later in the pipeline.
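
The per-file state machine described in this section can be sketched roughly as follows. This is an illustration under stated assumptions: only `fix-jest` appears as a step name in the source material, so the other step names, the `validators` map, and the `fixWithLlm` callback (a stand-in for the LLM call) are hypothetical.

```javascript
// Hypothetical step names; only 'fix-jest' is taken from the article.
const STEPS = ['fix-enzyme', 'fix-jest', 'fix-lint-and-ts', 'final-validation'];

// Advance one file through the steps in order.
// validators: { [step]: (source) => ({ ok, errors }) }
// fixWithLlm: (source, step, errors) => newSource  (stand-in for the LLM call)
function runPipeline(file, validators, fixWithLlm) {
  const history = [];
  let source = file.source;

  for (const step of STEPS) {
    let result = validators[step](source);
    if (!result.ok) {
      // Step failed: request a rewrite using the failure context, then re-validate.
      source = fixWithLlm(source, step, result.errors);
      result = validators[step](source);
    }
    history.push({ step, ok: result.ok });
    if (!result.ok) {
      // The file stops at this step; later steps are not attempted.
      return { path: file.path, status: 'failed', failedStep: step, source, history };
    }
  }
  return { path: file.path, status: 'done', failedStep: null, source, history };
}
```

The returned `failedStep` and `history` fields are the kind of per-file record that makes progress measurable and lets later tooling target a single stage.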

2 - Retry Loops and Dynamic Prompting

Initial experiments showed that deep prompt engineering only went so far.

Instead of obsessing over the perfect prompt, the team leaned into a more pragmatic solution: automated retries with incremental context updates. The idea was simple. If a migration step failed, try again with better feedback until it passed or hit a retry limit.

At each failed step, the system fed the LLM:

The latest version of the file

The validation errors from the failed attempt

This dynamic prompting approach allowed the model to refine its output based on concrete failures, not just static instructions. Instead of guessing at improvements, the model had specific reasons why the last version didn’t pass.

Each step ran inside a loop runner, which retried the operation up to a configurable maximum. This was especially effective for simple to mid-complexity files, where small tweaks (like fixing an import, renaming a variable, or adjusting test structure) often resolved the issue.
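
A loop runner of this kind might look like the sketch below. It is written synchronously for brevity, and the names `runStepWithRetries`, `validate`, `askModel`, and `maxAttempts` are assumptions made for illustration; the article does not show the actual runner.

```javascript
// Minimal sketch of a retry loop with dynamic prompting.
// validate: (source) => ({ ok, errors })
// askModel: ({ file, errors }) => newSource  (stand-in for the LLM call)
function runStepWithRetries({ source, validate, askModel, maxAttempts = 3 }) {
  let current = source;

  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    const { ok, errors } = validate(current);
    if (ok) {
      // attempts counts how many model calls were needed.
      return { ok: true, source: current, attempts: attempt - 1 };
    }
    // Feed the latest file version and its validation errors back to the model.
    current = askModel({ file: current, errors });
  }

  // Retry limit reached: report the state of the last attempt.
  const final = validate(current);
  return { ok: final.ok, source: current, attempts: maxAttempts, errors: final.errors };
}
```

Each iteration passes exactly the two inputs listed above, the latest file and the errors from the failed attempt, so the model works from concrete failures instead of a static instruction.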

This worked for the following reasons:

The feedback loop wasn’t manual. It ran automatically and cheaply at scale.

Most files didn’t need many tries. Many succeeded after one or two.

There was no need to perfectly tune the initial prompt. Each retry gave the model concrete feedback from the previous failure.

Retrying with context turned out to be a better investment than engineering the “ideal” prompt up front. It allowed the pipeline to adapt without human intervention and pushed a large portion of files through successfully with minimal effort.

3 - Rich Prompt Context

Retry loops handled the bulk of test migrations, but they started to fall short when dealing with more complex files: tests with deep indirection, custom utilities, or tightly coupled setups. These cases needed more than just brute-force retries. They needed contextual understanding.

To handle these, the team significantly expanded prompt inputs, pushing token counts into the 40,000 to 100,000 range. Instead of a minimal diff, the model received a detailed picture of the surrounding codebase, testing patterns, and architectural intent.

Each rich prompt included:

The component source code being tested

The test file targeted for migration

Any validation errors from previous failed attempts

Sibling test files from the same directory to reflect team-specific patterns

High-quality RTL examples taken from the same project

Relevant import files and utility modules

General migration guidelines outlining preferred testing practices

const prompt = [
  'Convert this Enzyme test to React Testing Library:',
  `SIBLING TESTS:\n${siblingTestFilesSourceCode}`,
  `RTL EXAMPLES:\n${reactTestingLibraryExamples}`,
  `IMPORTS:\n${nearestImportSourceCode}`,
  `COMPONENT SOURCE:\n${componentFileSourceCode}`,
  `TEST TO MIGRATE:\n${testFileSourceCode}`,
].join('\n\n');

Source: Airbnb Engineering Blog

The key insight was choosing the right context files, pulling in examples that matched the structure and logic of the file being migrated. Adding more tokens didn’t help unless those tokens carried meaningful, relevant information.

By layering rich, targeted context, the LLM could infer project-specific conventions, replicate nuanced testing styles, and generate outputs that passed validations even for the hardest edge cases. This approach bridged the final complexity gap, especially in files that reused abstractions, mocked behavior indirectly, or followed non-standard test setups.
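
The published snippet omits two of the inputs listed earlier: validation errors from previous attempts and the general migration guidelines. One way to assemble all of the listed inputs, while skipping any that are empty, is sketched below. The function name `buildPrompt`, the `ctx` field names, and the two added section labels are assumptions for illustration, not Airbnb's actual prompt format.

```javascript
// Minimal sketch: assemble a rich prompt from labeled context sections.
// Field names on `ctx` are hypothetical; empty or missing sections are skipped
// so the prompt only carries context that is actually available.
function buildPrompt(ctx) {
  const sections = [
    ['MIGRATION GUIDELINES', ctx.guidelines],
    ['SIBLING TESTS', ctx.siblingTests],
    ['RTL EXAMPLES', ctx.rtlExamples],
    ['IMPORTS', ctx.imports],
    ['COMPONENT SOURCE', ctx.componentSource],
    ['PREVIOUS VALIDATION ERRORS', ctx.validationErrors],
    ['TEST TO MIGRATE', ctx.testSource],
  ];

  const body = sections
    .filter(([, text]) => typeof text === 'string' && text.trim() !== '')
    .map(([label, text]) => `${label}:\n${text}`);

  return ['Convert this Enzyme test to React Testing Library:', ...body].join('\n\n');
}
```

Dropping empty sections is a small nod to the point made above: extra prompt material only helps when it carries relevant information.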

4 - Systematic Cleanup From 75% to 97%

The first bulk migration pass handled 75% of the test files in under four hours. That left around 900 files stuck. These were too complex for basic retries and too inconsistent for a generic fix. Handling this long tail required targeted tools and a feedback-driven cleanup loop.

Two capabilities made this possible:

Migration Status Annotations

Each file was automatically stamped with a machine-readable comment that recorded its migration progress.

These markers helped identify exactly where a file had failed, whether in the Enzyme refactor, Jest fixes, or final validation.

// MIGRATION STATUS: {"enzyme":"done","jest":{"passed":8,"failed":2}}

Source: Airbnb Engineering Blog

This gave the team visibility into patterns: common failure points, repeat offenders, and areas where LLM-generated code needed help.
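
Reading and writing such an annotation can be sketched in a few lines. The comment format follows the example above; the helper names `readStatus` and `writeStatus`, and the choice to keep the stamp on the first line of the file, are assumptions for illustration.

```javascript
const STATUS_PREFIX = '// MIGRATION STATUS: ';

// Return the parsed status object, or null if the file has no annotation.
function readStatus(source) {
  const line = source.split('\n').find((l) => l.startsWith(STATUS_PREFIX));
  return line ? JSON.parse(line.slice(STATUS_PREFIX.length)) : null;
}

// Stamp the file with a status comment, replacing any earlier stamp.
function writeStatus(source, status) {
  const stamp = STATUS_PREFIX + JSON.stringify(status);
  const rest = source.split('\n').filter((l) => !l.startsWith(STATUS_PREFIX));
  return [stamp, ...rest].join('\n');
}
```

Because the status lives in the file itself as JSON, any script can scan the codebase and tally where files are stuck without a separate tracking database.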

Step-Specific File Reruns

A CLI tool allowed engineers to reprocess subsets of files filtered by failure step and path pattern:

$ llm-bulk-migration --step=fix-jest --match=project-abc/**

Source: Airbnb Engineering Blog

This made it easy to focus on fixes without rerunning the full pipeline, which accelerated feedback and kept the scope of each rerun isolated.
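
The selection logic behind such a command could be sketched as below. The internals of `llm-bulk-migration` are not public, so this is only an assumption about how filtering by step and path pattern might work. The glob matcher is deliberately simplified (it handles `*` and `**` only), and `selectFiles` and the `failedStep` field are hypothetical.

```javascript
// Simplified glob matcher: '**' matches any characters (including '/'),
// '*' matches any characters except '/'. Real glob libraries support more.
function globToRegExp(glob) {
  const pattern = glob
    .split('**')
    .map((part) =>
      part
        .split('*')
        .map((literal) => literal.replace(/[.+?^${}()|[\]\\]/g, '\\$&'))
        .join('[^/]*')
    )
    .join('.*');
  return new RegExp(`^${pattern}$`);
}

// Pick the files that failed at a given step and whose path matches the pattern.
function selectFiles(files, { step, match }) {
  const matcher = globToRegExp(match);
  return files.filter((f) => f.failedStep === step && matcher.test(f.path));
}
```

For example, `selectFiles(files, { step: 'fix-jest', match: 'project-abc/**' })` would return only the files under `project-abc/` that are currently stuck at the Jest step.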

Structured Feedback Loop

To convert failure patterns into working migrations, the team used a tight iterative loop:

Sample 5 to 10 failing files with a shared issue

Tune prompts or scripts to address the root cause

Test the updated approach against the sample

Sweep across all similar failing files

Repeat the cycle with the next failure category

This method wasn’t theoretical. In practice, it pushed the migration from 75% to 97% completion in just four days. For the remaining ~100 files, the system had already done most of the work. LLM outputs weren’t usable as-is, but served as solid baselines. Manual cleanup on those final files wrapped up the migration in a matter of days, not months.

The takeaway was that brute force handled the bulk, but targeted iteration finished the job. Without instrumentation and repeatable tuning, the migration would have plateaued far earlier.
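
The first step of that loop, finding files that share an issue and sampling a few of them, can be approximated by grouping failures on a normalized error message. This is a rough sketch under assumptions: the normalization rules, the function names, and the shape of the `failures` records are all hypothetical and would need tuning against real error output.

```javascript
// Collapse quoted strings and numbers so similar errors share one signature.
function normalizeError(message) {
  return message
    .replace(/(['"`]).*?\1/g, '<str>')
    .replace(/\d+/g, '<n>')
    .trim();
}

// Group failing files by error signature, largest group first.
function groupFailures(failures) {
  const groups = new Map();
  for (const { path, error } of failures) {
    const key = normalizeError(error);
    if (!groups.has(key)) groups.set(key, []);
    groups.get(key).push(path);
  }
  return [...groups.entries()]
    .map(([signature, paths]) => ({ signature, paths }))
    .sort((a, b) => b.paths.length - a.paths.length);
}

// Take a small sample from one group to tune prompts or scripts against.
function sample(paths, size = 5) {
  return paths.slice(0, size);
}
```

Sorting by group size puts the most common failure category first, so each tuning cycle targets the fix that unblocks the most files.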

Conclusion

The results validated both the tooling and the strategy. The first bulk run completed 75% of the migration in under four hours, covering thousands of test files with minimal manual involvement.

Over the next four days, targeted prompt tuning and iterative retries pushed completion to 97%. The remaining ~100 files, representing the final 3%, were resolved manually using LLM-generated outputs as starting points, cutting down the time and effort typically required for handwritten migrations.

Throughout the process, the original test intent and code coverage were preserved. The transformed tests passed validation, matched behavioral expectations, and aligned with the structural patterns encouraged by RTL. Even for complex edge cases, the baseline quality of LLM-generated code reduced the manual burden to cleanup and review, not full rewrites.

In total, the entire migration was completed in six weeks, with only six engineers involved and modest LLM API usage. Compared to the original 18-month estimate for a manual migration, the savings in time and cost were substantial.

The project also highlighted where LLMs excel:

When the task involves repetitive transformations across many files.

When contextual cues from sibling files, examples, and project structure can guide generation.

When partial automation is acceptable, and post-processing can clean up the edge cases.

Airbnb now plans to extend this framework to other large-scale code transformations, such as library upgrades, testing strategy shifts, or language migrations.

The broader conclusion is clear: AI-assisted development can reduce toil, accelerate modernization, and improve consistency when structured properly, instrumented well, and paired with domain knowledge.

References:
