Kubernetes: When and How to Apply It

Welcome back! In the first part of our Kubernetes deep dive, we covered the fundamentals: Kubernetes' architecture, key components like pods and controllers, and core capabilities like networking and storage.

Now, we'll dive into the practical side of Kubernetes. You'll learn when and how to apply Kubernetes based on your application needs and team skills. We'll explore advanced features, benefits and drawbacks, use cases where Kubernetes excels, and situations where it may be overkill.

By the end, you'll have a starting roadmap for putting Kubernetes into practice safely and successfully. Let's get started!

Kubernetes' Declarative Architecture

One of Kubernetes' key strengths is its declarative architecture. With declarative APIs, you specify the desired state of your application and Kubernetes handles reconciling the actual state to match it.

The Declarative Model

For example, to deploy an application, you would create a Deployment resource (discussed in the last issue) that declares the desired state:

Use the nginx 1.16 image

Run 3 replicas

Match pods by app=my-app label
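As a minimal sketch, the three declarations above could be written as the following manifest. It is expressed here as a Python dictionary and printed as JSON, which kubectl accepts alongside YAML. The Deployment name my-app and the container name web are assumptions made for illustration; only the image, replica count and label come from the list above.

```python
import json

# Desired state for the Deployment described above.
# "my-app" (resource name) and "web" (container name) are illustrative assumptions.
deployment = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "my-app"},
    "spec": {
        "replicas": 3,  # run 3 replicas
        "selector": {"matchLabels": {"app": "my-app"}},  # match pods by label
        "template": {
            # Pod labels must satisfy the selector above.
            "metadata": {"labels": {"app": "my-app"}},
            "spec": {
                "containers": [
                    {"name": "web", "image": "nginx:1.16"}  # nginx 1.16 image
                ]
            },
        },
    },
}

# Save the output to a file and apply it, e.g. `kubectl apply -f deployment.json`.
print(json.dumps(deployment, indent=2))
```

Note that nothing in the manifest says how to start, place or restart the pods. It only states what should exist.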

Kubernetes then handles all the underlying details of actually deploying and scaling your app based on your declared spec.

This is different from an imperative approach that would require step-by-step commands to deploy and update.
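To make the contrast concrete, here is a toy sketch of the reconciliation idea behind the declarative model. It is not how any real Kubernetes controller is implemented; the function name reconcile, the pod names and the action strings are all hypothetical. The point is only that the caller states a desired replica count and the loop works out the steps.

```python
from typing import List


def reconcile(desired_replicas: int, running_pods: List[str]) -> List[str]:
    """Return the actions needed to move the actual state to the desired state."""
    actions: List[str] = []
    diff = desired_replicas - len(running_pods)
    if diff > 0:
        # Too few pods: create the missing ones.
        actions += ["create pod"] * diff
    elif diff < 0:
        # Too many pods: delete the surplus, taking pods from the end of the list.
        actions += [f"delete {name}" for name in running_pods[diff:]]
    return actions


# One pod is running but three are wanted, so two creations are needed.
print(reconcile(3, ["pod-a"]))
# State already matches, so there is nothing to do.
print(reconcile(3, ["pod-a", "pod-b", "pod-c"]))
# Scaling down from three to one removes the last two pods.
print(reconcile(1, ["pod-a", "pod-b", "pod-c"]))
```

With an imperative approach, you would have to work out and issue those create and delete steps yourself every time the state drifted.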

Custom Resource Definition

A key benefit of this architecture is extensibility. Developers can register new resource types through Custom Resource Definitions (CRDs), and the API server then serves them through the same declarative API as built-in resources. No modifications to Kubernetes itself are needed. A CRD on its own only stores objects; a custom controller supplies the behavior by watching those objects and acting on them.

Here is a simple example of a Custom Resource Definition:
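A minimal sketch of such a definition, written as a Python dictionary and printed as JSON. The group mycompany.com, version v1 and kind App follow the description in this section; the schema fields image and replicas are assumptions added for illustration.

```python
import json

# CustomResourceDefinition for a hypothetical "App" resource.
# The spec fields "image" and "replicas" are illustrative assumptions.
crd = {
    "apiVersion": "apiextensions.k8s.io/v1",
    "kind": "CustomResourceDefinition",
    # The name must be "<plural>.<group>".
    "metadata": {"name": "apps.mycompany.com"},
    "spec": {
        "group": "mycompany.com",
        "scope": "Namespaced",
        "names": {"plural": "apps", "singular": "app", "kind": "App"},
        "versions": [
            {
                "name": "v1",
                "served": True,   # expose this version through the API
                "storage": True,  # persist objects in this version
                "schema": {
                    "openAPIV3Schema": {
                        "type": "object",
                        "properties": {
                            "spec": {
                                "type": "object",
                                "properties": {
                                    "image": {"type": "string"},
                                    "replicas": {"type": "integer"},
                                },
                            }
                        },
                    }
                },
            }
        ],
    },
}

print(json.dumps(crd, indent=2))
```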

This defines a new App resource under mycompany.com/v1. It could be used like:
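A hedged sketch of an App object follows, again as a Python dictionary printed as JSON. The object name my-app and the spec fields image and replicas are hypothetical; the source only fixes the kind App and the mycompany.com/v1 API version.

```python
import json

# An instance of the hypothetical App custom resource.
# The name and the spec fields are illustrative assumptions.
app = {
    "apiVersion": "mycompany.com/v1",
    "kind": "App",
    "metadata": {"name": "my-app"},
    "spec": {"image": "nginx:1.16", "replicas": 3},
}

print(json.dumps(app, indent=2))
```

Once the definition is registered, an object like this can be created, listed and deleted with the usual kubectl commands. For it to do anything, a custom controller has to watch App objects and act on them.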

The declarative model enables powerful automation capabilities. Controllers watch resources and continuously drive the actual state toward the declared spec. For example, the HorizontalPodAutoscaler tracks metrics like CPU usage and adjusts the replica count of a Deployment in response. The Cluster Autoscaler adds nodes when pods cannot be scheduled for lack of capacity and removes nodes that stay underutilized.
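To give a feel for the autoscaling arithmetic, the sketch below applies the scaling rule from the Kubernetes documentation: desired replicas = ceil(current replicas × current metric / target metric), with no change when the ratio is within a tolerance of 1.0 (0.1 by default). This is a simplification. The real controller also accounts for pod readiness, missing metrics and stabilization windows, and the min_replicas and max_replicas defaults here are assumptions.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,    # assumed bounds for this sketch
    max_replicas: int = 10,
    tolerance: float = 0.1,   # documented default tolerance
) -> int:
    """Simplified HorizontalPodAutoscaler replica calculation."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: leave the replica count alone.
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    # Never go outside the configured bounds.
    return max(min_replicas, min(max_replicas, desired))


# 3 replicas at 90% average CPU with a 60% target.
print(desired_replicas(3, 90, 60))
# 4 replicas at 30% average CPU with a 60% target.
print(desired_replicas(4, 30, 60))
```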

While Kubernetes does support imperative commands like kubectl run, these are less extensible and do not integrate with Kubernetes' automation capabilities as seamlessly. Using declarative APIs provides significant advantages in terms of extensibility, portability and self-service automation.

Advanced Built-in Resources

Kubernetes provides many built-in resources that leverage its declarative architecture to make managing applications easier. Some examples:

Ingress resources allow declarative configuration of external access to Kubernetes services. Ingress is a built-in resource type that abstracts away the implementation details of exposing services. Different Ingress controllers implement it for various environments, such as NGINX, AWS ALB and Traefik. This separation of concerns enables portability.

ConfigMaps provide a native Kubernetes way to inject configuration data into pods. ConfigMaps hold key-value data that can be mounted as files or exposed as environment variables. This separates configuration from code and images. Because pods consume ConfigMaps directly, configuration is managed through the same declarative API as everything else.

Role-Based Access Control (RBAC) provides built-in resources like Roles, ClusterRoles, RoleBindings and ClusterRoleBindings. These combine to form access policies that enable granular permissions. RBAC makes authorization declarative: the API server's authorization layer evaluates these policies on every request.
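The three resources above can be sketched as plain manifests, shown here as Python dictionaries printed as JSON. Every name in them (web, example.com, app-config, LOG_LEVEL, pod-reader, read-pods, jane) and the nginx ingress class are hypothetical values chosen for illustration.

```python
import json

# Ingress: route HTTP traffic for a host to a Service.
ingress = {
    "apiVersion": "networking.k8s.io/v1",
    "kind": "Ingress",
    "metadata": {"name": "web"},
    "spec": {
        "ingressClassName": "nginx",  # selects which Ingress controller handles it
        "rules": [{
            "host": "example.com",
            "http": {"paths": [{
                "path": "/",
                "pathType": "Prefix",
                "backend": {"service": {"name": "web", "port": {"number": 80}}},
            }]},
        }],
    },
}

# ConfigMap: key-value configuration kept outside the image.
config_map = {
    "apiVersion": "v1",
    "kind": "ConfigMap",
    "metadata": {"name": "app-config"},
    "data": {"LOG_LEVEL": "info"},
}

# Container spec fragment that exposes every ConfigMap key as an env variable.
container = {
    "name": "web",
    "image": "nginx:1.16",
    "envFrom": [{"configMapRef": {"name": "app-config"}}],
}

# Role: read-only access to pods in one namespace.
role = {
    "apiVersion": "rbac.authorization.k8s.io/v1",
    "kind": "Role",
    "metadata": {"name": "pod-reader", "namespace": "default"},
    "rules": [{"apiGroups": [""], "resources": ["pods"],
               "verbs": ["get", "list", "watch"]}],
}

# RoleBinding: grant that Role to a user.
role_binding = {
    "apiVersion": "rbac.authorization.k8s.io/v1",
    "kind": "RoleBinding",
    "metadata": {"name": "read-pods", "namespace": "default"},
    "subjects": [{"kind": "User", "name": "jane",
                  "apiGroup": "rbac.authorization.k8s.io"}],
    "roleRef": {"kind": "Role", "name": "pod-reader",
                "apiGroup": "rbac.authorization.k8s.io"},
}

for manifest in (ingress, config_map, role, role_binding):
    print(json.dumps(manifest, indent=2))
```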

Third-party Add-ons

In addition, a vibrant ecosystem of add-ons uses Kubernetes APIs to extend functionality. Here are a few popular examples:

Helm introduces charts, which package YAML templates to declaratively manage complex, configurable applications. Charts can be hosted in repositories, much like packages in a package manager, for easy sharing and installation. Helm effectively extends Kubernetes as an application platform.

Prometheus, when deployed through the Prometheus Operator, integrates via custom resources like ServiceMonitors for dynamic target discovery. Combined with a metrics adapter, its metrics can also drive autoscaling on custom metrics. The Operator and its controllers leverage the extensible API to simplify monitoring and alerting.

Istio injects proxies for traffic control, observability and security using extensibility mechanisms like custom resources, controllers and admission webhooks. This layers on advanced features without changing code.

Argo CD uses custom resources and controllers to enable GitOps workflows on Kubernetes. It models continuous delivery concepts through declarative APIs and reconciles the cluster state against what is declared in Git.

Kubernetes lets you fully customize your system configuration. Everything is set up through Kubernetes resources and add-ons that you define. So you can shape the platform to your specific needs, rather than being constrained by predefined options.

Kubernetes gives you common building blocks that you can combine in creative ways to meet your use cases. This open, programmable design means you can develop novel applications that even the original developers didn't think of.

Benefits and Drawbacks of Kubernetes

Kubernetes has become hugely popular due to the many benefits it offers, but like any technology, it also comes with some downsides that need careful evaluation. Below we dive into the key pros and cons of Kubernetes.

The Main Benefits

Infrastructure Efficiency

One benefit of Kubernetes is how well it lets you manage resources at scale. By scheduling containers across nodes automatically, and with features like auto-scaling and self-healing, Kubernetes helps keep resource utilization high. It adjusts to demand, starting up or shutting down resources as needed. This saves money through better resource use and helps applications get the resources they need to run smoothly.

Enhanced Developer Productivity

By handling many infrastructure tasks like scaling and deployments automatically, Kubernetes frees developers from spending as much time on them. Developers can focus their time and energy on writing application code rather than worrying about infrastructure details. This boosts developer productivity once Kubernetes is set up.

Easy Scalability

A key advantage of Kubernetes is how easily it allows applications to scale up and down based on real-time demand. This makes sure resources are used efficiently while performing well during traffic spikes. The auto-scaling is especially useful for applications with changing workloads.
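As an illustration, auto-scaling for a Deployment is itself declared as a resource. The sketch below shows a HorizontalPodAutoscaler manifest as a Python dictionary printed as JSON. The target name my-app, the replica bounds and the 60% CPU target are assumptions chosen for the example.

```python
import json

# HorizontalPodAutoscaler for a hypothetical Deployment named "my-app".
# The bounds (3 to 10 replicas) and the 60% CPU target are illustrative.
hpa = {
    "apiVersion": "autoscaling/v2",
    "kind": "HorizontalPodAutoscaler",
    "metadata": {"name": "my-app"},
    "spec": {
        # Which workload to scale.
        "scaleTargetRef": {
            "apiVersion": "apps/v1",
            "kind": "Deployment",
            "name": "my-app",
        },
        "minReplicas": 3,
        "maxReplicas": 10,
        # Scale to keep average CPU utilization near 60% of requested CPU.
        "metrics": [{
            "type": "Resource",
            "resource": {
                "name": "cpu",
                "target": {"type": "Utilization", "averageUtilization": 60},
            },
        }],
    },
}

print(json.dumps(hpa, indent=2))
```

CPU utilization is measured relative to each container's CPU request, so the target workload needs resource requests set for this to work.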

Application Portability

Kubernetes provides a consistent deployment experience whether you run applications on-premises, in the public cloud, or hybrid. This makes it easy to move applications between environments and reduces dependency on any single cloud provider.

Consistent Environments

Kubernetes provides the same standardized environment for your code in development, testing and production. This reduces bugs from inconsistencies between environments. Developers and QA engineers get a reliable testing environment that closely matches production.

Resilience

Kubernetes provides capabilities that allow developers to build highly resilient applications, such as automatic scaling, rolling updates, and redundancy across nodes and availability zones. However, these resilience capabilities need to be purposefully implemented using Kubernetes' flexible architecture.
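To show what "purposefully implemented" can look like, here is a hedged sketch of a Deployment spec fragment plus a PodDisruptionBudget, written as Python dictionaries. The app label my-app, the probe path and the specific numbers are assumptions; suitable values depend on the application.

```python
import json

labels = {"app": "my-app"}  # hypothetical label

# Fragment of a Deployment spec with resilience-related settings.
deployment_spec = {
    "replicas": 3,
    "selector": {"matchLabels": labels},
    # Rolling update: add one new pod at a time, never drop below 3 ready pods.
    "strategy": {
        "type": "RollingUpdate",
        "rollingUpdate": {"maxSurge": 1, "maxUnavailable": 0},
    },
    "template": {
        "metadata": {"labels": labels},
        "spec": {
            # Prefer spreading replicas evenly across availability zones.
            "topologySpreadConstraints": [{
                "maxSkew": 1,
                "topologyKey": "topology.kubernetes.io/zone",
                "whenUnsatisfiable": "ScheduleAnyway",
                "labelSelector": {"matchLabels": labels},
            }],
            "containers": [{
                "name": "web",
                "image": "nginx:1.16",
                # Only send traffic to pods that answer this check.
                "readinessProbe": {
                    "httpGet": {"path": "/", "port": 80},
                    "periodSeconds": 5,
                },
            }],
        },
    },
}

# Keep at least 2 pods running during voluntary disruptions such as node drains.
pdb = {
    "apiVersion": "policy/v1",
    "kind": "PodDisruptionBudget",
    "metadata": {"name": "my-app"},
    "spec": {"minAvailable": 2, "selector": {"matchLabels": labels}},
}

print(json.dumps({"deploymentSpec": deployment_spec, "pdb": pdb}, indent=2))
```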

Large Ecosystem

The active open source community around Kubernetes has created many tools, plugins, extensions and resources that make it customizable for diverse uses. Help and support are readily available.

Vendor Neutral

With support for diverse infrastructure both on-premises and across public clouds, Kubernetes reduces the risk of locking into a single vendor. You have flexibility to choose suitable infrastructure for your needs.

The Main Drawbacks

Complexity

The biggest downside of Kubernetes is its complexity.

The extensive capabilities of Kubernetes also make it complex, especially for production-grade deployments with many moving parts working in concert. It demands significant expertise to set up and manage properly.

Ongoing management and troubleshooting also require specialized engineering skills. Misconfigurations can easily take down applications deployed on Kubernetes.

For smaller teams without deep DevOps skills, this complexity can outweigh the benefits. The learning curve is steep, especially for those new to large-scale distributed systems.

Resource Overheads

Running Kubernetes comes with overhead resource costs. A Kubernetes control plane requires a certain baseline level of resources.

For smaller applications or organizations just starting out, these overhead costs may not justify the benefits. The resources required to run Kubernetes can be excessive relative to the workload itself.

The people required to operate Kubernetes properly also impose overhead costs. At high scale, dedicated teams are needed to manage Kubernetes infrastructure full-time.

For many smaller teams, these operational costs are difficult to justify, because the scale at which Kubernetes' efficiency gains pay off is larger than what they run.

Security Concerns

Securing a Kubernetes environment is challenging given the platform's many configurable parts. It requires solid expertise and constant vigilance to lock down properly.

Resource Underutilization

While Kubernetes aims to optimize resource usage, improper configuration can also lead to overprovisioning and waste. Right-sizing cluster resources based on actual utilization is critical.
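A rough sketch of the right-sizing arithmetic follows. The function names, the 20% headroom and the sample numbers are all assumptions for illustration; in practice the usage figures would come from your monitoring system.

```python
import math


def utilization(requested_millicores: int, used_millicores: int) -> float:
    """Fraction of the requested CPU that is actually being used."""
    return used_millicores / requested_millicores


def suggested_request(p95_used_millicores: int, headroom_percent: int = 20) -> int:
    """Suggest a CPU request: observed p95 usage plus some headroom."""
    return math.ceil(p95_used_millicores * (100 + headroom_percent) / 100)


# Hypothetical container: requests 1000m CPU, but p95 usage is only 250m.
requested = 1000
p95_used = 250

print(f"utilization: {utilization(requested, p95_used):.0%}")
print(f"suggested request: {suggested_request(p95_used)}m")
```

A container like this one reserves four times the CPU it uses, and the scheduler treats that reserved capacity as unavailable to other pods.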

Upgrade Headaches

Keeping Kubernetes clusters up-to-date requires careful planning and execution to minimize application downtime and maintain compatibility during upgrades.

Limited Support for Stateful Apps

Kubernetes is optimized for stateless workloads, but it does support stateful applications through features like StatefulSets, persistent volumes, and affinity/anti-affinity rules. Support for stateful apps like databases keeps improving, yet running them on Kubernetes still involves additional complexity.
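As a sketch of the extra pieces a stateful workload brings, here is a minimal StatefulSet manifest as a Python dictionary. The name db, the postgres:16 image, the mount path and the 10Gi volume size are assumptions for illustration. A real database deployment would also need credentials, backups and a matching headless Service.

```python
import json

labels = {"app": "db"}  # hypothetical label

stateful_set = {
    "apiVersion": "apps/v1",
    "kind": "StatefulSet",
    "metadata": {"name": "db"},
    "spec": {
        # Headless Service that gives each pod a stable network identity.
        "serviceName": "db",
        "replicas": 3,
        "selector": {"matchLabels": labels},
        "template": {
            "metadata": {"labels": labels},
            "spec": {
                "containers": [{
                    "name": "db",
                    "image": "postgres:16",
                    "volumeMounts": [
                        {"name": "data", "mountPath": "/var/lib/postgresql/data"}
                    ],
                }],
            },
        },
        # Each replica gets its own PersistentVolumeClaim from this template.
        "volumeClaimTemplates": [{
            "metadata": {"name": "data"},
            "spec": {
                "accessModes": ["ReadWriteOnce"],
                "resources": {"requests": {"storage": "10Gi"}},
            },
        }],
    },
}

print(json.dumps(stateful_set, indent=2))
```

Compared with a Deployment, the additions are the stable identity (serviceName) and the per-replica storage (volumeClaimTemplates), both of which have to be planned for during upgrades and failures.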

Our Take

Kubernetes offers immense benefits but also comes with drawbacks. It excels for large-scale, distributed applications that require portability across environments, high availability, and operational efficiency. But it also introduces complexity and resource overheads.

As with all engineering decisions, tradeoffs must be evaluated based on application needs and team constraints. The technical pros and cons, together with business considerations like costs and team capabilities, should drive the decision.

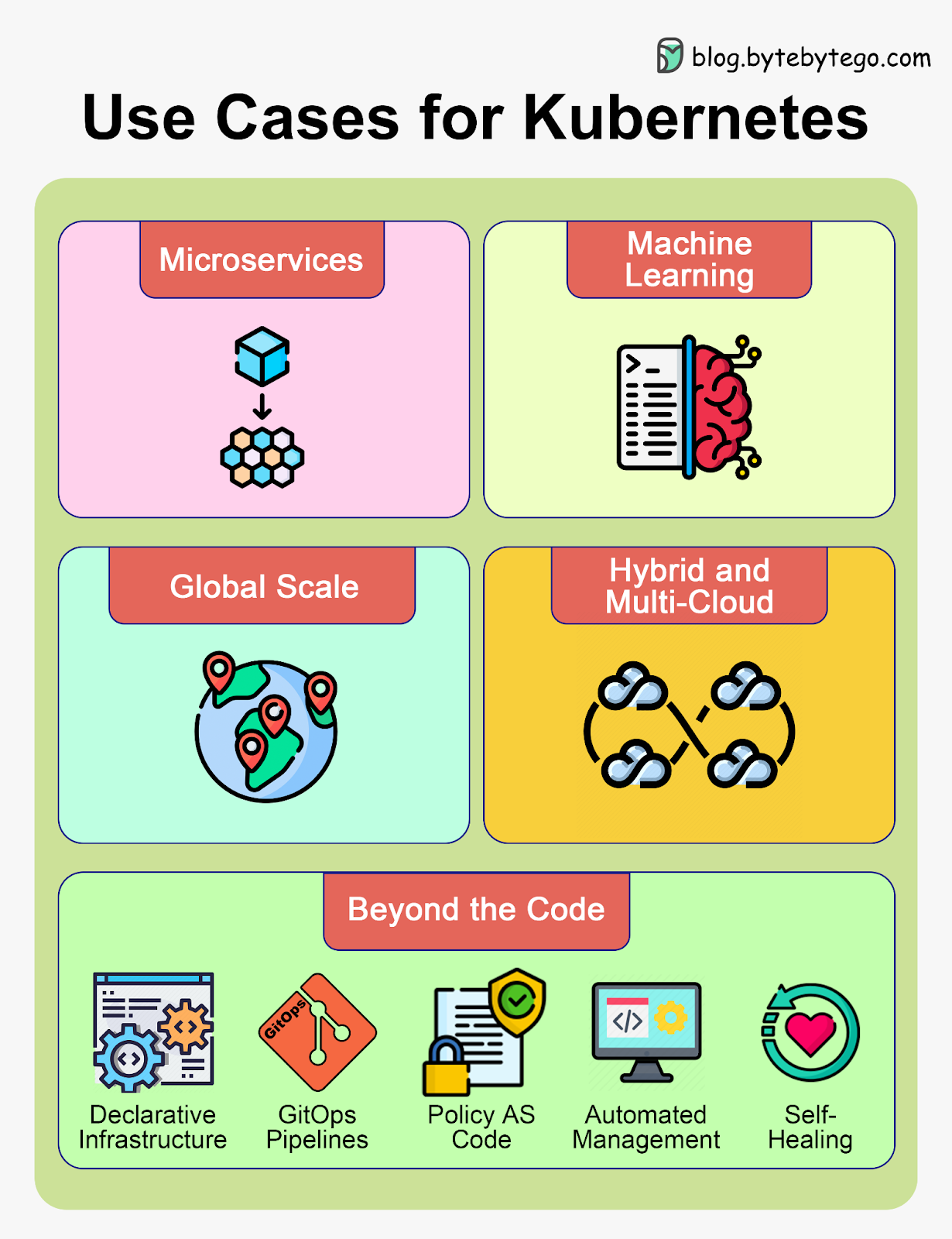

Use Cases for Kubernetes

Kubernetes shines for large-scale, complex applications. But is it a good fit for every workload? Let's examine key use cases where Kubernetes excels and also where it may be overkill.