Top AI Agentic Workflow Patterns

Tinkering with prompts can only get you so far. (Sponsored)

Most companies get stuck tinkering with prompts and wonder why their agents fail to deliver dependable results. This guide from You.com breaks down the evolution of agent management, revealing the five stages for building a successful AI agent and why most organizations haven’t gotten there yet.

In this guide, you’ll learn:

Why prompts alone aren’t enough and how context and metadata unlock reliable agent automation

Four essential ways to calculate ROI, plus when and how to use each metric

Real-world challenges at each stage of agent management and how to avoid them

When we first interact with large language models, the experience is straightforward. We type a prompt, the model generates a response, and the interaction ends.

This single-turn approach works well for simple questions or basic content generation, but it quickly reveals its limitations when we tackle more complex tasks. Imagine asking an AI to analyze market trends, create a comprehensive report, and provide actionable recommendations. A single response, no matter how well-crafted, often falls short because it lacks the opportunity to gather additional information, reflect on its reasoning, or refine its output based on feedback.

This is where agentic workflows come into play.

Rather than treating AI interactions as one-and-done transactions, agentic workflows introduce iterative processes, tool integration, and structured problem-solving approaches. These workflows transform language models from sophisticated text generators into capable agents that can break down complex problems, adapt their strategies, and produce higher-quality results. The difference is similar to comparing a quick sketch to a carefully refined painting. Both have their place, but when quality and reliability matter, the iterative approach wins.

In this article, we will look at the most popular agentic workflow patterns and how they work.

Understanding Agentic Workflows

An agentic workflow doesn’t just respond to a single instruction. Instead, it operates with a degree of autonomy, making decisions about how to approach a task, what steps to take, and how to adapt based on what it discovers along the way. This represents a fundamental shift in how we think about using AI systems.

Consider the difference between asking a basic chatbot and an agentic system to help write a research report. The basic chatbot receives our request and generates a report based on its training data, delivering whatever it produces in one response. An agentic system, however, might first search the web for current information on the topic, then organize the findings into themes, draft sections of the report, review each section for accuracy and coherence, revise weak areas, and finally compile everything into a polished document. Each of these steps might involve multiple sub-steps, decisions about which tools to use, and adaptations based on what the agent discovers.

What makes workflows truly agentic are the iteration and feedback loops built into the process. Instead of generating output in a single pass, agentic workflows involve cycles where the agent takes an action, observes the result, and uses that observation to inform the next action. This mirrors how humans actually solve complex problems. We rarely figure everything out up front and execute a perfect plan. Instead, we try something, see what happens, learn from the result, and adjust our approach. Agentic workflows bring this same adaptive, iterative quality to AI systems.

The Five Essential Agentic Workflow Patterns

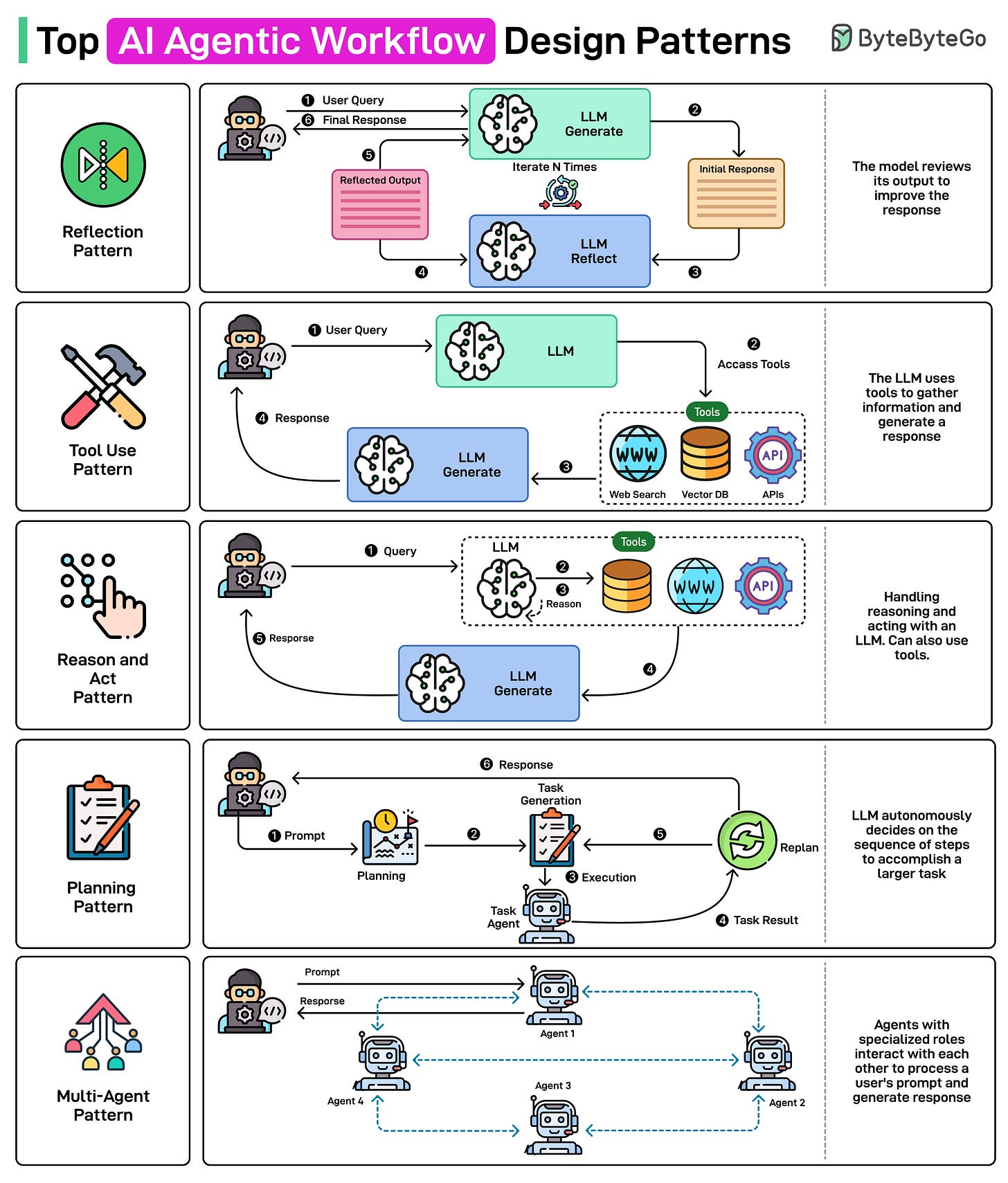

Let us now look at five essential agentic workflow patterns:

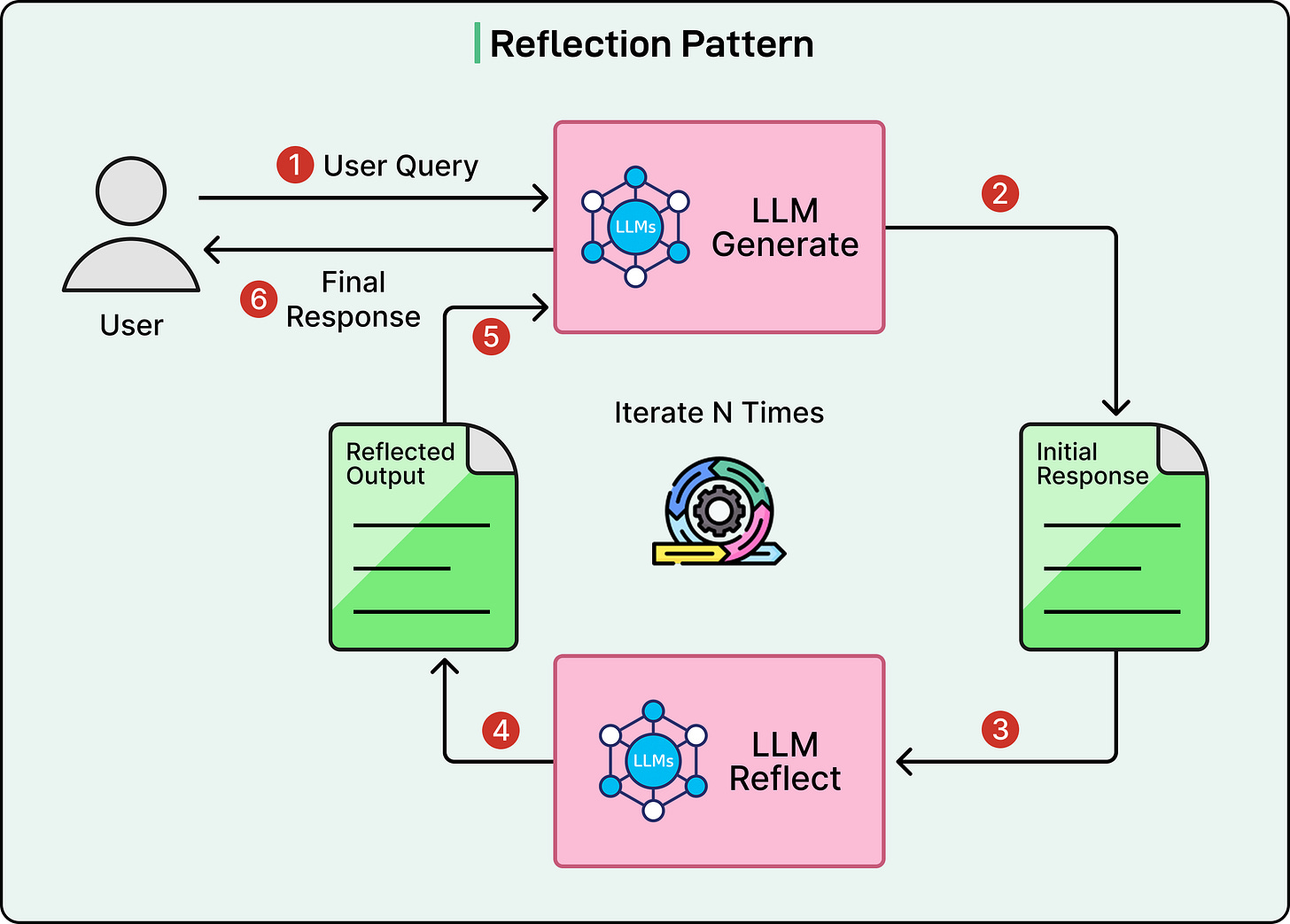

Reflection Pattern: The Self-Improving Agent

At its core, reflection is about having an agent review and critique its own work, then revise based on that critique. This simple idea improves output quality because it introduces an iterative refinement process that catches errors, identifies weaknesses, and enhances strengths.

Here’s how the reflection cycle works in practice.

The agent first generates an initial output based on the task or prompt it receives.

Then, instead of immediately presenting this output as final, the agent switches into critique mode. It examines what it just produced, looking for problems, inconsistencies, areas that lack clarity, or opportunities for improvement. This critique becomes the basis for revision.

The agent generates an improved version that addresses the issues it identified. Depending on the implementation, this cycle might repeat multiple times, with each iteration refining the output further.

See the diagram below:

The power of reflection becomes even more apparent when we specialize in the type of critique being performed. Some examples are as follows:

An agent might reflect specifically on accuracy, checking whether the facts and claims it made are correct and well-supported.

Alternatively, reflection might focus on clarity, asking whether someone unfamiliar with the topic would understand the explanation.

For creative writing, reflection might evaluate tone, ensuring the voice matches the intended style and audience.

For code generation, reflection could focus on identifying bugs, security vulnerabilities, or opportunities to optimize performance.

The reflection pattern works best for tasks where quality matters more than speed and where there are subjective aspects that benefit from review. The pattern, however, is less necessary for simple, factual queries where the answer is straightforward or for tasks where speed is paramount and good enough is truly sufficient.

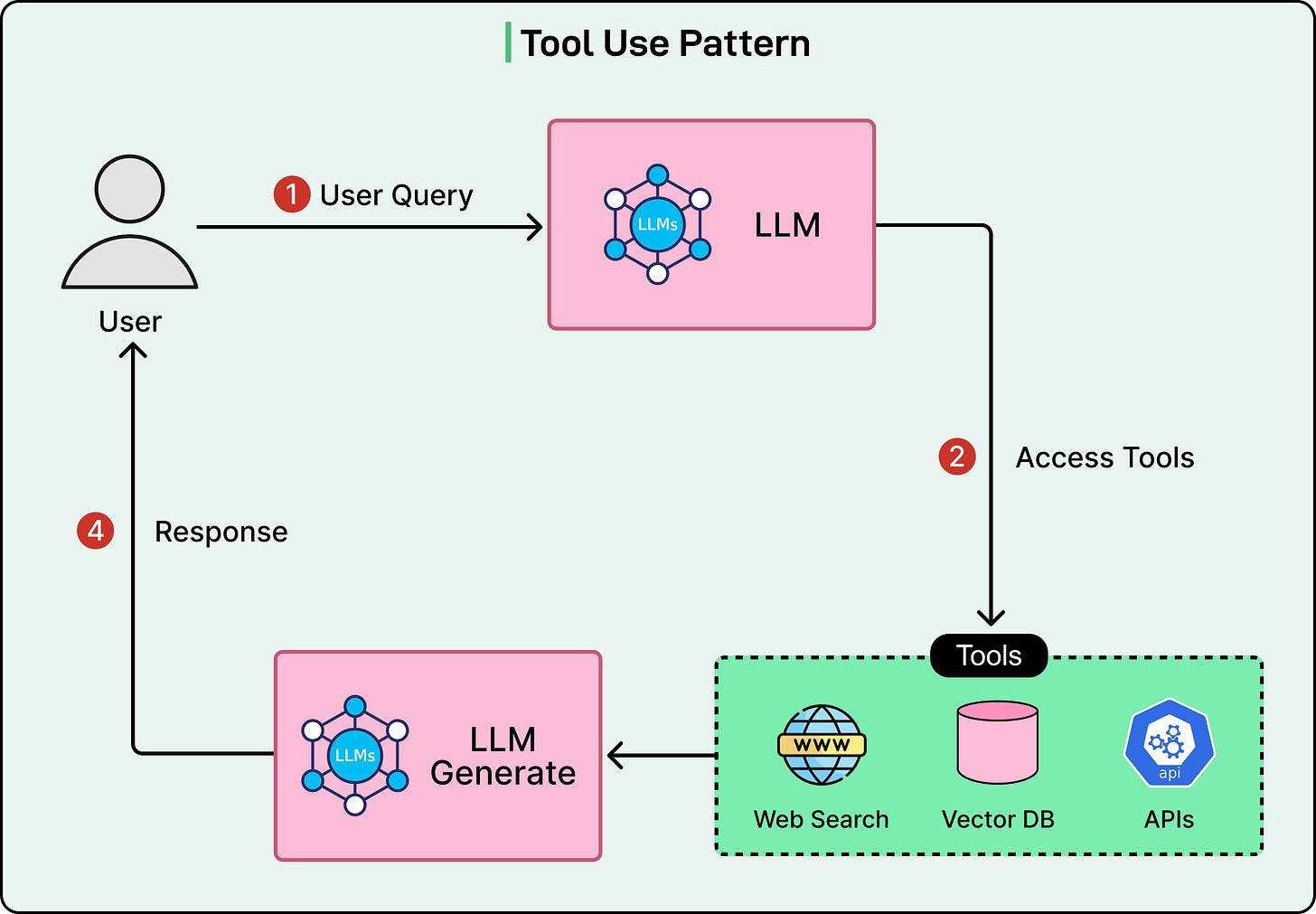

Tool Use Pattern

The tool use pattern represents a fundamental expansion of what AI agents can accomplish.

A language model by itself, no matter how sophisticated, is limited to reasoning about information it learned during training and generating text based on that knowledge. It cannot access current information, perform precise calculations with large numbers, retrieve data from specific databases, or interact with external systems. Tools change everything.

In the tool use pattern, agents are equipped with a set of capabilities they can invoke when needed. These might include web search engines for finding current information, APIs for accessing services like weather data or stock prices, code interpreters for running programs and performing calculations, database query tools for retrieving specific records, file system access for reading and writing documents, and countless other specialized functions. The critical distinction from traditional software is that the agent itself decides when and how to use these tools based on the task at hand.

See the diagram below:

When an agent receives a task, it analyzes what capabilities are needed to accomplish that task. For example:

If the task requires information the agent doesn’t have, it recognizes the need for a search or data retrieval tool.

If the task involves mathematical operations, it accesses a calculator or code interpreter.

If the task requires interacting with a specific service, it uses the appropriate API tool.

What makes tool use powerful is the dynamic nature of tool selection and the ability to chain multiple tool calls together.

The agent doesn’t follow a predetermined script. If the first search doesn’t return adequate information, the agent might reformulate its query and search again. If an API call fails or returns an error, the agent might try an alternative approach or a different tool entirely. This adaptability makes tool-enabled agents far more capable than rigid automated workflows.

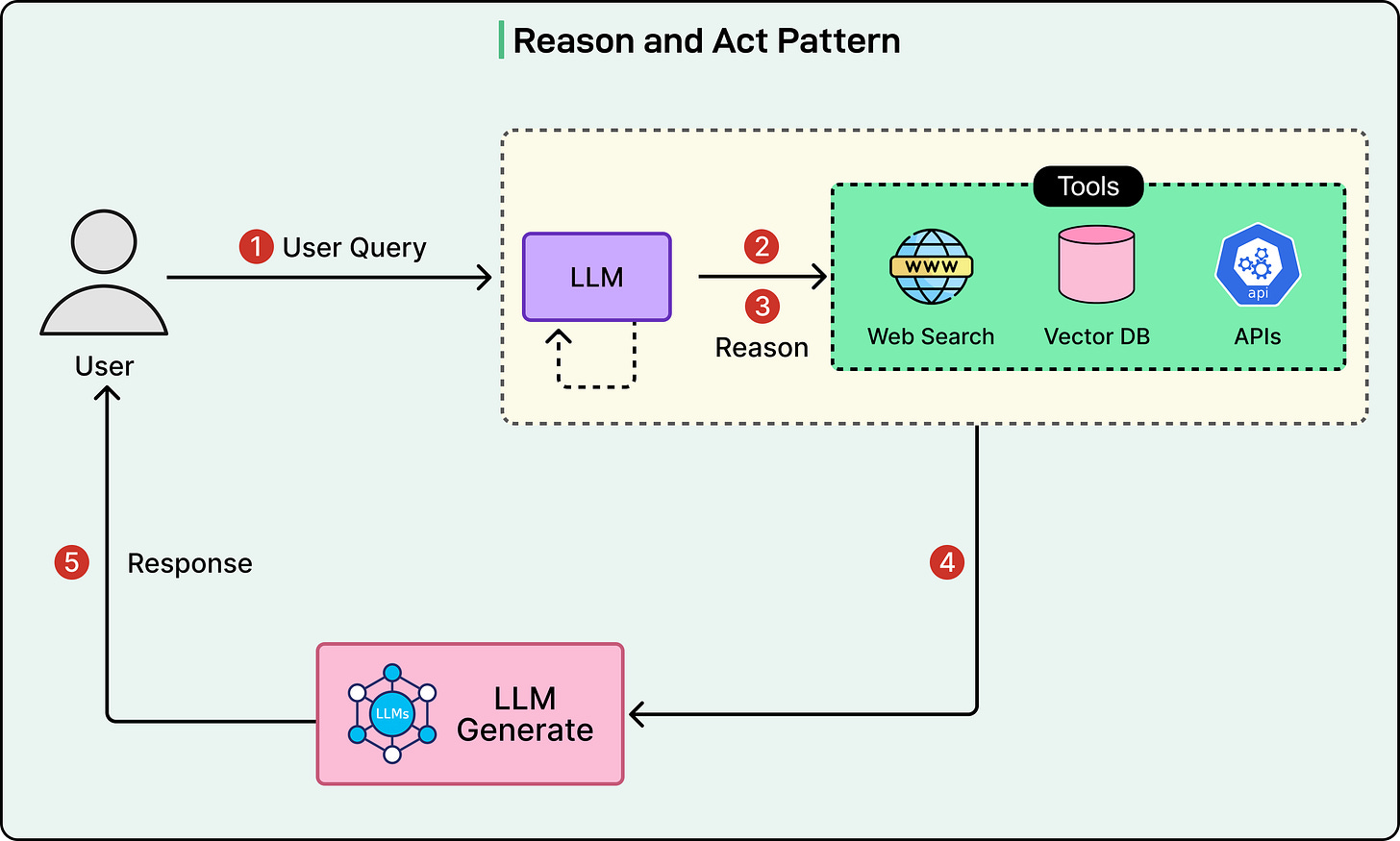

Reason and Act Pattern (ReAct)

The Reason and Act pattern, commonly known as ReAct, represents a sophisticated approach to problem-solving that combines explicit reasoning with iterative action. Rather than thinking through an entire plan before acting, or blindly taking actions without reflection, ReAct agents alternate between reasoning about what to do next and actually doing it. This interleaving of thought and action creates a natural, adaptive problem-solving process.

The ReAct cycle follows a clear pattern.

First, the agent reasons about the current situation and what it needs to accomplish. This reasoning step is made explicit, often literally written out as the agent’s internal thought process. The agent might think about what information it has, what it still needs, what approaches might work, and what the best next step is.

Then, based on this reasoning, the agent takes an action. This might be using a tool to gather information, performing a calculation, or making a decision.

After the action, the agent observes the results and enters a new reasoning phase, thinking about what it learned and what to do next. This cycle continues until the agent determines it has accomplished the goal or reached a point where it cannot proceed further.

See the diagram below:

The explicit reasoning steps serve multiple important purposes.

First, they help the agent stay on track and maintain focus on the goal. By articulating what it’s trying to accomplish and why each action makes sense, the agent is less likely to go down irrelevant paths or get stuck in unproductive loops.

Second, reasoning steps enable adaptation. When an action doesn’t yield expected results, the reasoning phase allows the agent to diagnose why and adjust its approach rather than blindly continuing.

Third, the reasoning trail provides transparency. Users and developers can see not just what the agent did, but why it made those choices, which is valuable for trust, debugging, and understanding the agent’s decision-making process.

Comparing ReAct to pure planning or pure execution highlights its strengths.

Pure planning means figuring out all the steps before taking any action. This works well when we have complete information, and the environment is predictable, but it struggles when we need to discover information along the way or when circumstances change.

Pure execution means taking actions without much forethought, which is fast but often inefficient and prone to mistakes.

ReAct finds a middle ground, providing enough structure through reasoning while maintaining flexibility through iterative action.

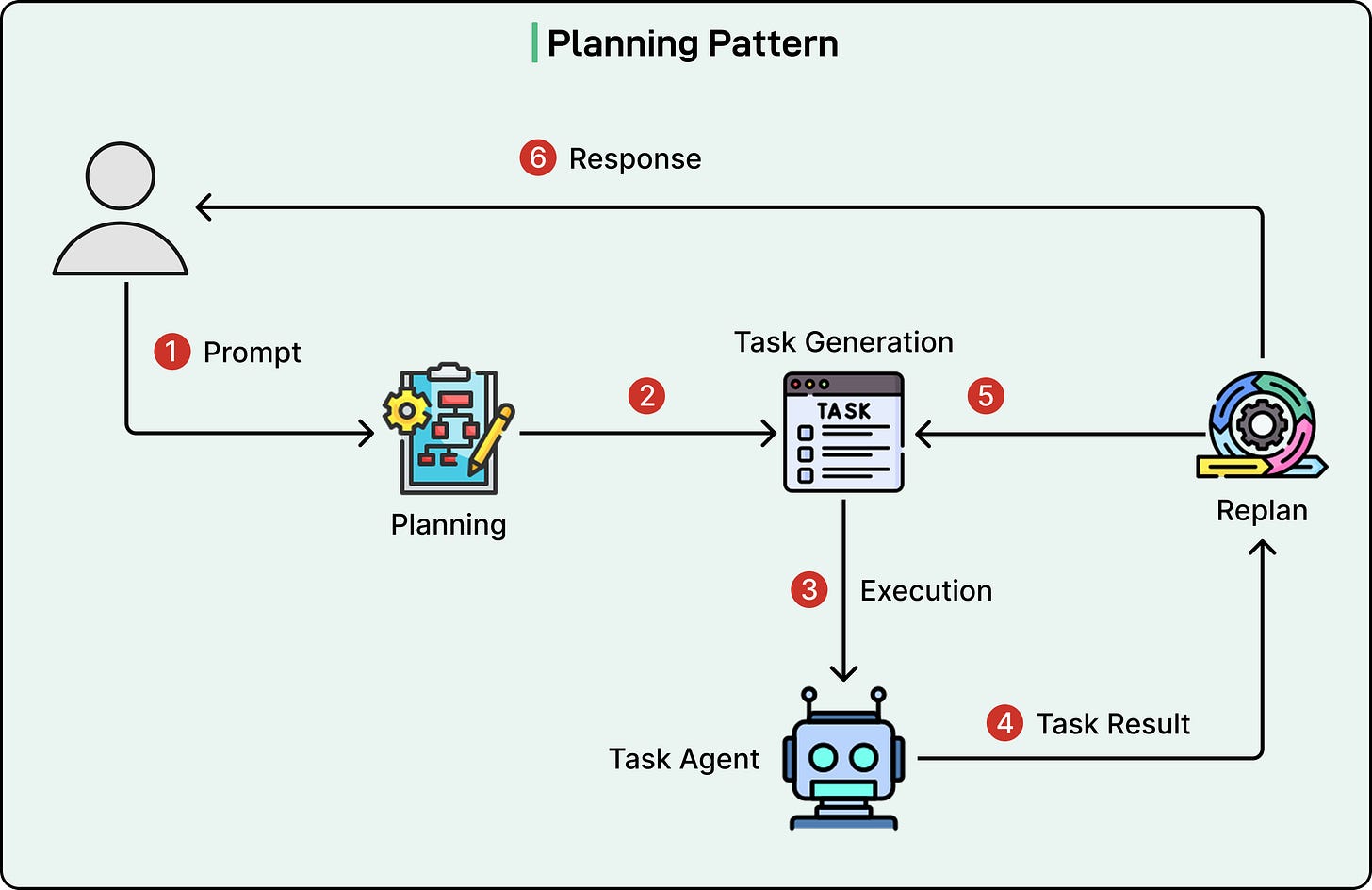

Planning Pattern

The planning pattern takes a different approach from ReAct by emphasizing upfront strategic thinking before execution begins.

When using the planning pattern, the agent starts by analyzing the overall goal and understanding what success looks like. It then breaks down this goal into smaller, more manageable subtasks. This decomposition continues until the agent has identified concrete, actionable steps.

Crucially, the agent identifies dependencies between tasks, determining which steps must be completed before others can begin and which steps can potentially happen in parallel. The agent also considers what resources, tools, or information each step will require. Only after creating this structured plan does the agent begin execution.

See the diagram below:

One of the planning pattern’s key strengths is adaptive planning.

The planning pattern works best for tasks with natural phases or stages where some activities logically precede others. It’s valuable for tasks with constraints like deadlines, budgets, or resource limitations where coordination matters. It shines in situations where mistakes or backtracking would be costly, making it worth investing time in thoughtful planning. Complex projects involving multiple work streams benefit greatly from planning.

However, the planning pattern has limitations.

For simple, linear tasks where each step naturally suggests the next one, the overhead of creating a formal plan provides little benefit.

For highly uncertain tasks where we’re likely to discover critical information during execution that fundamentally changes the approach, extensive upfront planning might be wasted effort.

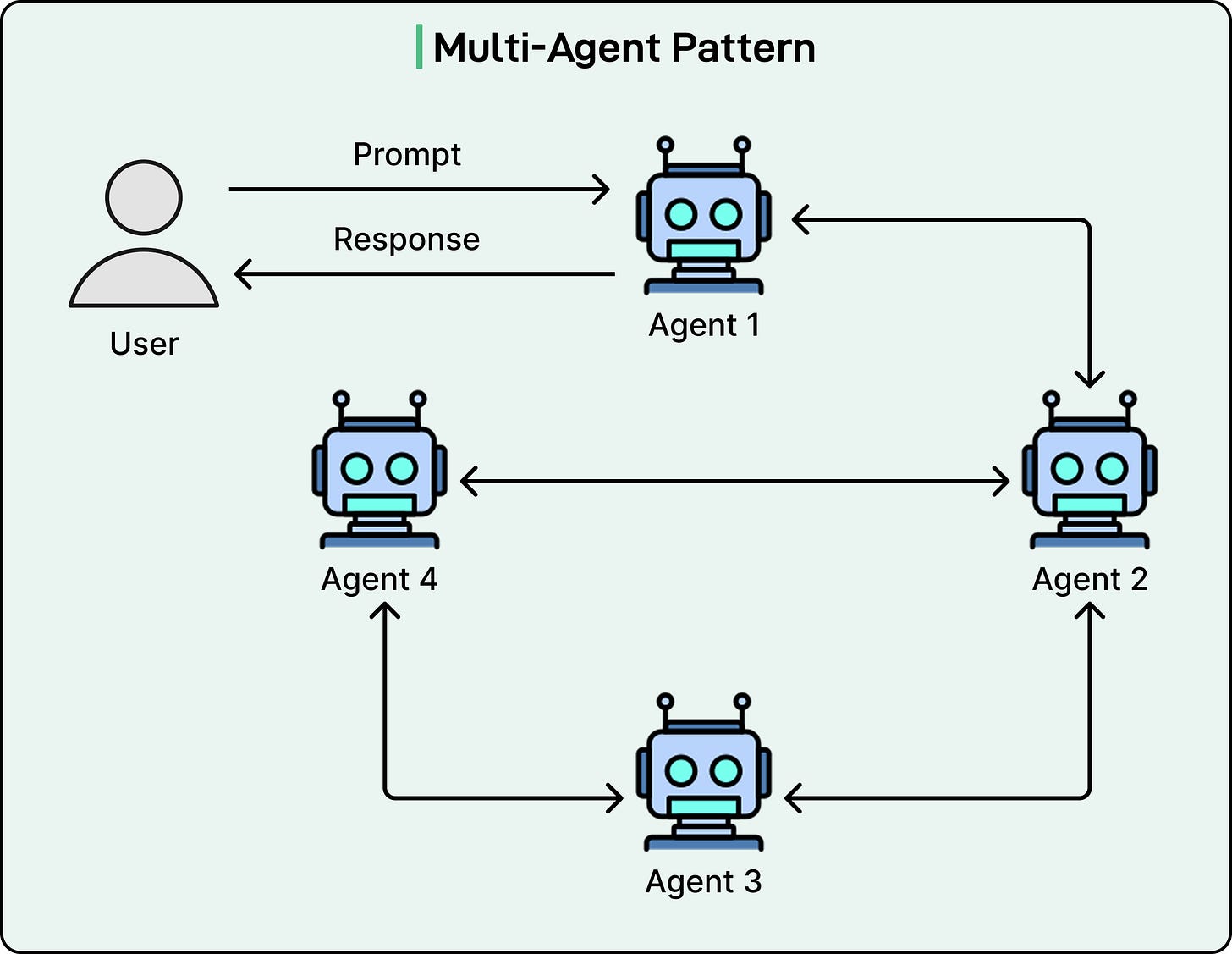

Multi-Agent Pattern

The multi-agent pattern represents perhaps the most sophisticated approach to building AI systems.

Instead of relying on a single agent to handle everything, this pattern uses multiple specialized agents that collaborate to accomplish tasks. Each agent has specific expertise, capabilities, or perspectives, and they work together much like human teams do.

The core insight behind multi-agent systems is that specialization often leads to better performance than generalization.

A single agent trying to be excellent at everything faces challenges. It must balance competing requirements in its design and training. It needs broad knowledge but also deep expertise. It must be creative but also critical. By dividing responsibilities among multiple agents, each can be optimized for its specific role.

In a multi-agent system, we typically see several types of roles.

There are specialist agents focused on particular domains or tasks, such as a research agent that excels at finding and synthesizing information, a coding agent optimized for writing and debugging code, or a data analysis agent skilled at statistical analysis and visualization.

There are often critics or review agents whose job is to evaluate outputs from other agents, identifying flaws, suggesting improvements, or verifying quality.

There’s usually a coordinator or orchestrator agent that manages the overall workflow, deciding which specialist should handle each subtask and ensuring all the pieces come together coherently.

The multi-agent pattern introduces complexity trade-offs as follows:

Coordination overhead increases with more agents.

Communication between agents requires clear protocols.

Debugging becomes more challenging because problems might arise from interactions between agents rather than individual agent errors.

The benefits must justify these costs. For simple tasks, a single capable agent is almost always better. For complex tasks requiring diverse expertise, careful coordination, or multiple perspectives, the multi-agent approach often produces superior results despite its added complexity.

Conclusion

The various agentic workflow patterns represent a fundamental evolution in how we build and deploy AI systems.

Moving beyond simple prompting to sophisticated, iterative processes has transformed what AI agents can reliably accomplish. Here’s a quick summary of the patterns we have covered:

The reflection pattern ensures quality through self-improvement.

Tool use extends capabilities far beyond pure language generation.

ReAct combines thoughtful reasoning with adaptive action.

Planning brings strategic thinking to complex tasks.

Multi-agent collaboration leverages specialization and diverse perspectives.

Together, these patterns provide a robust toolkit for building AI systems capable of handling real-world complexity.

What makes these patterns particularly powerful is that they’re not mutually exclusive. The most sophisticated agent systems often combine multiple patterns to achieve their goals.

SPONSOR US

Get your product in front of more than 1,000,000 tech professionals.

Our newsletter puts your products and services directly in front of an audience that matters - hundreds of thousands of engineering leaders and senior engineers - who have influence over significant tech decisions and big purchases.

Space Fills Up Fast - Reserve Today

Ad spots typically sell out about 4 weeks in advance. To ensure your ad reaches this influential audience, reserve your space now by emailing sponsorship@bytebytego.com.

Informative piece, according to you which of these pattern is highly suitable for enterprise applications?

Very helpful. Question, for a small SaaS, is it overkill of using vector db?