Understanding Load Balancers: Traffic Management at Scale

As applications grow, they eventually have to handle more users, more data, and more requests than a single server can manage.

This is where load balancers come in.

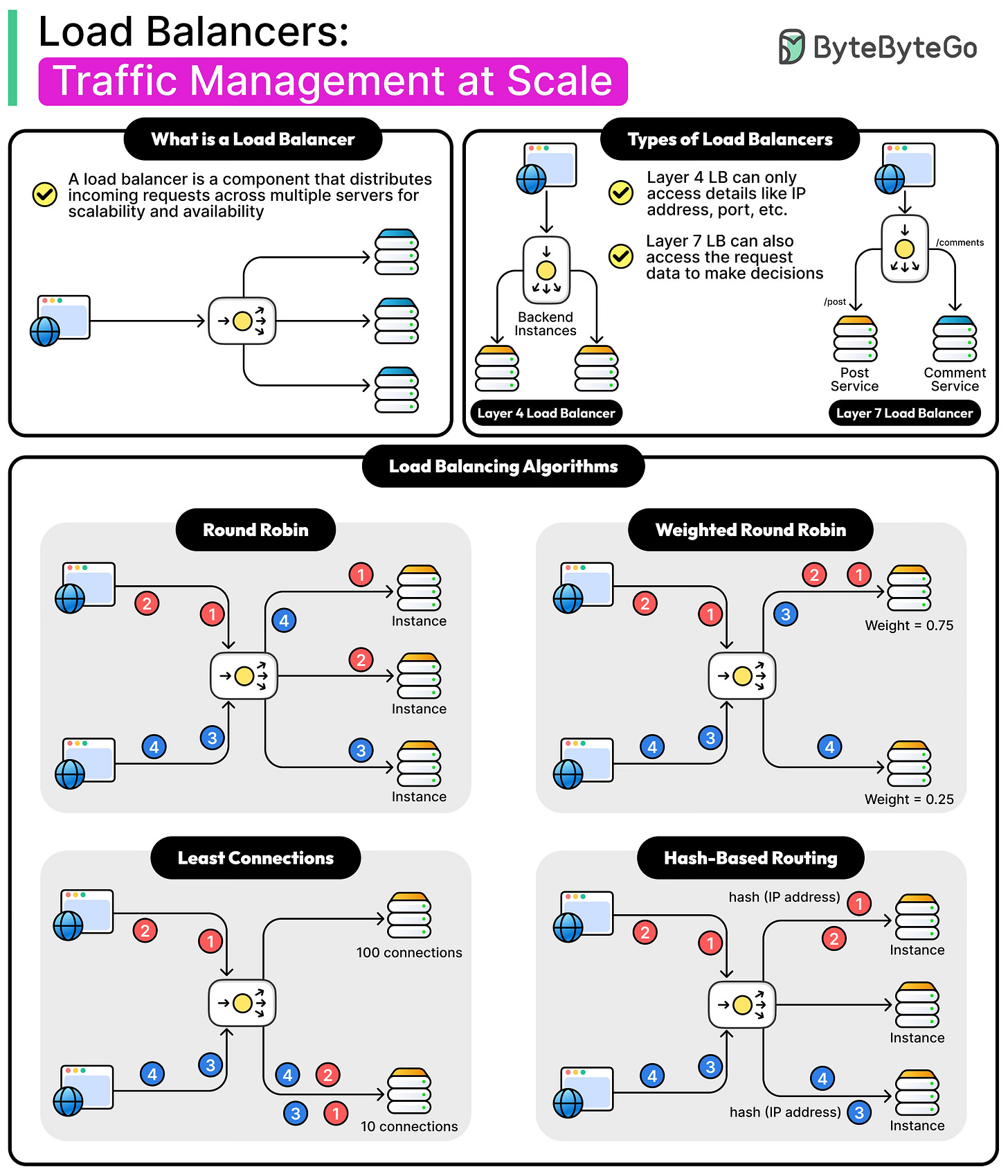

A load balancer is a system that sits between clients and servers and distributes incoming traffic across multiple backend servers. Spreading requests this way helps keep any single server from being overloaded, so users see more consistent response times even as traffic grows.
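To make the idea of distributing traffic concrete, here is a minimal sketch of one simple strategy, round robin, where each new request goes to the next server in a fixed rotation. The class name `RoundRobinBalancer` and the backend addresses are hypothetical and chosen only for illustration; a real load balancer would also forward the request over the network rather than just return a name.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hands out backends in a fixed, repeating order (illustrative only)."""

    def __init__(self, backends):
        if not backends:
            raise ValueError("at least one backend is required")
        self._backends = list(backends)
        # cycle() repeats the list forever: a, b, c, a, b, c, ...
        self._rotation = cycle(self._backends)

    def pick(self):
        # Each call returns the next backend in the rotation.
        return next(self._rotation)


# Hypothetical backend addresses, assumed for this example.
balancer = RoundRobinBalancer(["app-1:8080", "app-2:8080", "app-3:8080"])

# Simulate six incoming requests and record where each one is sent.
assignments = [balancer.pick() for _ in range(6)]
print(assignments)
```

With three backends and six requests, each server receives exactly two requests, which is the even spread the paragraph above describes.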

Load balancers are fundamental to modern distributed systems because they let developers scale applications horizontally by adding more servers to a pool. They also increase reliability by detecting server failures and automatically rerouting traffic to the remaining healthy machines.
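The two behaviors described here, growing the pool and routing around failed servers, can be sketched as follows. This is a simplified illustration under stated assumptions: `HealthAwarePool` and its methods are hypothetical names, and health is flipped manually with `mark_down`, whereas a production load balancer would typically learn it from periodic health checks or failed connections.

```python
class HealthAwarePool:
    """Rotates through backends, skipping any marked unhealthy (sketch)."""

    def __init__(self, backends):
        self._backends = list(backends)
        self._healthy = set(self._backends)
        self._next = 0  # index of the next backend to try

    def add(self, backend):
        # Horizontal scaling: a new server joins the pool and starts
        # receiving traffic on later picks.
        if backend not in self._backends:
            self._backends.append(backend)
            self._healthy.add(backend)

    def mark_down(self, backend):
        # Assumed to be called by some health checker (not shown here).
        self._healthy.discard(backend)

    def mark_up(self, backend):
        if backend in self._backends:
            self._healthy.add(backend)

    def pick(self):
        # Try each backend at most once per call, skipping unhealthy ones.
        for _ in range(len(self._backends)):
            candidate = self._backends[self._next]
            self._next = (self._next + 1) % len(self._backends)
            if candidate in self._healthy:
                return candidate
        raise RuntimeError("no healthy backends available")


pool = HealthAwarePool(["app-1", "app-2", "app-3"])

# Simulate app-2 failing: traffic is rerouted to the remaining servers.
pool.mark_down("app-2")
after_failure = [pool.pick() for _ in range(4)]

# Simulate scaling out: the new server starts taking requests.
pool.add("app-4")
after_scale = [pool.pick() for _ in range(3)]

print(after_failure)
print(after_scale)
```

Clients never need to know that `app-2` failed or that `app-4` was added; they keep sending requests to the load balancer, which is what makes the pool safe to change behind it.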

In effect, load balancers improve both availability and scalability, two of the most critical qualities of any large-scale system.

In this article, we will learn how load balancers work internally, the differences between load balancing at the transport and application layers, and the common algorithms that power traffic distribution in real-world systems.