A Brief History of Airbnb’s Architecture

Brian Chesky and Joe Gabbia moved to San Francisco in 2007. While they were looking to raise money for their business ideas, they needed to pay their rent.

Incidentally, a design conference was coming to town at the time, which meant lots of designers would be looking for accommodation. They came up with the idea of placing an air mattress in their living room and turning it into a bed and breakfast.

In 2008, Nathan Blecharczyk joined Brian and Joe as CTO and co-founder, and they started a venture known as AirBed and Breakfast.

This was the birth of Airbnb.

Fast forward to today: Airbnb operates in 200+ countries, and its 4 million hosts have welcomed over 1.5 billion guests across the globe.

As Airbnb grew by leaps and bounds, its software architecture evolved to keep pace.

In this post, we will look at the evolution of Airbnb’s architecture over the years, the lessons they learned along the way and the tools they developed to support the vision.

The Initial Version

As with most startups, the first version of Airbnb’s web application was a monolith. It was built using Ruby on Rails and was internally known as the monorail.

In Airbnb’s view, a monolith was a single-tier unit responsible for both client-side and server-side functionality.

What does this mean in practice?

It means that the model, view and controller layers all live together in a single repository.

The below diagram shows this approach.

There were several advantages to this monolithic approach:

Monoliths are easy to get started with, which was exactly what Airbnb needed initially

They are good for agile development

Complexity is manageable

The problems started as Airbnb’s engineering team grew rapidly. The team was doubling year over year, which meant more and more developers were adding new code and changing existing code within the monolithic application.

Over time, the codebase became increasingly tightly coupled and data ownership became unclear. For example, it was difficult to determine which tables were owned by which application functionality. Any developer could change any part of the application, which made changes difficult to track and coordinate.

This situation led to multiple issues such as:

At any given time, hundreds of engineers were working on the monorail and therefore, deployments became slow and cumbersome.

Since Airbnb followed the philosophy of democratic deployments (where each engineer was responsible for testing and deploying their own changes to production), deployments turned into a huge mess of conflicting changes.

Engineering productivity dropped and frustration grew among developers.

To alleviate these pain points, Airbnb embarked on a migration journey from the monolith to a Service-Oriented Architecture (SOA).

SOA at Airbnb

How did Airbnb view Service-Oriented Architecture or SOA?

For them, SOA is a network of loosely coupled services where clients make their requests to some sort of gateway and the gateway routes these requests to multiple services and databases.

Adopting SOA allowed Airbnb to build and deploy services separately. These services could also be scaled independently, and ownership became more clearly defined.

However, building services is one thing. It’s also extremely important to design these services with a disciplined approach.

Airbnb settled on a few key principles for designing these services:

Services should own both the reads and writes to their data. It’s quite similar to the database-per-service pattern where a particular database should be owned by one and only one service, making it easier to maintain data consistency.

Each service should address a specific concern. Airbnb wanted to make sure that the monolith did not simply decompose into another giant service that would turn into a monolith over time. At the same time, they wanted to avoid the path of traditional, overly granular microservices that each do only one small thing. Instead, Airbnb focused on building services around a specific business functionality. Think of it as a high-cohesion design.

Services should avoid duplicating functionality. Shared infrastructure and code were provided through shared libraries and shared services, which made them easier to maintain.

Data mutation should take place via standard events. For example, if the reservation service creates a new row, the availability service should learn about this reservation by means of an event so that it can mark the home’s availability as busy.

Each service must be built as if it were mission-critical. This meant the service should have appropriate alerting, built-in observability and infrastructure best practices.

In Airbnb’s view, these principles are extremely important and they help create a logical path that all engineers can follow in order to build a shared understanding of the service architecture.
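The event-driven mutation principle above can be sketched in a few lines. This is a minimal, in-memory illustration under stated assumptions: `EventBus`, `ReservationCreated`, `ReservationService` and `AvailabilityService` are hypothetical names, and the bus stands in for whatever messaging infrastructure Airbnb actually uses. Each service writes only to its own store, and the availability service learns about a new reservation only through the event.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Callable


@dataclass(frozen=True)
class ReservationCreated:
    """Hypothetical standard event emitted after a reservation is stored."""
    reservation_id: str
    home_id: str
    check_in: date
    check_out: date  # exclusive: the guest leaves on this day


class EventBus:
    """In-memory stand-in for a real message broker (assumption)."""

    def __init__(self) -> None:
        self._handlers: dict[type, list[Callable[[object], None]]] = defaultdict(list)

    def subscribe(self, event_type: type, handler: Callable[[object], None]) -> None:
        self._handlers[event_type].append(handler)

    def publish(self, event: object) -> None:
        for handler in self._handlers[type(event)]:
            handler(event)


class ReservationService:
    """Owns reads and writes to reservation data, and nothing else."""

    def __init__(self, bus: EventBus) -> None:
        self._rows: dict[str, ReservationCreated] = {}
        self._bus = bus

    def create(self, reservation_id: str, home_id: str,
               check_in: date, check_out: date) -> None:
        event = ReservationCreated(reservation_id, home_id, check_in, check_out)
        self._rows[reservation_id] = event  # write to its own store only
        self._bus.publish(event)            # announce the mutation as an event


class AvailabilityService:
    """Owns availability data; never reads the reservation store directly."""

    def __init__(self, bus: EventBus) -> None:
        self._busy: dict[str, set[date]] = defaultdict(set)
        bus.subscribe(ReservationCreated, self._on_reservation_created)

    def _on_reservation_created(self, event: ReservationCreated) -> None:
        day = event.check_in
        while day < event.check_out:  # mark each booked night as busy
            self._busy[event.home_id].add(day)
            day += timedelta(days=1)

    def is_available(self, home_id: str, day: date) -> bool:
        return day not in self._busy.get(home_id, set())


bus = EventBus()
reservations = ReservationService(bus)
availability = AvailabilityService(bus)
reservations.create("r1", "home-42", date(2024, 5, 1), date(2024, 5, 3))
print(availability.is_available("home-42", date(2024, 5, 2)))  # False
print(availability.is_available("home-42", date(2024, 5, 3)))  # True
```

In a real deployment the publish step would typically be asynchronous, so the availability view becomes eventually consistent; the synchronous bus here only keeps the example readable.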

Airbnb’s Migration Journey

With the above principles and goals, Airbnb began the migration journey from the monorail to a brand-new service-oriented approach.

It was a long migration process that went through multiple iterations along the way.

Version 1

In this version, every request went through the monorail.

The monorail was responsible for the presentation layer, the business logic and the data access.

You can consider it as the initial state of Airbnb’s architecture.

Version 2

The next version was a hybrid where the monorail coexisted with the service-oriented architecture.

The main difference was that the monorail only handled the routing and view layer. Its job was to send incoming API traffic to the network of new services that were responsible for the business logic, data model and access.

What kind of services are we talking about over here?

Airbnb classified their services into four different types as shown in the below diagram.

Here are the details of the various types of services:

Data Service - This is the bottom layer and acts as the entry point for all read and write operations on the data entities. A data service must not depend on any other service because it only accesses the data storage.

Derived Data Service - The derived services stay one layer above the data service. These services can read from the data services and also apply some basic business logic.

Middle Tier - These services house large pieces of business logic that don’t fit at the data service or derived data service level.

Presentation Service - At the very top of the structure are the presentation services. Their job is to aggregate data from all the other services. In addition, the presentation service also applies some frontend specific business logic before returning the data to the client.
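The four service types imply a dependency rule that tooling can check automatically. The sketch below assumes calls may only go strictly downward (a service may call only services in lower tiers, and data services call nothing); the article does not say whether same-tier calls were allowed, and the `Tier` enum and `layering_violations` helper are hypothetical.

```python
from enum import IntEnum


class Tier(IntEnum):
    """Service tiers from the bottom of the stack to the top."""
    DATA = 0
    DERIVED_DATA = 1
    MIDDLE = 2
    PRESENTATION = 3


def layering_violations(tiers: dict[str, Tier],
                        deps: dict[str, list[str]]) -> list[str]:
    """List calls that break the assumed 'strictly downward' rule."""
    problems = []
    for service, callees in deps.items():
        for callee in callees:
            if tiers[service] is Tier.DATA:
                problems.append(f"{service} is a data service and must not call {callee}")
            elif tiers[callee] >= tiers[service]:
                problems.append(f"{service} ({tiers[service].name}) must not call "
                                f"{callee} ({tiers[callee].name})")
    return problems


# Hypothetical graph built from the services mentioned in this article.
tiers = {
    "homes-data": Tier.DATA,
    "pricing-derived": Tier.DERIVED_DATA,
    "checkout-presentation": Tier.PRESENTATION,
}
deps = {
    "pricing-derived": ["homes-data"],
    "checkout-presentation": ["pricing-derived", "homes-data"],
}
print(layering_violations(tiers, deps))  # []
```

Running a check like this in CI is one way to keep the call graph from drifting upward as more teams add services.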

With these service definitions, Airbnb started by building the data service layer.

For example, they started with the homes data service, which acted as the foundational layer of the Airbnb business. At the time, the monorail accessed this data using Active Record, the data access library in Rails.

They intercepted the incoming requests at the Active Record level and instead of routing to the database, they sent these requests to the new homes data service. The homes data service was then responsible for routing to the data store.

The below diagram shows this approach.
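Intercepting at the data-access layer means the callers do not change; only the backend behind the model does. Airbnb did this inside Rails’ Active Record, so the Python below is only an analogue of the idea: `Home`, `DirectDatabase` and `HomesDataServiceClient` are hypothetical, and the service client is a stub rather than a real network call.

```python
from typing import Protocol


class HomesBackend(Protocol):
    def find(self, home_id: int) -> dict: ...


class DirectDatabase:
    """Old path: the monolith reads the homes data itself (stubbed)."""

    def __init__(self, rows: dict[int, dict]) -> None:
        self.rows = rows

    def find(self, home_id: int) -> dict:
        return dict(self.rows[home_id])


class HomesDataServiceClient:
    """New path: ask the homes data service, which owns the data store.
    A real client would make a network call; this stub counts calls."""

    def __init__(self, service_rows: dict[int, dict]) -> None:
        self.service_rows = service_rows
        self.calls = 0

    def find(self, home_id: int) -> dict:
        self.calls += 1
        return dict(self.service_rows[home_id])


class Home:
    """Model-layer entry point. Every caller keeps using Home.find();
    switching `backend` reroutes all of them at once."""

    backend: HomesBackend

    @classmethod
    def find(cls, home_id: int) -> dict:
        return cls.backend.find(home_id)


rows = {1: {"id": 1, "city": "Lisbon"}}
Home.backend = DirectDatabase(rows)
before = Home.find(1)
client = HomesDataServiceClient(rows)
Home.backend = client  # flip the interception point
after = Home.find(1)
print(before == after, client.calls)  # True 1
```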

After creating the core data services, Airbnb migrated the core business logic to the SOA approach as well.

For example, they migrated the pricing derived data service, which needed information about a home from the homes data service as well as data from other stores, such as offline price and trends.

The next step involved migrating presentation services such as the checkout presentation service that depends on pricing information and homes information from the derived data service and the core data service.

All of these changes were part of Version 2 where both the monorail and the new services co-existed within the same request cycle.

Version 3

In this version of the migration journey, the monorail was completely eliminated.

The client makes a request to the API gateway, which acts as a service layer responsible for middleware and routing. The gateway populates the request context and routes the requests to the SOA network where the various services are responsible for the presentation logic and data access logic.
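A minimal sketch of the gateway’s two jobs described above: populating a request context once and routing the request to a service. The context fields, header names and prefix-based routing are assumptions for illustration, not a description of Airbnb’s actual gateway.

```python
import uuid
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class RequestContext:
    """Hypothetical context the gateway populates once per request."""
    request_id: str
    user_id: Optional[str]
    locale: str


Handler = Callable[[RequestContext, dict], dict]


class ApiGateway:
    """Sketch: run shared middleware once, then route by path prefix."""

    def __init__(self) -> None:
        self._routes: dict[str, Handler] = {}

    def register(self, prefix: str, handler: Handler) -> None:
        self._routes[prefix] = handler

    def handle(self, path: str, headers: dict, body: dict) -> dict:
        # Middleware stand-in: real authentication, rate limiting and so on
        # would run here; this sketch only reads headers.
        ctx = RequestContext(
            request_id=headers.get("X-Request-Id") or str(uuid.uuid4()),
            user_id=headers.get("X-User-Id"),
            locale=headers.get("Accept-Language", "en"),
        )
        # Longest matching prefix wins, so "/api/checkout" beats "/api".
        for prefix in sorted(self._routes, key=len, reverse=True):
            if path.startswith(prefix):
                return self._routes[prefix](ctx, body)
        return {"status": 404, "request_id": ctx.request_id}


gateway = ApiGateway()
gateway.register("/api", lambda ctx, body: {
    "status": 200, "service": "default-presentation", "user": ctx.user_id})
gateway.register("/api/checkout", lambda ctx, body: {
    "status": 200, "service": "checkout-presentation", "user": ctx.user_id})
resp = gateway.handle("/api/checkout/123", {"X-User-Id": "u1"}, {})
print(resp["service"], resp["user"])  # checkout-presentation u1
```

Because the context is built once at the edge, downstream services receive the same request ID and user identity instead of re-deriving them.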

The web client is handled a little differently. There’s a specific service to handle the web requests.

Why is it needed?

This service returns the HTML to the web client by calling the API Gateway and populating the response received in the required format. The API Gateway takes care of all the middleware functions and propagates the request through the SOA network.

The below diagram tries to show this scenario:

Migrating Reads and Writes

By now, you may have realized that going from a monolithic architecture to a service-oriented one is not an overnight process.

For Airbnb, a lot of time was spent in the middle phase where both the monorail and the new services had to be supported as first-class citizens.

A request could go through either the monorail or the services. It was therefore critical to ensure that functionality did not break on either route and that both routes returned equivalent responses.

To support this, Airbnb built comparison frameworks for reads as well as writes.

Reads

These comparison frameworks were first used for read operations because reads are idempotent: you can issue the same read request multiple times and get the same response without changing any data.

The idea was to issue dual reads and compare the response from read path A that went through the monorail with the response from read path B that went through the new services. The captured responses were then emitted as standard events that were consumed and sent to an offline comparison framework.

The comparison framework was placed behind an admin tool so that traffic could be controlled without code changes or deployments. Once discrepancies in the responses were fixed, Airbnb engineers could slowly ramp up traffic through the service path and monitor the comparison for differences.

Once the comparison looked clean, all read requests were moved to the new service.
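The dual-read flow above can be sketched as follows. This assumes both read paths are plain functions and that an in-memory list stands in for the event stream feeding the offline comparison; `DualReader`, `offline_mismatch_rate` and `ramp_percent` (playing the role of the admin-tool knob) are hypothetical names.

```python
import random
from typing import Callable, Optional

Read = Callable[[str], dict]


class DualReader:
    """Reads through both paths and emits both responses as one event."""

    def __init__(self, monolith_read: Read, service_read: Read,
                 ramp_percent: float = 0.0,
                 rng: Optional[random.Random] = None) -> None:
        self.monolith_read = monolith_read
        self.service_read = service_read
        self.ramp_percent = ramp_percent  # share of traffic *served* by path B
        self.rng = rng or random.Random()
        self.events: list[dict] = []  # stand-in for a real event stream

    def read(self, key: str) -> dict:
        path_a = self.monolith_read(key)  # read path A: the monorail
        path_b = self.service_read(key)   # read path B: the new services
        self.events.append({"key": key, "path_a": path_a, "path_b": path_b})
        serve_b = self.rng.random() * 100 < self.ramp_percent
        return path_b if serve_b else path_a


def offline_mismatch_rate(events: list[dict]) -> float:
    """Offline comparison job: fraction of captured reads that differ."""
    if not events:
        return 0.0
    mismatches = sum(1 for e in events if e["path_a"] != e["path_b"])
    return mismatches / len(events)


def monolith_read(key: str) -> dict:
    return {"id": key, "price": 100}


def service_read(key: str) -> dict:
    return {"id": key, "price": 101 if key == "b" else 100}  # deliberate bug


reader = DualReader(monolith_read, service_read, ramp_percent=0.0)
for key in ["a", "b", "c"]:
    reader.read(key)
print(round(offline_mismatch_rate(reader.events), 3))  # 0.333
```

With `ramp_percent` at 0, users only ever see the monolith’s answer while the mismatch on key `b` is still recorded, which is what makes it safe to fix bugs before ramping up.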

Writes

For writes, things had to be done differently because one cannot dual write to the same database. Instead, a shadow database was utilized.

Let’s say the monorail is making a call to a presentation service that’s hitting the production database. This is write path A.

Now, a middle-tier service is introduced to offload some validations from the presentation service. Initially, this middle-tier service will write to a shadow database instead of the main production database.

At this point, it becomes easy to issue strongly consistent read requests to both the production and shadow databases and compare the results.

Once the comparison is clean, the writes can be moved to the new service and pointed at the production database.
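A sketch of the shadow-write pattern under simplifying assumptions: the two "databases" are in-memory dictionaries (so every read is trivially strongly consistent), and `write_path_a`, `write_path_b` and `ShadowWriteRollout` are hypothetical names. Until cutover, production stays the source of truth and the new path only ever touches the shadow store.

```python
from typing import Callable

Store = dict[str, dict]
Writer = Callable[[Store, str, dict], None]


def write_path_a(store: Store, key: str, record: dict) -> None:
    """Old path (hypothetical): the presentation service validates and writes."""
    if record.get("guests", 0) < 1:
        raise ValueError("a reservation needs at least one guest")
    store[key] = dict(record)


def write_path_b(store: Store, key: str, record: dict) -> None:
    """New path (hypothetical): the same validation, offloaded to a
    middle-tier service."""
    if record.get("guests", 0) < 1:
        raise ValueError("a reservation needs at least one guest")
    store[key] = dict(record)


class ShadowWriteRollout:
    """Old path writes production; new path writes a shadow store until cutover."""

    def __init__(self, old_write: Writer, new_write: Writer) -> None:
        self.old_write, self.new_write = old_write, new_write
        self.production: Store = {}
        self.shadow: Store = {}
        self.shadow_errors: list[str] = []
        self.cut_over = False  # flip only once diff() has stayed empty

    def write(self, key: str, record: dict) -> None:
        if self.cut_over:
            self.new_write(self.production, key, record)  # new path, real DB
            return
        self.old_write(self.production, key, record)  # still the source of truth
        try:
            self.new_write(self.shadow, key, record)  # shadow copy only
        except Exception:
            # A shadow failure must never fail the user's request; record it
            # so the missing shadow row also shows up in diff().
            self.shadow_errors.append(key)

    def diff(self) -> list[str]:
        """Keys whose production and shadow rows disagree."""
        keys = self.production.keys() | self.shadow.keys()
        return sorted(k for k in keys if self.production.get(k) != self.shadow.get(k))


rollout = ShadowWriteRollout(write_path_a, write_path_b)
rollout.write("res-1", {"guests": 2})
print(rollout.diff())  # []
```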

Pros and Cons of SOA

As Airbnb migrated from the monorail to an SOA-based architecture, some pros and cons became obvious.

Some pros were as follows:

The system became more reliable and highly available. Even if one service went down, other parts of the service-oriented architecture could still function.

Services were now individually scalable, allowing fine-tuning of the resource allocation depending on the real needs of the system.

Increased business agility due to separating different parts of the product into different services. Each team could iterate in parallel.

However, there were some cons as well:

Engineers can take more time to ship a feature in a service-oriented architecture because they first need to familiarize themselves with the various services. Also, any change potentially involves multiple services.

Even though services were loosely coupled, certain patterns of logic had to be repeated across different services.

The dependency graph can become complicated, especially when there is a lack of API governance. This can also result in circular dependencies and make it difficult for engineers to debug errors.

Tools and Techniques to Support SOA

As we saw in the previous section, the migration to SOA introduced multiple challenges for the Airbnb engineering team.

For example, a single request now fans out to multiple services, which increases the chance of failure. Also, splitting the data model across multiple databases is good for service-level consistency, but it makes transactions that span services more difficult to enforce.
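A quick back-of-the-envelope calculation shows why fan-out hurts. Assume each of the n services a request touches succeeds independently with probability p (a simplification, since real failures are often correlated):

```latex
P(\text{request succeeds}) = p^{n}, \qquad
p = 0.999,\; n = 10 \;\Rightarrow\; 0.999^{10} \approx 0.990
```

So roughly 1% of requests fail even though each individual service fails only 0.1% of the time.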

Service orchestration also became more complex over time. With hundreds of engineers building services, Airbnb needed many more EC2 instances. Ultimately, this warranted a move towards using Kubernetes.

To make it easy for the engineering team to build services, the infrastructure team at Airbnb created a lot of building blocks along the journey.

API Framework

Airbnb created an in-house API framework built on Thrift’s interface definition language.

This framework is used by all Airbnb services to define clean APIs that can talk to each other.

For example, let’s say Service A wants to talk to Service B. The Service B engineer only has to define the endpoint in Thrift, and the framework auto-generates the endpoint logic that handles common concerns such as schema validation, observability metrics and so on.

Also, it creates a multi-threaded RPC client that Service A can use to talk to Service B. The client handles various functionalities such as retry logic, error propagation and transport.

What’s the advantage of this?

Engineers can focus on handling the core business logic and not spend any time worrying about the details of inter-service communication.
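The retry and error-propagation behavior of such a generated client might look roughly like the sketch below. The real framework generates its clients from Thrift definitions; `TransientError`, `call_with_retries` and the backoff parameters here are assumptions for illustration.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical error type for failures worth retrying, such as timeouts."""


def call_with_retries(call: Callable[[], T], attempts: int = 3,
                      base_delay: float = 0.05,
                      sleep: Callable[[float], None] = time.sleep) -> T:
    """Retry `call` on TransientError with exponential backoff.

    Any other exception propagates immediately so the caller sees the real
    error; after the last attempt the TransientError itself is re-raised.
    """
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return call()
        except TransientError:
            if attempt == attempts - 1:
                raise
            sleep(base_delay * 2 ** attempt)  # 0.05s, 0.1s, 0.2s, ...
    raise RuntimeError("unreachable")


attempts_seen = []


def flaky_get_listing() -> dict:
    """Hypothetical remote call that times out twice, then succeeds."""
    attempts_seen.append(1)
    if len(attempts_seen) < 3:
        raise TransientError("timeout")
    return {"listing_id": 7}


print(call_with_retries(flaky_get_listing, sleep=lambda s: None))  # {'listing_id': 7}
```

Injecting `sleep` keeps the helper testable without real delays; only errors explicitly marked as transient are retried, so retries never mask genuine bugs.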

To boost developer productivity, the Airbnb infra team also developed the API Explorer where engineers can browse different services, figure out which endpoints to call and even use an API playground to figure out how to call those endpoints.

Automated Canary Analysis with Spinnaker

Airbnb also leverages Spinnaker, an open-source continuous delivery platform.

Spinnaker is used for application management and deployment across various cloud platforms. It supports all major platforms such as AWS, Azure, Kubernetes and so on, making it extremely easy to spin up new deployment environments.

You can create pipelines in Spinnaker representing a particular delivery process that starts with the creation of a build artifact and goes all the way to deploying the artifact in an environment.

With Spinnaker, Airbnb was able to easily set up the environments for performing automated canary analysis.

Basically, they deploy both the old and new versions to two temporary environments and route a small percentage of traffic to each of them.

Based on the traffic analysis and error rates, an aggregate score is generated for the canary environment that helps decide whether to fail or promote the canary to the next stage in the deployment process.
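A toy version of the scoring step, assuming every metric is "lower is better" and that the aggregate score is simply the percentage of metrics on which the canary stays within a tolerance of the baseline. The metric names, tolerances and the 75-point threshold are made up for illustration; Spinnaker’s own canary analysis uses its own configurable statistical comparison.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MetricComparison:
    """One metric observed on baseline and canary (lower is better)."""
    name: str
    baseline: float
    canary: float
    tolerance: float  # allowed relative increase, e.g. 0.10 means 10%


def metric_passes(m: MetricComparison) -> bool:
    return m.canary <= m.baseline * (1 + m.tolerance)


def canary_score(metrics: list[MetricComparison]) -> float:
    """Aggregate score: percentage of metrics where the canary is acceptable."""
    if not metrics:
        raise ValueError("need at least one metric to score a canary")
    passed = sum(1 for m in metrics if metric_passes(m))
    return 100.0 * passed / len(metrics)


def decide(metrics: list[MetricComparison], threshold: float = 75.0) -> str:
    """Promote the canary to the next stage, or fail it."""
    return "promote" if canary_score(metrics) >= threshold else "fail"


metrics = [
    MetricComparison("error_rate", baseline=0.010, canary=0.0105, tolerance=0.10),
    MetricComparison("p99_latency_ms", baseline=250, canary=260, tolerance=0.10),
    MetricComparison("cpu_utilization", baseline=0.50, canary=0.70, tolerance=0.20),
    MetricComparison("memory_gb", baseline=1.0, canary=1.05, tolerance=0.10),
]
print(canary_score(metrics), decide(metrics))  # 75.0 promote
```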

Powergrid

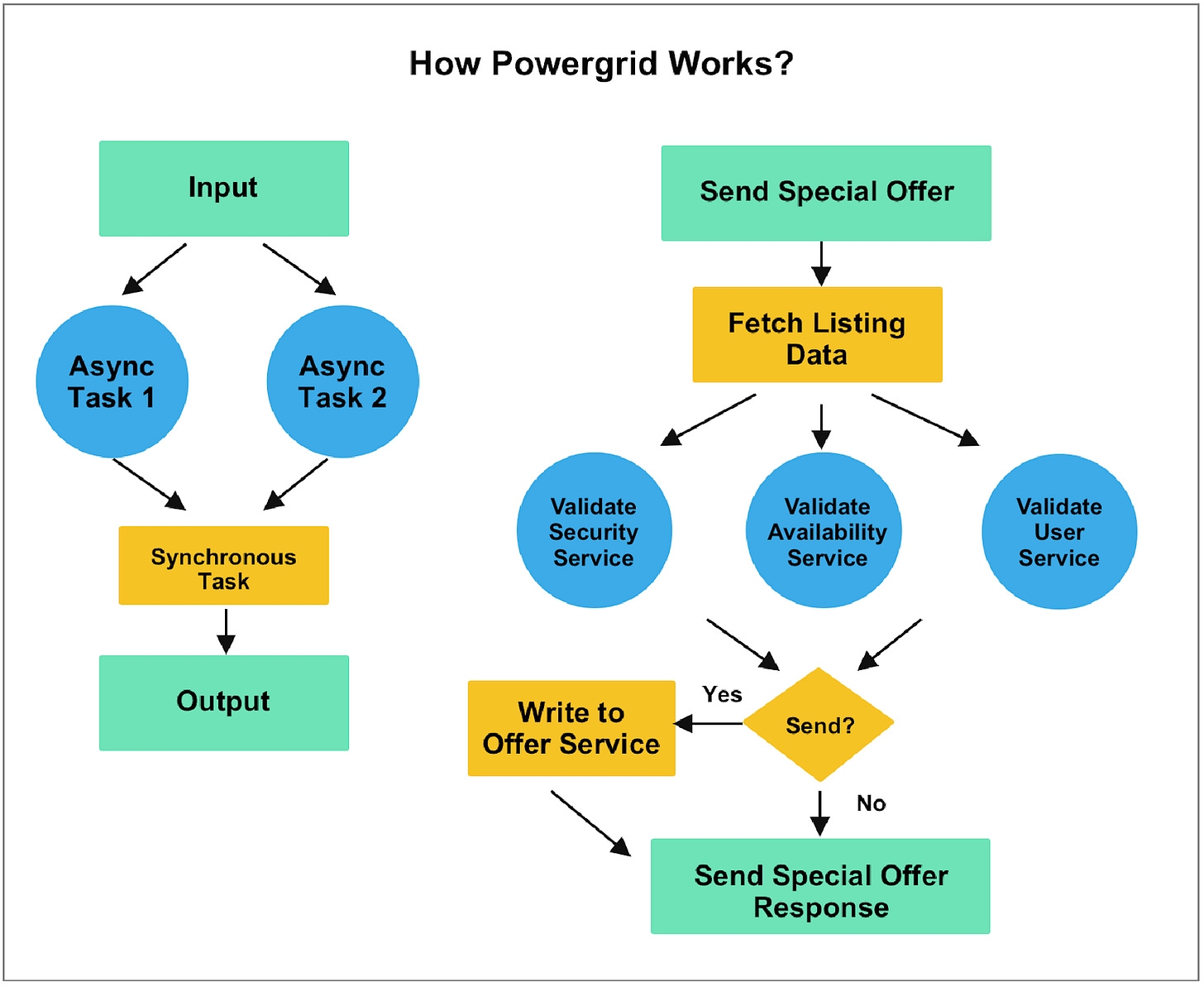

Airbnb also built an in-house library called Powergrid that makes running tasks in parallel easy.

With Powergrid, they were able to organize the code execution as a Directed Acyclic Graph (DAG).

Each node of this DAG is a function or a task. Using this, the Airbnb engineers can model each service endpoint as a data flow with requests as the input and response as the output.

Since Powergrid supports multithreading and concurrency, it can be used to run tasks in parallel.

The below diagram shows the concept of Powergrid.

For example, suppose a host wants to send a special offer to a guest. Before this can happen, multiple checks and validations must be performed.

With Powergrid, these validations can be performed by the respective services in parallel. After aggregating the responses, the special offer can be sent to the guest.
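The DAG idea behind Powergrid can be illustrated with Python’s standard `concurrent.futures`. This is not Powergrid’s API, which the article does not show; `run_dag` and the special-offer checks are hypothetical. Each node starts as soon as its inputs are ready, so the three independent validations run in parallel before the offer step.

```python
from concurrent.futures import FIRST_COMPLETED, Future, ThreadPoolExecutor, wait
from typing import Any, Callable

Task = tuple[Callable[..., Any], list[str]]


def run_dag(tasks: dict[str, Task], inputs: dict[str, Any],
            max_workers: int = 8) -> dict[str, Any]:
    """Run every task as soon as all of its dependencies have finished.

    `tasks` maps a node name to (function, dependency names); each function
    receives its dependencies' results as keyword arguments. `inputs` seeds
    source nodes such as the incoming request.
    """
    results: dict[str, Any] = dict(inputs)
    pending = dict(tasks)
    running: dict[Future, str] = {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        while pending or running:
            ready = [name for name, (_, deps) in pending.items()
                     if all(dep in results for dep in deps)]
            for name in ready:
                fn, deps = pending.pop(name)
                kwargs = {dep: results[dep] for dep in deps}
                running[pool.submit(fn, **kwargs)] = name
            if not running:
                raise ValueError(f"cycle or missing input among {sorted(pending)}")
            done, _ = wait(running, return_when=FIRST_COMPLETED)
            for future in done:
                results[running.pop(future)] = future.result()
    return results


def check_host(request):  # hypothetical validation calls
    return request["host_id"] == "h1"


def check_dates(request):
    return request["nights"] > 0


def check_guest(request):
    return request["guest_id"] != "blocked"


def send_offer(host_ok, dates_ok, guest_ok):
    return "sent" if host_ok and dates_ok and guest_ok else "rejected"


special_offer = {
    "host_ok": (check_host, ["request"]),    # these three do not depend on
    "dates_ok": (check_dates, ["request"]),  # each other, so they can run
    "guest_ok": (check_guest, ["request"]),  # concurrently
    "offer": (send_offer, ["host_ok", "dates_ok", "guest_ok"]),
}
out = run_dag(special_offer, {"request": {"host_id": "h1", "guest_id": "g7", "nights": 3}})
print(out["offer"])  # sent
```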

Simplifying Service Dependencies

Once Airbnb started down the path of SOA, there was no turning back.

However, initially there was a lack of service governance and dependency management that led to a complicated service interaction graph. It’s always a risky situation in a service-oriented approach where the call graph becomes extremely complex.

You end up with reduced speed of development for any new change.

Also, maintenance becomes difficult.

To handle this situation, Airbnb decided to simplify the service dependencies using the concept of Service Blocks.

Basically, you can think of each block as a collection of services related to a particular business functionality.

For example, the Listing Block encapsulates both the data and business logic related to the core listing attributes. Similarly, you can have other blocks such as the User Block and Availability Block.

The Block can then expose a nice and clean facade with consistent read and write endpoints to upstream clients. Under the hood, the facade orchestrates the coordination between data and business logic services as needed. Also, strict checks are implemented to prevent direct calls to any internal services within the block.

This approach greatly reduces the complexity of a service-oriented call graph.
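The facade-plus-strict-checks idea can be sketched with a small registry. Everything here is hypothetical: `BlockRegistry`, `InternalCallError` and the listing services are illustrative names, and the caller’s block is passed in explicitly, whereas a real system would derive caller identity from service authentication.

```python
from typing import Any, Callable


class InternalCallError(PermissionError):
    """Raised when a caller outside a block targets one of its internals."""


class BlockRegistry:
    """Hypothetical registry that enforces Service Block boundaries."""

    def __init__(self) -> None:
        # service name -> (owning block, handler, is_facade)
        self._services: dict[str, tuple[str, Callable[..., Any], bool]] = {}

    def register(self, name: str, block: str, handler: Callable[..., Any],
                 facade: bool = False) -> None:
        self._services[name] = (block, handler, facade)

    def call(self, caller_block: str, name: str, *args: Any) -> Any:
        block, handler, facade = self._services[name]
        # Services inside a block may call each other; everyone else may
        # only reach the block through its facade.
        if caller_block != block and not facade:
            raise InternalCallError(f"{name} is internal to the {block} block")
        return handler(*args)


registry = BlockRegistry()
registry.register("listing-data", "listing",
                  lambda listing_id: {"id": listing_id, "title": "sunny loft"})
registry.register("listing-logic", "listing",
                  lambda row: {**row, "title": row["title"].title()})
registry.register(
    "listing-facade", "listing",
    lambda listing_id: registry.call(
        "listing", "listing-logic",
        registry.call("listing", "listing-data", listing_id)),
    facade=True,
)

print(registry.call("checkout", "listing-facade", 7))  # {'id': 7, 'title': 'Sunny Loft'}
```

Upstream clients see one entry point per block, so the internal split between data and business-logic services can change without touching any caller.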

Conclusion

To conclude, Airbnb learned several important lessons during its migration from the monorail to SOA.

Here are a few important takeaways:

Invest in common infrastructure early

Prioritize the simplification of service dependencies

Make the necessary cultural changes to enable a service-oriented approach

SOA is not a fixed destination but a journey of continuous improvement and refinement.
