EP111: My Favorite 10 Books for Software Developers

This week’s system design refresher:

10 Coding Principles Explained in 5 Minutes (YouTube video)

My Favorite 10 Books for Software Developers

25 Papers That Completely Transformed the Computer World

Change Data Capture: Key to Leverage Real-time Data

IPv4 vs. IPv6, what are the differences?

SPONSOR US


10 Coding Principles Explained in 5 Minutes

My Favorite 10 Books for Software Developers

General Advice

The Pragmatic Programmer by Andrew Hunt and David Thomas

Code Complete by Steve McConnell: Often considered a bible for software developers, this comprehensive book covers all aspects of software development, from design and coding to testing and maintenance.

Coding

Clean Code by Robert C. Martin

Refactoring by Martin Fowler

Software Architecture

Designing Data-Intensive Applications by Martin Kleppmann

System Design Interview (our own book :))

Design Patterns

Design Patterns by Erich Gamma and others

Domain-Driven Design by Eric Evans

Data Structures and Algorithms

Introduction to Algorithms by Cormen, Leiserson, Rivest, and Stein

Cracking the Coding Interview by Gayle Laakmann McDowell

Over to you: What is your favorite book?

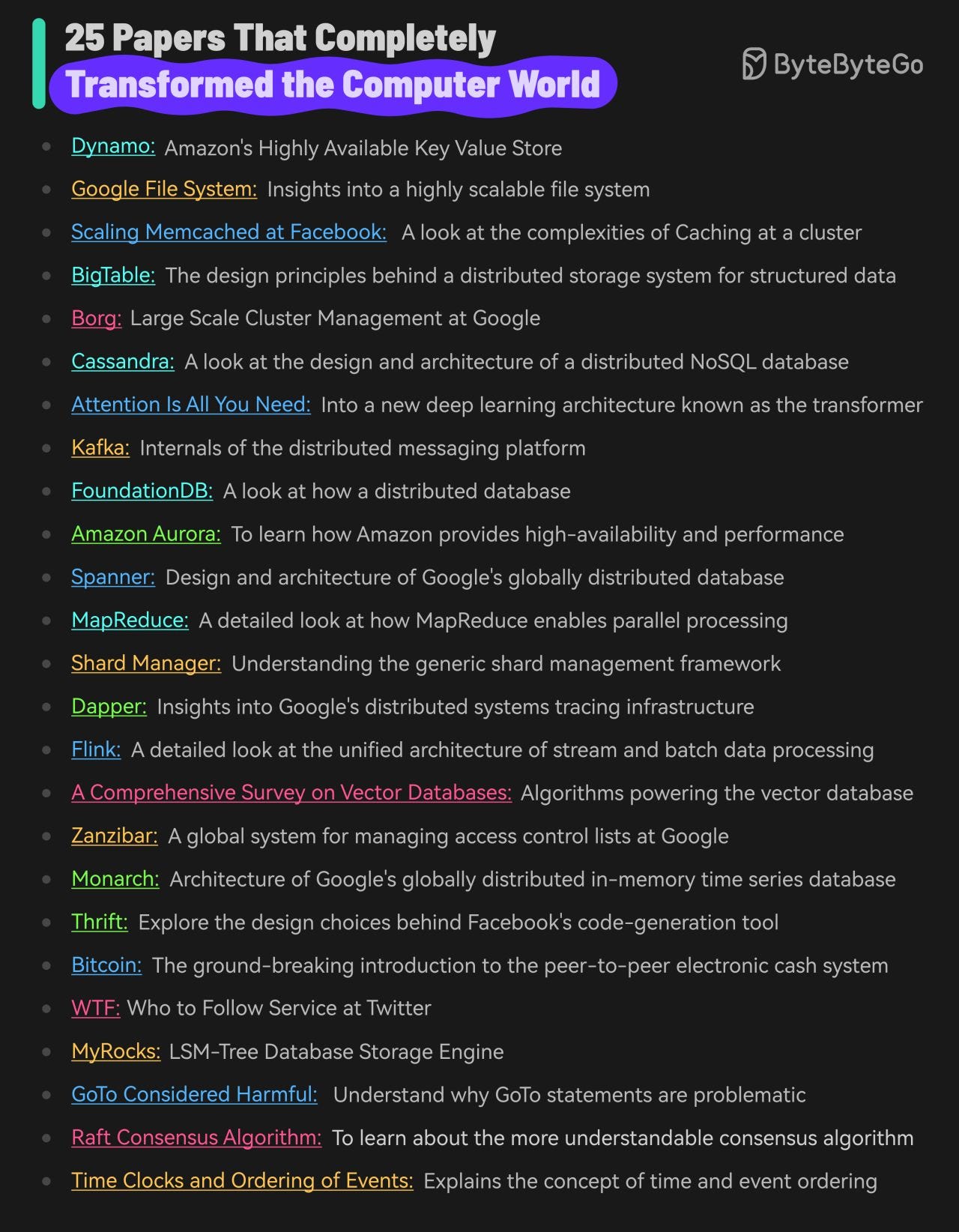

25 Papers That Completely Transformed the Computer World

Google File System: Insights into a highly scalable file system

Scaling Memcached at Facebook: A look at the complexities of Caching

BigTable: The design principles behind a distributed storage system

Cassandra: A look at the design and architecture of a distributed NoSQL database

Attention Is All You Need: An introduction to a new deep learning architecture known as the transformer

Kafka: Internals of the distributed messaging platform

FoundationDB: A look at how a distributed database works

Amazon Aurora: How Amazon provides high availability and performance

Spanner: Design and architecture of Google’s globally distributed database

MapReduce: A detailed look at how MapReduce enables parallel processing of massive volumes of data

Shard Manager: Understanding the generic shard management framework

Dapper: Insights into Google’s distributed systems tracing infrastructure

Flink: A detailed look at the unified architecture of stream and batch processing

Zanzibar: A look at the design, implementation and deployment of a global system for managing access control lists at Google

Monarch: Architecture of Google’s in-memory time series database

Thrift: Explore the design choices behind Facebook’s code-generation tool

Bitcoin: The ground-breaking introduction to the peer-to-peer electronic cash system

WTF - Who to Follow Service at Twitter: Twitter’s (now X) user recommendation system

Raft Consensus Algorithm: An introduction to a consensus algorithm designed to be easier to understand

Time, Clocks, and the Ordering of Events: The extremely important paper that explains the concept of time and event ordering in a distributed system

Over to you: I’m sure we missed many important papers. Which ones do you think should be included?

Change Data Capture: Key to Leverage Real-time Data

90% of the world’s data was created in the last two years and this growth will only get faster.

However, the biggest challenge is to leverage this data in real time. Constant data changes cause databases, data lakes, and data warehouses to fall out of sync.

CDC or Change Data Capture can help you overcome this challenge.

CDC identifies and captures changes made to the data in a database, allowing you to replicate and sync data across multiple systems.

So, how does Change Data Capture work? Here's a step-by-step breakdown:

1 - Data Modification: A change is made to the data in the source database. It could be an insert, update, or delete operation on a table.

2 - Change Capture: A CDC tool monitors the database transaction logs to capture the modifications. It uses the source connector to connect to the database and read the logs.

3 - Change Processing: The captured changes are processed and transformed into a format suitable for the downstream systems.

4 - Change Propagation: The processed changes are published to a message queue and propagated to the target systems, such as data warehouses, analytics platforms, distributed caches like Redis, and so on.

5 - Real-Time Integration: The CDC tool uses its sink connector to consume the log and update the target systems. The changes are received in real time, allowing for conflict-free data analysis and decision-making.

Users only need to take care of step 1 while all other steps are transparent.
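The five steps above can be sketched as a toy, in-memory pipeline. This is only an illustration under stated assumptions: `transaction_log`, `message_queue`, `cache`, and the function names are hypothetical stand-ins for a real database log, a message broker, and a target system, not the API of any CDC tool.

```python
from collections import deque

# Hypothetical in-memory stand-ins; a real deployment would use a database
# transaction log, a CDC tool, and a message broker instead.
transaction_log = []      # the source database's append-only change log
message_queue = deque()   # broker sitting between capture and the targets
cache = {}                # target system, keyed by primary key


def write(op, key, row=None):
    """Step 1: the application modifies data; the database appends to its log."""
    transaction_log.append({"op": op, "key": key, "row": row})


def capture_and_publish(offset):
    """Steps 2-4: read new log entries, reshape them, publish to the queue."""
    for entry in transaction_log[offset:]:
        event = {"type": entry["op"].upper(), "id": entry["key"], "data": entry["row"]}
        message_queue.append(event)
    return len(transaction_log)  # new offset, so entries are not re-read


def sink():
    """Step 5: consume events and apply them to the target."""
    while message_queue:
        event = message_queue.popleft()
        if event["type"] == "DELETE":
            cache.pop(event["id"], None)
        else:  # INSERT and UPDATE both upsert the latest row
            cache[event["id"]] = event["data"]


write("insert", 1, {"name": "Ada"})
write("update", 1, {"name": "Ada Lovelace"})
write("insert", 2, {"name": "Alan"})
write("delete", 2)

offset = capture_and_publish(0)
sink()
print(cache)  # {1: {'name': 'Ada Lovelace'}}
```

Only `write` is called by application code here, which mirrors the point that users handle step 1 while the remaining steps run behind the scenes.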

A popular CDC solution uses Debezium with Kafka Connect to stream data changes from the source to target systems using Kafka as the broker. Debezium has connectors for most databases such as MySQL, PostgreSQL, Oracle, etc.
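On the consuming side, a Debezium change event carries an `op` code (`c` for create, `u` for update, `d` for delete, `r` for a snapshot read) together with `before` and `after` row images. The sketch below applies such events to a plain dictionary. It assumes the events arrive as JSON without the optional schema wrapper and that the table has a primary key column named `id`; the function name and sample rows are made up for illustration, and no Kafka client is involved.

```python
import json


def apply_change_event(raw_event, target):
    """Apply one Debezium-style change event to a dict keyed by row id."""
    event = json.loads(raw_event)
    op = event["op"]
    if op in ("c", "r", "u"):          # create, snapshot read, update
        row = event["after"]
        target[row["id"]] = row        # assumes a primary key column "id"
    elif op == "d":                    # delete: only "before" is populated
        target.pop(event["before"]["id"], None)
    return target


# Hand-written sample events, in the order a consumer might receive them.
events = [
    '{"op": "c", "before": null, "after": {"id": 7, "email": "a@example.com"}}',
    '{"op": "u", "before": {"id": 7, "email": "a@example.com"},'
    ' "after": {"id": 7, "email": "b@example.com"}}',
    '{"op": "c", "before": null, "after": {"id": 8, "email": "c@example.com"}}',
    '{"op": "d", "before": {"id": 8, "email": "c@example.com"}, "after": null}',
]

replica = {}
for raw in events:
    apply_change_event(raw, replica)

print(replica)  # {7: {'id': 7, 'email': 'b@example.com'}}
```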

Over to you: have you leveraged CDC in your application before?

IPv4 vs. IPv6, what are the differences?

The transition from Internet Protocol version 4 (IPv4) to Internet Protocol version 6 (IPv6) is primarily driven by the need for more internet addresses, alongside the desire to streamline certain aspects of network management.

Format and Length

IPv4 uses a 32-bit address format, which is typically displayed as four decimal numbers separated by dots (e.g., 192.168.0.12). The 32-bit format allows for approximately 4.3 billion unique addresses, a number that is rapidly proving insufficient due to the explosion of internet-connected devices.
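To see where the 4.3 billion figure comes from, the short sketch below packs the four decimal numbers of the example address into a single 32-bit integer and checks the result against Python's standard `ipaddress` module. The variable names are illustrative only.

```python
import ipaddress

addr = ipaddress.IPv4Address("192.168.0.12")

# Pack the four octets into one 32-bit integer by hand: each octet is 8 bits.
octets = [int(part) for part in "192.168.0.12".split(".")]
packed = (octets[0] << 24) | (octets[1] << 16) | (octets[2] << 8) | octets[3]

print(packed)               # 3232235532
print(int(addr) == packed)  # True
print(2 ** 32)              # 4294967296 possible addresses
```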

In contrast, IPv6 utilizes a 128-bit address format, represented by eight groups of four hexadecimal digits separated by colons (e.g., 50B3:F200:0211:AB00:0123:4321:6571:B000). This expansion allows for approximately 3.4 × 10^38 unique addresses, ensuring the internet's growth can continue unabated.

Header

The IPv4 header is more complex and includes fields such as the header length, service type, total length, identification, flags, fragment offset, time to live (TTL), protocol, header checksum, source and destination IP addresses, and options.
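To make that field layout concrete, here is a minimal sketch that unpacks the fixed 20-byte part of an IPv4 header with Python's `struct` module. The `parse_ipv4_header` name and the sample packet values are made up for illustration; options are ignored and the checksum is neither computed nor verified.

```python
import ipaddress
import struct

# Fixed 20-byte IPv4 header layout in network byte order (no options).
IPV4_HEADER = struct.Struct("!BBHHHBBH4s4s")


def parse_ipv4_header(raw):
    """Unpack the fixed part of an IPv4 header into named fields."""
    (version_ihl, service_type, total_length, identification,
     flags_fragment, ttl, protocol, checksum, src, dst) = IPV4_HEADER.unpack(raw[:20])
    return {
        "version": version_ihl >> 4,                 # high 4 bits
        "header_length": (version_ihl & 0x0F) * 4,   # IHL counts 32-bit words
        "service_type": service_type,
        "total_length": total_length,
        "identification": identification,
        "flags": flags_fragment >> 13,               # top 3 bits
        "fragment_offset": flags_fragment & 0x1FFF,  # low 13 bits
        "ttl": ttl,
        "protocol": protocol,                        # 6 = TCP, 17 = UDP
        "header_checksum": checksum,
        "source": str(ipaddress.IPv4Address(src)),
        "destination": str(ipaddress.IPv4Address(dst)),
    }


# Hand-built sample header; the checksum is left at 0 rather than computed.
sample = IPV4_HEADER.pack(
    0x45, 0, 40, 0x1C46, 0x4000, 64, 6, 0,
    ipaddress.IPv4Address("192.168.0.12").packed,
    ipaddress.IPv4Address("10.0.0.1").packed,
)

header = parse_ipv4_header(sample)
print(header["version"], header["header_length"], header["ttl"])  # 4 20 64
print(header["source"], "->", header["destination"])  # 192.168.0.12 -> 10.0.0.1
```

Note how several fields share a byte or a 16-bit word and have to be separated with shifts and masks, which is part of what makes this header more involved to process.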

IPv6 headers are designed to be simpler and more efficient. The fixed header is 40 bytes, and less frequently used fields are moved into optional extension headers. The main fields include version, traffic class, flow label, payload length, next header, hop limit, and source and destination addresses. This simplification helps improve packet processing speeds.

Translation between IPv4 and IPv6

As the internet transitions from IPv4 to IPv6, mechanisms to allow these protocols to coexist have become essential:

- Dual Stack: This technique involves running IPv4 and IPv6 simultaneously on the same network devices. It allows seamless communication in both protocols, depending on the destination address availability and compatibility. The dual stack is considered one of the best approaches for the smooth transition from IPv4 to IPv6.

SPONSOR US

Get your product in front of more than 500,000 tech professionals.

Our newsletter puts your products and services directly in front of an audience that matters - hundreds of thousands of engineering leaders and senior engineers - who have influence over significant tech decisions and big purchases.

Space Fills Up Fast - Reserve Today

Ad spots typically sell out about 4 weeks in advance. To ensure your ad reaches this influential audience, reserve your space now by emailing hi@bytebytego.com

Test-Driven Development by Example, by Kent Beck, has got to be on that list of books.

Studied the Designing Data-Intensive Applications book when I was in uni and I learned A LOT from it. 100/10 would recommend